Exposing MCP from Legacy Java: Architecture Patterns That Actually Scale

How to integrate Model Context Protocol with Jakarta EE without breaking security, transactions, or your production systems

Large Language Models are no longer just consumers of public APIs. With the Model Context Protocol (MCP), they become first-class clients of enterprise systems. This creates a new architectural question that many organizations now face:

How do we expose MCP servers from legacy Jakarta EE applications without breaking everything we rely on today?

This article looks at that problem from an architect’s point of view. It does not assume greenfield systems. It assumes WildFly, Payara, WebLogic, SOAP endpoints, EJBs, shared databases, and real operational constraints.

The key insight is simple: MCP is not “just another API.”

It changes traffic patterns, trust boundaries, and scaling behavior. Treating it like REST leads to failure modes you already know too well.

MCP Changes the Shape of Integration

Traditional integrations assume:

Human-driven request rates

Predictable request shapes

Clear UI-to-backend boundaries

MCP breaks these assumptions.

An AI client can:

Invoke many tools in rapid succession

Call internal operations humans never directly touch

Generate load patterns that look like accidental denial-of-service

So before discussing implementation, we need to discuss where MCP lives in the system.

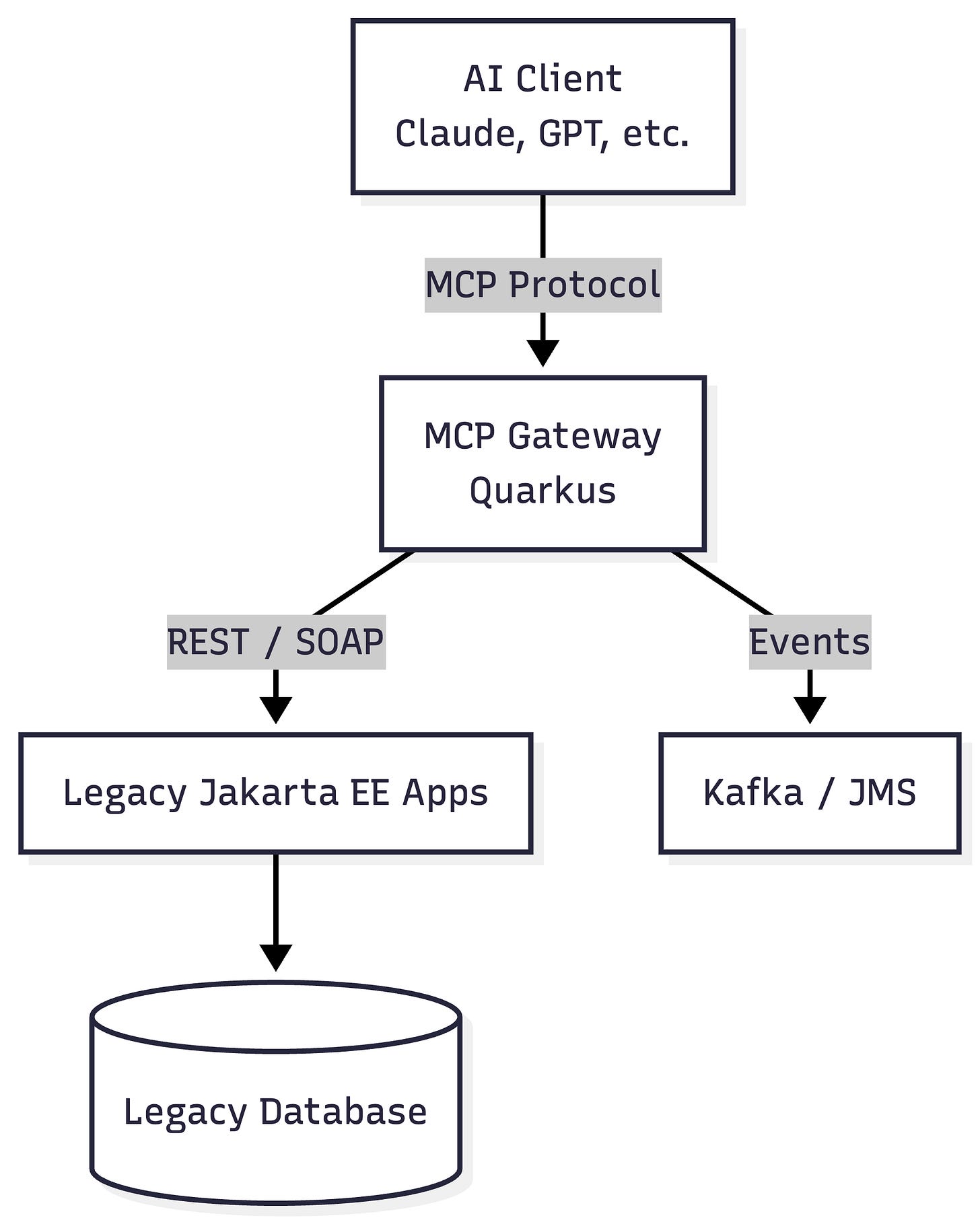

Pattern 1: MCP Gateway in Front of Legacy Systems

The most common and safest starting point is a dedicated MCP gateway implemented with a modern runtime such as Quarkus.

The gateway terminates the MCP protocol and translates MCP tool calls into legacy-friendly interactions. Quarkus is only one option here. Apache Camel or another integration runtime can fill the same role.

This pattern introduces a buffer zone between AI traffic and legacy systems.

Legacy applications remain unchanged

MCP evolves independently

Scaling AI traffic does not require scaling the legacy tier

Security policies are enforced before requests hit fragile systems

The Hidden Cost

You pay with duplication and indirection.

Semantic decisions often move into the gateway:

Which legacy calls to aggregate

Which fields matter to AI

How much data is “enough”

This is not wasted work, but it is real work. The gateway becomes a product, not a proxy.

Integration Styles Inside the Gateway

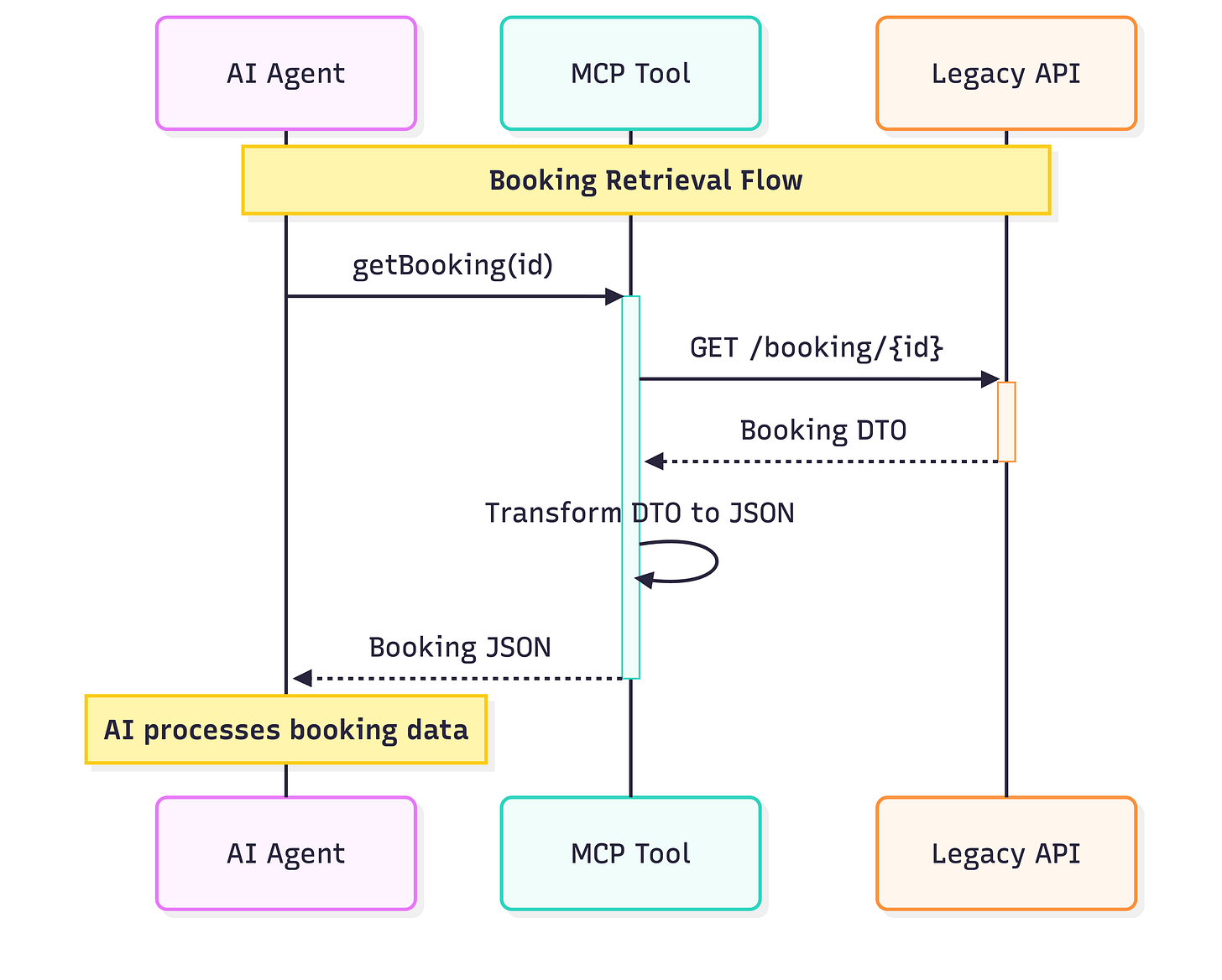

Direct Adapter (REST / SOAP)

The simplest option is a thin MCP tool mapped directly to an existing API.

This works, but it exposes every design mistake of the legacy API to the AI model. Chattiness becomes token waste. Inefficient APIs become expensive prompts.

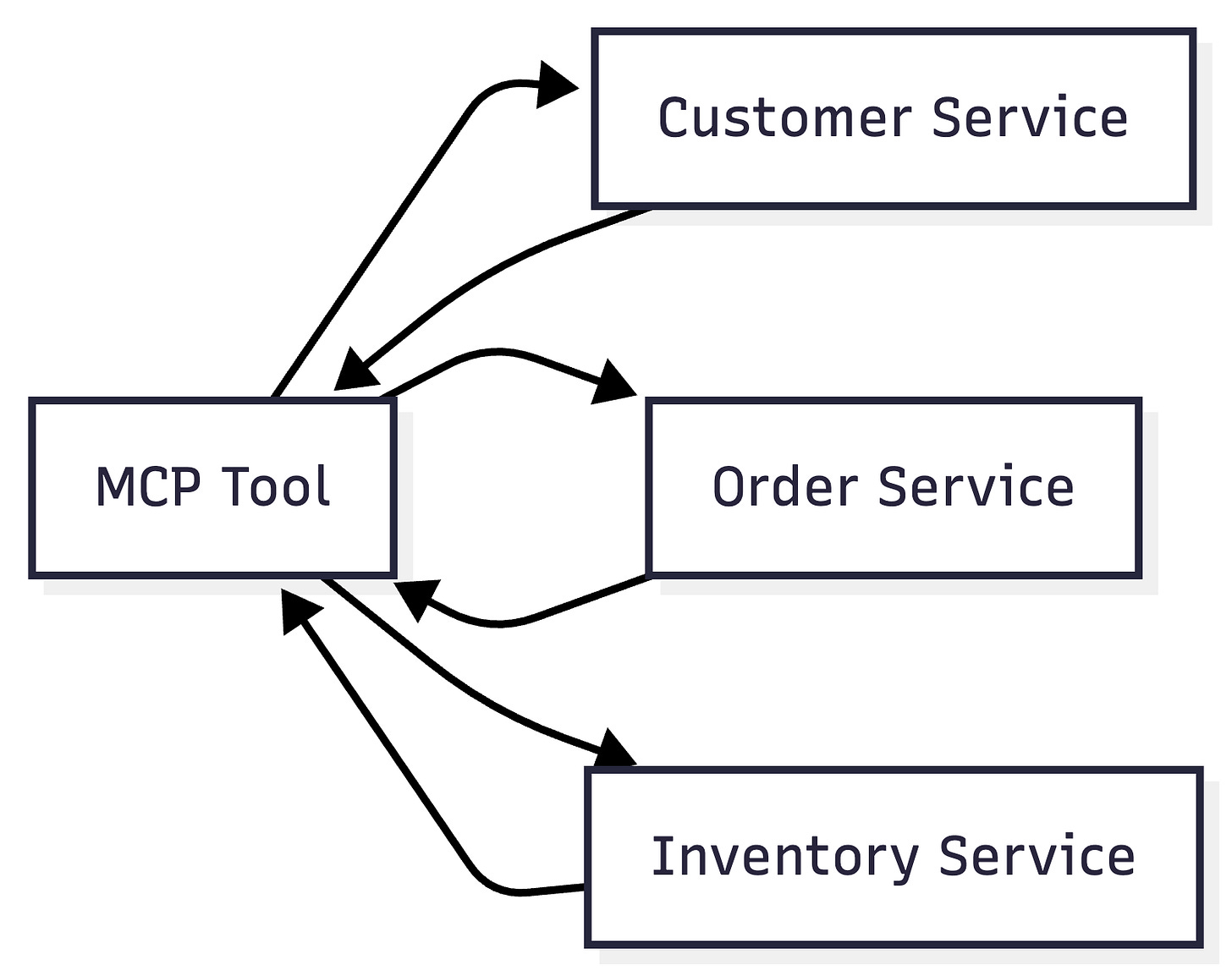

Semantic Aggregation Layer

A more sustainable option is to reshape legacy data into AI-friendly structures.

Now one MCP tool call produces a single semantic object. This reduces token usage, hides legacy complexity, and gives you a place to cache aggressively.

This layer is where architecture matters most.
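As a rough illustration of the aggregation idea, the sketch below folds three legacy lookups into one compact result and caches it. Everything named here (`CustomerSummary`, `CustomerSummaryTool`, the three lookup functions) is hypothetical, and the legacy calls are modeled as plain functions so the example runs without a container. It is not tied to any particular MCP library.

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

// Hypothetical AI-facing result: one compact object instead of three raw legacy payloads.
record CustomerSummary(String customerId, String name, int openOrders, String accountStatus) {}

final class CustomerSummaryTool {
    // Assumed stand-ins for legacy calls (for example a SOAP service, a REST endpoint, an EJB facade).
    private final Function<String, String> nameLookup;
    private final Function<String, List<String>> openOrderLookup;
    private final Function<String, String> statusLookup;

    // Naive in-memory cache; a real gateway would need expiry and invalidation.
    private final Map<String, CustomerSummary> cache = new ConcurrentHashMap<>();

    CustomerSummaryTool(Function<String, String> nameLookup,
                        Function<String, List<String>> openOrderLookup,
                        Function<String, String> statusLookup) {
        this.nameLookup = nameLookup;
        this.openOrderLookup = openOrderLookup;
        this.statusLookup = statusLookup;
    }

    // One tool call fans out to the legacy lookups once, then serves repeats from the cache.
    CustomerSummary summarize(String customerId) {
        return cache.computeIfAbsent(customerId, id -> new CustomerSummary(
                id,
                nameLookup.apply(id),
                openOrderLookup.apply(id).size(),
                statusLookup.apply(id)));
    }
}
```

The model sees only the four summary fields, so the shape of the legacy payloads can change without changing what the tool returns.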

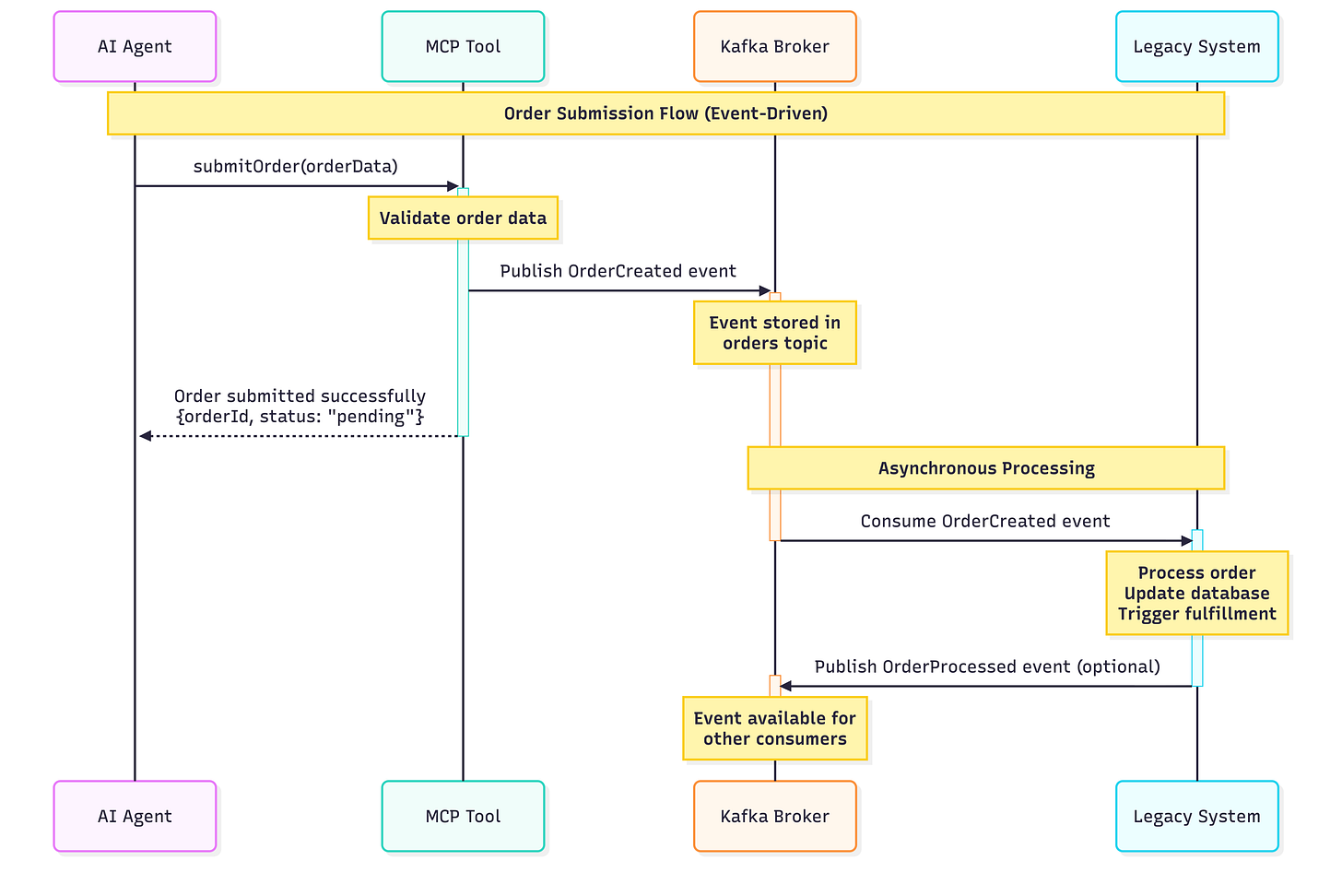

Pattern 2: Event-Driven MCP for Writes

Reads and writes behave differently under AI load. Writes deserve special care.

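Instead of calling the legacy system synchronously, the MCP tool publishes each write as an event that the legacy side consumes at its own pace. This pattern: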

Decouples MCP timing from legacy processing

Survives legacy downtime

Creates natural audit trails

The trade-off is that request-response semantics disappear. AI systems must accept eventual consistency.
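A minimal sketch of that trade-off, assuming an in-memory `BlockingQueue` as a stand-in for a durable broker such as JMS or Kafka. The names `WriteCommand`, `Accepted`, `AsyncWriteTool`, and `LegacyWriteConsumer` are invented for illustration. The tool returns an acknowledgement with a command ID right away, and a separate consumer applies the write later.

```java
import java.util.UUID;
import java.util.concurrent.BlockingQueue;
import java.util.function.Consumer;

// A write request captured as an immutable command (hypothetical shape).
record WriteCommand(String commandId, String agentId, String toolName, String payload) {}

// What the MCP tool returns immediately: an acknowledgement, not the final result.
record Accepted(String commandId, String status) {}

final class AsyncWriteTool {
    private final BlockingQueue<WriteCommand> outbox;

    AsyncWriteTool(BlockingQueue<WriteCommand> outbox) {
        this.outbox = outbox;
    }

    // Enqueues the write without blocking; a full queue is reported instead of stalling the caller.
    Accepted submit(String agentId, String toolName, String payload) {
        String id = UUID.randomUUID().toString();
        boolean queued = outbox.offer(new WriteCommand(id, agentId, toolName, payload));
        return new Accepted(id, queued ? "ACCEPTED" : "REJECTED_QUEUE_FULL");
    }
}

final class LegacyWriteConsumer {
    // Drains pending commands into the legacy call and returns how many were processed.
    // In production this would run on its own thread or as a message-driven component.
    static int drain(BlockingQueue<WriteCommand> outbox, Consumer<WriteCommand> legacyCall) {
        int processed = 0;
        WriteCommand command;
        while ((command = outbox.poll()) != null) {
            legacyCall.accept(command);
            processed++;
        }
        return processed;
    }
}
```

The AI client has to treat `ACCEPTED` as "queued", not "done", and needs some way to look up the outcome later by command ID.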

Pattern 3: Database-Level MCP Integration (Read-Only)

Some teams consider bypassing services and reading directly from the database.

This is fast and tempting. It is also dangerous.

It bypasses:

Business rules

Authorization logic

Audit trails

If used, it should be read-only, against replicas, and hidden behind views that encode domain meaning. Anything else becomes technical debt very quickly.
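One way to enforce the "views only" rule in code is an allow-list that refuses to build a query against anything else. The sketch below assumes two made-up view names and produces only a parameterized SQL string. Binding the value with a `PreparedStatement` and pointing the connection at a read replica are left out.

```java
import java.util.Set;

final class ReadOnlyViewQuery {
    // Hypothetical view names; only these are exposed to MCP tools.
    private static final Set<String> ALLOWED_VIEWS = Set.of("v_customer_overview", "v_open_orders");

    private ReadOnlyViewQuery() {}

    // Builds a parameterized SELECT against an allow-listed view.
    // Identifiers are validated here because they cannot be bound as statement parameters.
    static String selectById(String view, String idColumn) {
        if (!ALLOWED_VIEWS.contains(view)) {
            throw new IllegalArgumentException("View not exposed to MCP: " + view);
        }
        if (!idColumn.matches("[a-z_]+")) {
            throw new IllegalArgumentException("Invalid column name: " + idColumn);
        }
        return "SELECT * FROM " + view + " WHERE " + idColumn + " = ?";
    }
}
```

For example, `ReadOnlyViewQuery.selectById("v_open_orders", "customer_id")` yields a query string, while a base table name such as `customers` is rejected before any SQL is built.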

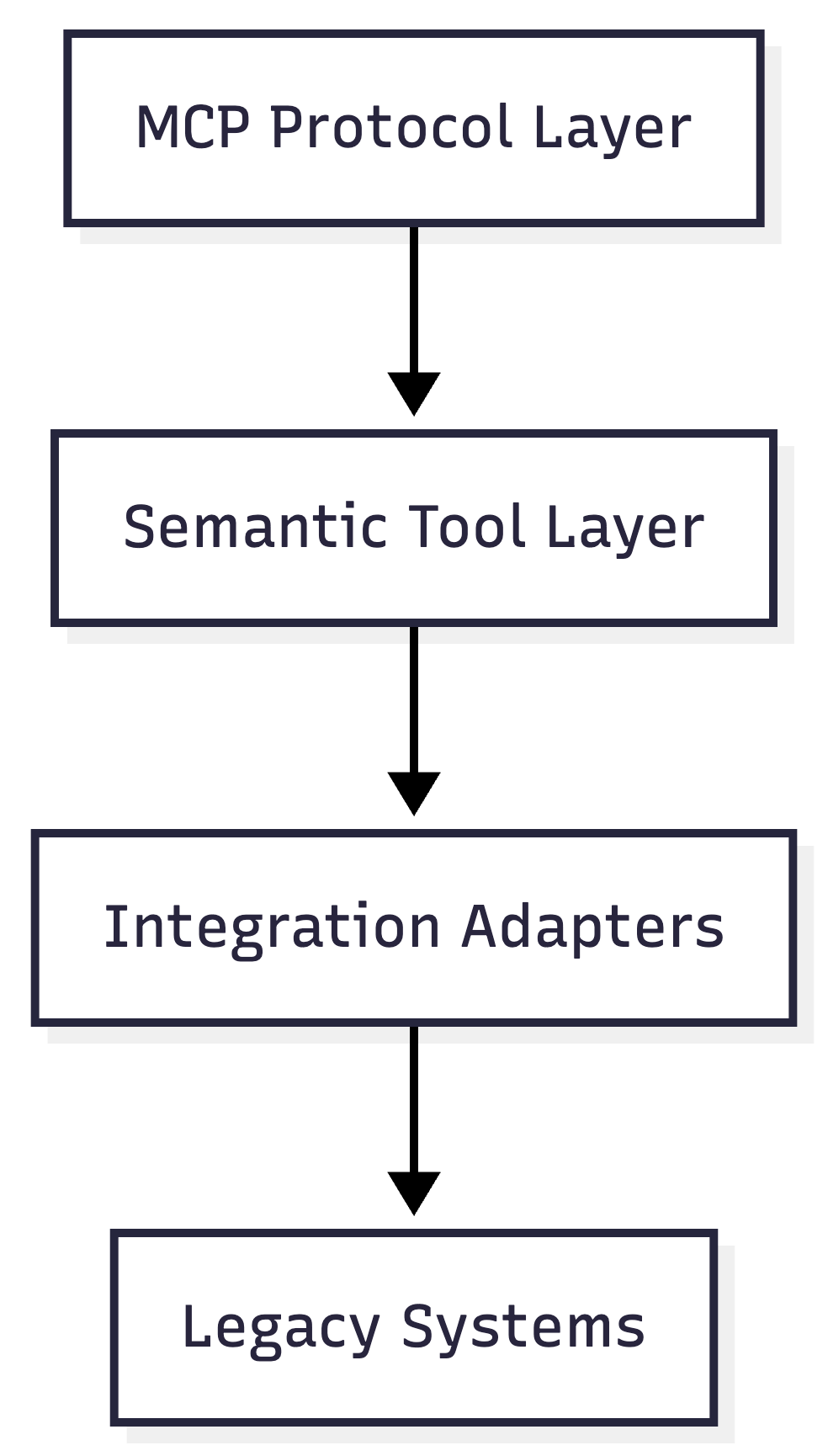

A Recommended Gateway Architecture

Putting these pieces together leads to a layered gateway model with three layers:

The protocol layer speaks MCP and enforces security

The tool layer defines AI-friendly capabilities

The adapter layer handles REST, messaging, and database access

This structure scales because responsibilities are cleanly separated.
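The separation can be sketched with three small types. This is an illustration only: `LegacyAdapter`, `McpTool`, `OrderStatusTool`, and `ProtocolLayer` are hypothetical names, the agent check is a simple allow-list standing in for real authentication, and no actual MCP wire format is handled here.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Adapter layer: the only code that knows how to reach a legacy system.
interface LegacyAdapter {
    String fetch(String key);
}

// Tool layer: an AI-facing capability expressed in domain terms.
interface McpTool {
    String name();
    String invoke(Map<String, String> args);
}

final class OrderStatusTool implements McpTool {
    private final LegacyAdapter orders;

    OrderStatusTool(LegacyAdapter orders) {
        this.orders = orders;
    }

    public String name() {
        return "order_status";
    }

    public String invoke(Map<String, String> args) {
        String orderId = args.get("orderId");
        return "Order " + orderId + " is " + orders.fetch(orderId);
    }
}

// Protocol layer: checks the caller, then dispatches by tool name.
final class ProtocolLayer {
    private final Set<String> knownAgents;
    private final Map<String, McpTool> tools = new HashMap<>();

    ProtocolLayer(Set<String> knownAgents, List<McpTool> registeredTools) {
        this.knownAgents = knownAgents;
        for (McpTool tool : registeredTools) {
            tools.put(tool.name(), tool);
        }
    }

    String handle(String agentId, String toolName, Map<String, String> args) {
        if (!knownAgents.contains(agentId)) {
            throw new SecurityException("Unknown agent: " + agentId);
        }
        McpTool tool = tools.get(toolName);
        if (tool == null) {
            throw new IllegalArgumentException("Unknown tool: " + toolName);
        }
        return tool.invoke(args);
    }
}
```

Because the tool depends only on the `LegacyAdapter` interface, swapping a REST adapter for a messaging or database adapter does not touch the tool or protocol code.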

But What If the Legacy App Exposes MCP Directly?

At some point, someone asks the uncomfortable question:

Can we just expose MCP endpoints directly from our Jakarta EE application?

Yes. Sometimes.

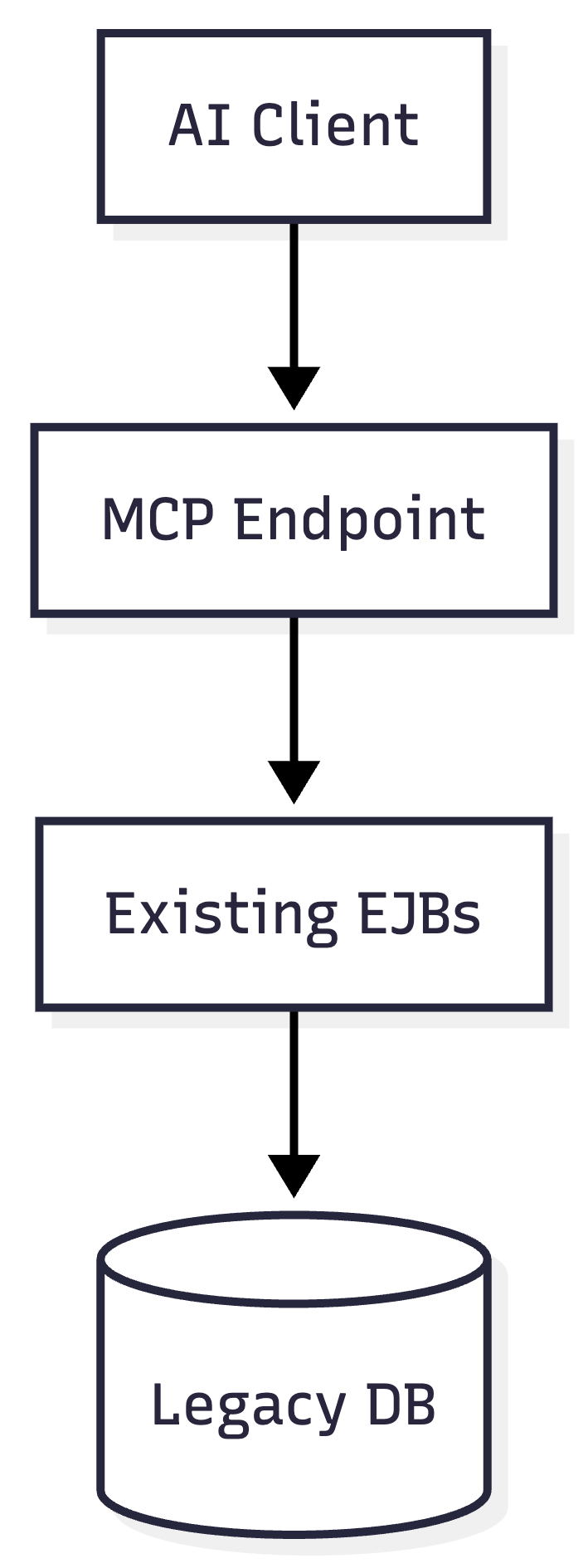

In-Process MCP Server Pattern

With CDI-based MCP support, MCP tools can live next to existing EJBs.

This approach feels elegant. No duplication. No gateway.

Why It Works

Zero business logic duplication

Shared transactions and security context

Direct access to internal APIs never exposed over REST

This is powerful. And risky. Direct exposure couples AI evolution to the legacy lifecycle:

AI traffic competes with human traffic

Scaling MCP means scaling the legacy app

Dependency conflicts become likely

Redeploying AI features requires redeploying the core system

Security exposure also increases. AI agents are not humans. Existing role models often do not fit.

This pattern is viable only when:

The app is relatively modern

Traffic volumes are controlled

AI agents are internal and trusted

Time-to-market is more important than long-term flexibility

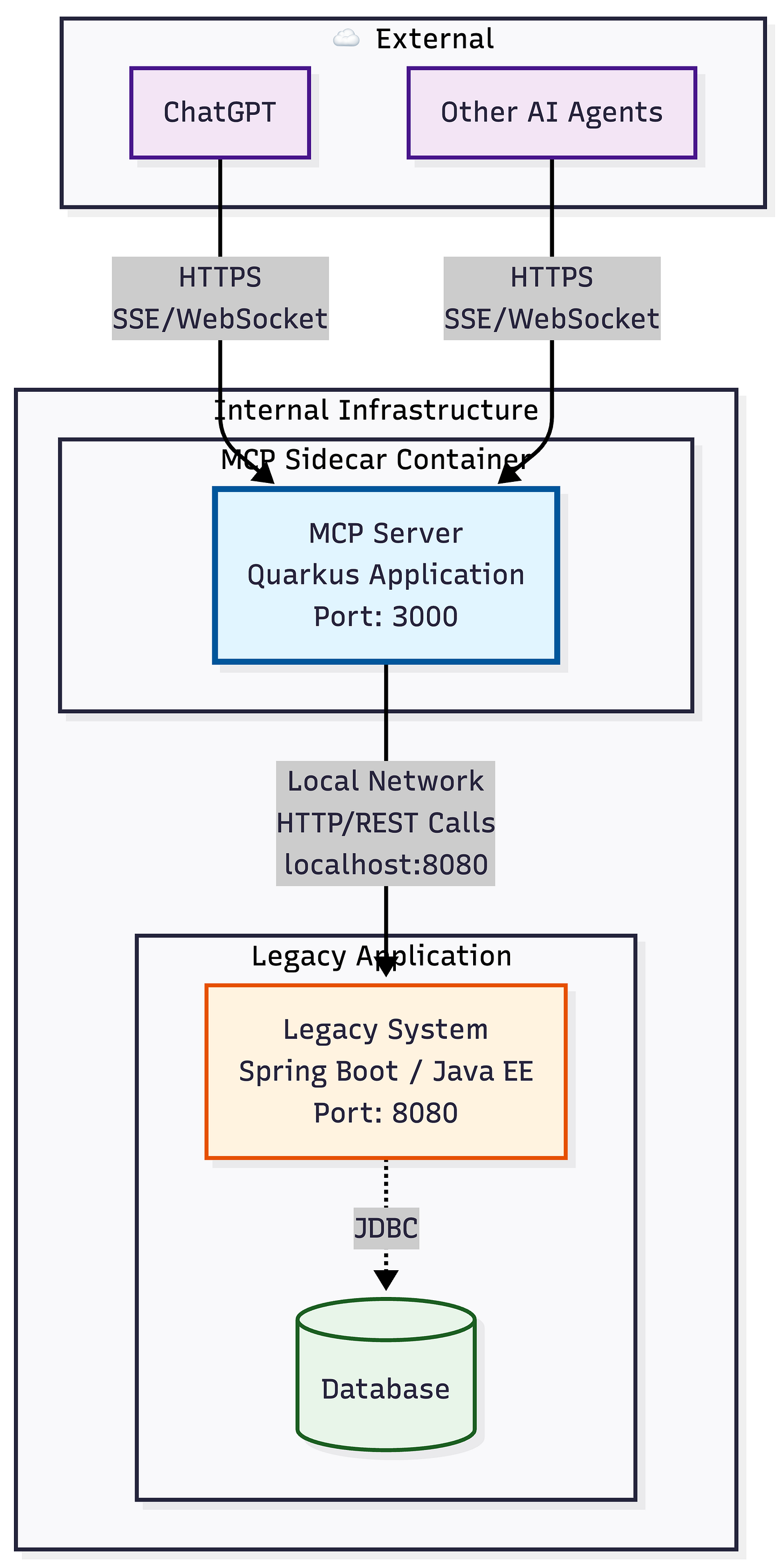

Pattern 4: The MCP Sidecar

A useful compromise is a sidecar MCP service.

The sidecar:

Hosts MCP endpoints

Calls the legacy app locally

Can be updated and scaled separately

Keeps MCP dependencies out of the legacy codebase

This pattern preserves velocity without forcing a big rewrite. For many teams, it is the most pragmatic option.

Security and Scaling Are First-Class Concerns

MCP traffic must be treated as untrusted by default.

Key principles:

Authenticate MCP clients explicitly

Rate-limit per agent

Audit every MCP tool invocation

Use mTLS or JWT between layers
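Per-agent rate limiting can be sketched as a token bucket keyed by agent ID. The class below is a hypothetical, single-node illustration with an injectable clock. A clustered gateway would need shared state or a dedicated rate-limiting component instead.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.LongSupplier;

final class PerAgentRateLimiter {
    // Mutable bucket state, guarded by synchronizing on the bucket itself.
    private static final class Bucket {
        double tokens;
        long lastRefillNanos;

        Bucket(double tokens, long lastRefillNanos) {
            this.tokens = tokens;
            this.lastRefillNanos = lastRefillNanos;
        }
    }

    private final int capacity;            // maximum burst size per agent
    private final double refillPerSecond;  // sustained rate per agent
    private final LongSupplier nanoClock;  // e.g. System::nanoTime; injectable for testing
    private final Map<String, Bucket> buckets = new ConcurrentHashMap<>();

    PerAgentRateLimiter(int capacity, double refillPerSecond, LongSupplier nanoClock) {
        this.capacity = capacity;
        this.refillPerSecond = refillPerSecond;
        this.nanoClock = nanoClock;
    }

    // Returns true if the agent may proceed, false if it has exhausted its budget.
    boolean tryAcquire(String agentId) {
        Bucket bucket = buckets.computeIfAbsent(
                agentId, id -> new Bucket(capacity, nanoClock.getAsLong()));
        synchronized (bucket) {
            long now = nanoClock.getAsLong();
            double elapsedSeconds = Math.max(0, now - bucket.lastRefillNanos) / 1_000_000_000.0;
            bucket.tokens = Math.min(capacity, bucket.tokens + elapsedSeconds * refillPerSecond);
            bucket.lastRefillNanos = now;
            if (bucket.tokens >= 1.0) {
                bucket.tokens -= 1.0;
                return true;
            }
            return false;
        }
    }
}
```

A gateway could create it with `new PerAgentRateLimiter(20, 5.0, System::nanoTime)` and reject a tool call whenever `tryAcquire(agentId)` returns false. The numbers are placeholders, not recommendations.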

From a scaling perspective:

Assume bursty, non-human traffic

Prefer stateless tools

Externalize conversation memory

Protect legacy systems with bulkheads and circuit breakers
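As one possible reading of the bulkhead principle, the sketch below caps concurrent calls into a legacy system with a `Semaphore` and fails fast when the cap is reached. `LegacyBulkhead` is a hypothetical name. In practice a fault-tolerance library would usually supply this together with circuit breaking and timeouts.

```java
import java.util.Optional;
import java.util.concurrent.Semaphore;
import java.util.function.Supplier;

final class LegacyBulkhead {
    private final Semaphore permits;

    LegacyBulkhead(int maxConcurrentCalls) {
        this.permits = new Semaphore(maxConcurrentCalls);
    }

    // Runs the call if a permit is free; otherwise returns empty immediately
    // instead of queueing more work onto an already busy legacy system.
    // Note: a call that itself returns null is also reported as empty.
    <T> Optional<T> tryCall(Supplier<T> legacyCall) {
        if (!permits.tryAcquire()) {
            return Optional.empty();
        }
        try {
            return Optional.ofNullable(legacyCall.get());
        } finally {
            permits.release();
        }
    }
}
```

An empty result gives the tool layer a clear signal to tell the AI client to back off, which is usually cheaper than letting requests pile up behind a slow backend.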

Choosing the Right Pattern

There is no universal answer. There is only architectural fit.

Gateway pattern for safety and scale

Direct exposure for speed and transactional depth

Sidecar for controlled evolution

Event-driven writes for resilience

Database reads only with discipline

Jakarta EE and modern Java runtimes make it possible to start small and evolve without rewriting everything. MCP does not force a single architecture. It forces you to think clearly about boundaries you may have ignored for years.

MCP turns legacy systems into conversational backends.

That is powerful. It is also unforgiving. Architects who succeed here will not be the ones who wire MCP endpoints fastest. They will be the ones who treat AI traffic as a new class of integration, with its own scaling, security, and failure modes.