From CRUD to Co‑Pilots: Will Java Standards Own or Miss the AI‑Infusion Race?

Some thoughts, built around a simple Quarkus-powered AI debugging assistant, on why Java's scale, standards, and reliability make it a strong platform for AI-driven development.

Enterprise Java developers have spent decades building CRUD applications, focusing on persistence, transactions, and scalable APIs. But as AI moves rapidly from research labs into daily development workflows, a new question arises: will the Java ecosystem embrace AI-powered developer experiences, or will it fall behind languages that integrate AI tools faster? Java already powers some of the largest enterprise systems on the planet, handling banking, retail, telecommunications, and government workloads where reliability and scale are non-negotiable. Python dominates data science, but it lacks Java's level of runtime performance, security, and standardization, which makes Java a stable, battle-tested foundation by comparison. If standards bodies and frameworks move decisively, Java can combine its huge enterprise footprint with modern AI capabilities to deliver tools that meet both innovation and production-grade requirements.

One way to explore this shift is by building a hands-on project that injects AI directly into the developer feedback loop. Instead of treating AI as an add-on feature for end users, we will make it part of the development experience itself.

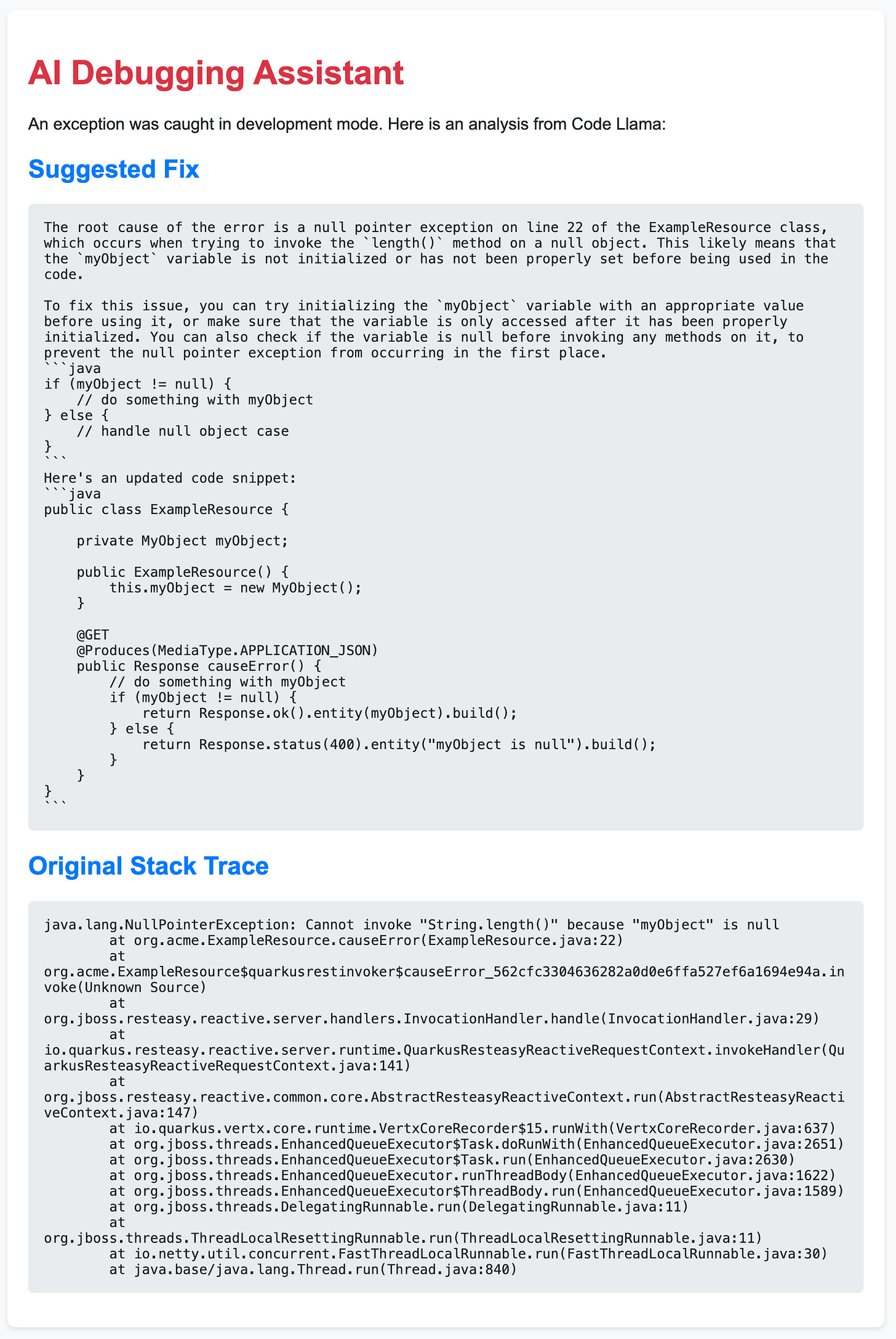

This tutorial shows how to create an AI-powered live debugging assistant using Quarkus, LangChain4j, and a locally running LLM via Ollama. When an exception occurs during development, the assistant intercepts it, analyzes the stack trace with Code Llama, and proposes a fix right inside your browser.

This simple example demonstrates how AI can help developers in ways that go beyond code completion: as an intelligent co-pilot that reduces friction and accelerates learning.

Prerequisites

You need Java 17 or newer, Apache Maven, and either a native Ollama installation or a container engine (Podman or Docker) that Quarkus can use to run a local llama.cpp-based container with the model. If you run Ollama natively, pull the Code Llama model up front to speed up the initial application start:

```shell
ollama pull codellama:7b-instruct
```
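If you use a native Ollama installation, it can help to confirm that the server is reachable and the model is present before starting Quarkus. The sketch below assumes Ollama's default address `http://localhost:11434` and queries its `/api/tags` endpoint, which lists the locally available models. The class name `OllamaModelCheck` is made up for this example, and the string match is deliberately naive to keep it dependency-free.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

public class OllamaModelCheck {

    // Assumption: Ollama listens on its default port 11434 on localhost.
    static final String TAGS_URL = "http://localhost:11434/api/tags";

    // Naive check: looks for "name":"<model>" in the JSON returned by /api/tags.
    // Whitespace is stripped first so that pretty-printed JSON also matches.
    static boolean containsModel(String tagsJson, String model) {
        String compact = tagsJson.replaceAll("\\s+", "");
        return compact.contains("\"name\":\"" + model + "\"");
    }

    public static void main(String[] args) throws InterruptedException {
        String model = args.length > 0 ? args[0] : "codellama:7b-instruct";
        HttpClient client = HttpClient.newBuilder()
                .connectTimeout(Duration.ofSeconds(5))
                .build();
        HttpRequest request = HttpRequest.newBuilder(URI.create(TAGS_URL)).GET().build();
        try {
            HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
            if (containsModel(response.body(), model)) {
                System.out.println("Model " + model + " is available locally.");
            } else {
                System.out.println("Model " + model + " not found; run: ollama pull " + model);
            }
        } catch (IOException e) {
            // Connection refused and similar errors mean no Ollama server on that address
            System.out.println("Ollama does not seem to be running on " + TAGS_URL);
        }
    }
}
```

With Java 17 you can run it directly from source with `java OllamaModelCheck.java`. If you rely on a container engine instead, Quarkus manages the model for you and this check is not needed.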

Create the Quarkus Project

Generate a Quarkus project with Quarkus REST (formerly RESTEasy Reactive) and Jackson, Qute templating, and LangChain4j Ollama support:

```shell
mvn io.quarkus.platform:quarkus-maven-plugin:create \
    -DprojectGroupId=org.acme \
    -DprojectArtifactId=quarkus-ai-debugger \
    -DclassName="org.acme.ExampleResource" \
    -Dpath="/hello" \
    -Dextensions="rest-jackson,qute,quarkus-langchain4j-ollama"

cd quarkus-ai-debugger
```

Configure the Application

Edit src/main/resources/application.properties and add:

```properties
quarkus.langchain4j.ollama.chat-model.model-id=codellama:7b-instruct
quarkus.langchain4j.ollama.timeout=120s
```

The first property points LangChain4j at the Code Llama model; the second raises the request timeout so that slower machines have enough time to generate an answer.

Create the AI Service for Error Analysis

Create src/main/java/org/acme/AiErrorAnalyzer.java:

```java
package org.acme;

import dev.langchain4j.service.SystemMessage;
import dev.langchain4j.service.UserMessage;
import io.quarkiverse.langchain4j.RegisterAiService;

@RegisterAiService
public interface AiErrorAnalyzer {

    @SystemMessage("""
            You are an expert Java and Quarkus developer. Your task is to analyze a Java stack trace and provide a concise, actionable solution.
            1. Identify the root cause of the error.
            2. Provide a clear explanation of why the error occurred.
            3. Offer a corrected code snippet.
            4. Respond only with the explanation and code.
            """)
    @UserMessage("""
            Analyze the following exception and provide a fix.

            Exception:
            ---
            {stackTrace}
            ---
            """)
    String analyze(String stackTrace);
}
```

The Quarkus LangChain4j extension generates the implementation of this interface at build time and fills the `{stackTrace}` placeholder with the method parameter of the same name. The model therefore always receives the same structured prompt and is instructed to reply with an explanation and a suggested code fix.
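Framework stack traces can run to hundreds of lines, while a local 7B model works with a limited context window. One refinement you might consider is trimming the trace before it reaches `analyze(...)`. The following is a hypothetical helper (`StackTraceTrimmer` and `trim` are invented for this sketch, not part of Quarkus or LangChain4j): it keeps the first lines of the trace plus every `Caused by:` line, because the root cause often sits near the bottom.

```java
import java.util.ArrayList;
import java.util.List;

public final class StackTraceTrimmer {

    private StackTraceTrimmer() {
    }

    // Hypothetical helper: keeps the first maxLines lines of a stack trace,
    // replaces the rest with a marker, and re-appends any "Caused by:" lines
    // so the root-cause exception types survive the trimming.
    static String trim(String stackTrace, int maxLines) {
        List<String> lines = stackTrace.lines().toList();
        if (lines.size() <= maxLines) {
            return stackTrace;
        }
        List<String> rest = lines.subList(maxLines, lines.size());
        List<String> causes = rest.stream()
                .filter(line -> line.startsWith("Caused by:"))
                .toList();
        int omitted = rest.size() - causes.size();

        List<String> result = new ArrayList<>(lines.subList(0, maxLines));
        result.add("\t... " + omitted + " lines omitted");
        result.addAll(causes);
        return String.join("\n", result);
    }

    public static void main(String[] args) {
        String trace = String.join("\n",
                "java.lang.RuntimeException: outer",
                "\tat org.acme.A.a(A.java:1)",
                "\tat org.acme.B.b(B.java:2)",
                "Caused by: java.lang.IllegalStateException: inner",
                "\tat org.acme.C.c(C.java:3)");
        System.out.println(trim(trace, 2));
    }
}
```

In the exception mapper from the next section, you could then call `analyzer.analyze(StackTraceTrimmer.trim(stackTrace, 40))`. The limit of 40 lines is an arbitrary starting point to tune against your model.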

Create the Qute Template and Filter

First, create a Qute template at src/main/resources/templates/errorAnalysis.html:

```html
<!DOCTYPE html>
<html>
<head>
    <meta charset="UTF-8">
    <title>Development Error Assistant</title>
    <style>
        /* omitted for brevity */
    </style>
</head>
<body>
    <div class="container">
        <h1>🤖 AI Debugging Assistant</h1>
        <p>An exception was caught in development mode. Here is an analysis from Code Llama:</p>
        <h2>Suggested Fix</h2>
        <pre>{analysis}</pre>
        <h2>Original Stack Trace</h2>
        <pre>{stackTrace}</pre>
    </div>
</body>
</html>
```

Now create src/main/java/org/acme/DevelopmentExceptionFilter.java:

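```java
package org.acme;

import java.io.PrintWriter;
import java.io.StringWriter;

import io.quarkus.qute.Template;
import io.quarkus.runtime.LaunchMode;
import jakarta.enterprise.context.ApplicationScoped;
import jakarta.inject.Inject;
import jakarta.ws.rs.WebApplicationException;
import jakarta.ws.rs.core.MediaType;
import jakarta.ws.rs.core.Response;
import jakarta.ws.rs.ext.ExceptionMapper;
import jakarta.ws.rs.ext.Provider;
import org.jboss.logging.Logger;

@Provider
@ApplicationScoped
public class DevelopmentExceptionFilter implements ExceptionMapper<Exception> {

    private static final Logger LOG = Logger.getLogger(DevelopmentExceptionFilter.class);

    @Inject
    AiErrorAnalyzer analyzer;

    // Injected by field name: resolves to src/main/resources/templates/errorAnalysis.html
    @Inject
    Template errorAnalysis;

    @Override
    public Response toResponse(Exception exception) {
        // Keep the original status of regular HTTP errors such as 404 Not Found
        if (exception instanceof WebApplicationException wae) {
            return wae.getResponse();
        }

        if (LaunchMode.current() != LaunchMode.DEVELOPMENT) {
            // Outside dev mode, return a generic error response and never call the model
            return Response.status(Response.Status.INTERNAL_SERVER_ERROR)
                    .entity("Internal Server Error")
                    .type(MediaType.TEXT_PLAIN)
                    .build();
        }

        // Capture the full stack trace as a String
        StringWriter sw = new StringWriter();
        exception.printStackTrace(new PrintWriter(sw));
        String stackTrace = sw.toString();

        try {
            // Blocking call to the local model, inside the try block so that an
            // unreachable Ollama server or a timeout does not escape the mapper
            String analysis = analyzer.analyze(stackTrace);
            String html = errorAnalysis.data("analysis", analysis)
                    .data("stackTrace", stackTrace)
                    .render();
            return Response.status(Response.Status.INTERNAL_SERVER_ERROR)
                    .entity(html)
                    .type("text/html; charset=UTF-8")
                    .build();
        } catch (Exception e) {
            LOG.error("AI error analysis failed", e);
            return Response.status(Response.Status.INTERNAL_SERVER_ERROR)
                    .entity("Error processing exception: " + e.getMessage())
                    .type(MediaType.TEXT_PLAIN)
                    .build();
        }
    }
}
```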

Using an ExceptionMapper instead of a servlet @WebFilter fits naturally with JAX-RS and Quarkus. In Jakarta EE, servlet filters intercept requests and responses at a lower level, while exception mappers are a JAX-RS concept: they handle exceptions thrown during REST processing and map them to HTTP responses. Quarkus REST does not run on the Servlet API by default, so a @WebFilter would also require the Undertow extension, whereas an exception mapper works with both blocking and reactive endpoints without any servlet plumbing. This makes the design more idiomatic for modern Quarkus applications.
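The JAX-RS specification requires that, when several exception mappers could handle an exception, the one whose generic type is the nearest superclass of the thrown exception wins. That is why the catch-all `ExceptionMapper<Exception>` above only runs when no more specific mapper applies. The plain-Java sketch below imitates that lookup rule for illustration; it is not how Quarkus implements it internally, and `HypotheticalBadInputMapper` is an invented mapper name.

```java
import java.util.Map;
import java.util.Optional;

public class NearestMapperDemo {

    // Imitates the JAX-RS selection rule: starting at the thrown exception's class,
    // walk up the superclass chain and return the first registered mapper.
    static Optional<String> selectMapper(Class<?> thrown, Map<Class<?>, String> mappers) {
        for (Class<?> type = thrown; type != null; type = type.getSuperclass()) {
            String mapper = mappers.get(type);
            if (mapper != null) {
                return Optional.of(mapper);
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        Map<Class<?>, String> mappers = Map.of(
                Exception.class, "DevelopmentExceptionFilter",
                IllegalArgumentException.class, "HypotheticalBadInputMapper");

        // NullPointerException -> RuntimeException -> Exception
        System.out.println(selectMapper(NullPointerException.class, mappers).orElse("none"));
        // NumberFormatException extends IllegalArgumentException, the nearer match
        System.out.println(selectMapper(NumberFormatException.class, mappers).orElse("none"));
        // StackOverflowError is an Error, not an Exception, so no mapper matches
        System.out.println(selectMapper(StackOverflowError.class, mappers).orElse("none"));
    }
}
```

The first lookup resolves to the AI-backed mapper, the second to the more specific one, and the third to none.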

Add a Faulty Endpoint for Testing

Replace the contents of src/main/java/org/acme/ExampleResource.java:

```java
package org.acme;

import jakarta.ws.rs.GET;
import jakarta.ws.rs.Path;
import jakarta.ws.rs.Produces;
import jakarta.ws.rs.core.MediaType;

@Path("/hello")
public class ExampleResource {

    @GET
    @Produces(MediaType.TEXT_PLAIN)
    public String hello() {
        return "Hello, this endpoint works!";
    }

    @GET
    @Path("/error")
    @Produces(MediaType.TEXT_PLAIN)
    public String causeError() {
        String myObject = null;
        myObject.length(); // Intentional NullPointerException
        return "You will never see this message.";
    }
}
```

Run and Test

Start Quarkus in dev mode:

```shell
./mvnw quarkus:dev
```

Navigate to http://localhost:8080/hello/error.

Because the exception mapper waits for the model before rendering, the request takes a few seconds to complete. You then see a custom error page rendered with Qute, containing Code Llama's explanation of the NullPointerException and a possible fix, followed by the original stack trace. Admittedly, this particular demo is trivial, but it is meant to bring you closer to the ideas and opportunities.
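To see roughly what the model receives, you can reproduce the capture outside Quarkus. This standalone sketch (`StackTraceCaptureDemo` is an invented class name) mirrors the faulty endpoint and uses the same `StringWriter`/`PrintWriter` technique as the exception mapper. On Java 17, the helpful NullPointerException messages introduced by JEP 358 also name the failed call, `String.length()`, in the first line of the trace, which gives the model a strong hint.

```java
import java.io.PrintWriter;
import java.io.StringWriter;

public class StackTraceCaptureDemo {

    // Same capture technique as DevelopmentExceptionFilter: print the trace into a String
    static String capture(Throwable t) {
        StringWriter sw = new StringWriter();
        t.printStackTrace(new PrintWriter(sw));
        return sw.toString();
    }

    // Mirrors ExampleResource.causeError() without any Quarkus dependencies
    static String causeError() {
        String myObject = null;
        myObject.length(); // Intentional NullPointerException
        return "You will never see this message.";
    }

    public static void main(String[] args) {
        try {
            causeError();
        } catch (NullPointerException e) {
            // This text is what would be sent to analyze(...)
            System.out.println(capture(e));
        }
    }
}
```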

This project is a small example of how AI can become a co-pilot in the Java development workflow. If Java standards embrace such integrations, they can define how AI-assisted development evolves. If not, other ecosystems will move faster and set the rules. The opportunity is there, and projects like Quarkus and LangChain4j show that Java is far from out of the race.