Supercharging Java AI: Building MCP Servers and Clients with Quarkus, LangChain4j, and Ollama

Learn how to connect local LLMs to real-world tools using the Model Context Protocol, with a full hands-on Quarkus tutorial for Java developers.

Modern AI applications often need to connect to external systems: fetch live data, apply business rules, or call services outside the model itself. Doing this reliably has been messy. Every framework had its own way of plugging in “tools” for models. The Model Context Protocol (MCP) changes that.

This tutorial shows how to implement both sides of MCP with Quarkus:

An MCP server that exposes two tools, `getTemperature` and `findCityCoordinates`.

An AI client built with LangChain4j and Ollama that consumes the tools and lets the model decide when to call them.

By the end, you’ll have a working setup where a local LLM can answer weather questions by calling your MCP server. Along the way, we’ll cover the major MCP features that turn a simple demo into a production-ready integration point.

What is MCP and why does it matter?

The Model Context Protocol is an open standard that defines how AI apps can discover and call tools, fetch resources, reuse prompts, and exchange messages securely.

Key points about MCP:

Standardized: any MCP-compliant client can talk to any server.

Transport-agnostic: works over STDIO, HTTP/SSE, or Streamable HTTP.

Typed and secure: defines argument schemas, authentication hooks, and event logging.

Think of MCP as the USB-C for AI tools: once supported, servers and clients just work together without custom adapters.
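To make the “standardized” point concrete: MCP messages are JSON-RPC 2.0. The JDK-only sketch below builds, by hand, roughly the `tools/call` request a client sends to invoke the `getTemperature` tool from this tutorial. The class name `ToolCallRequest` and the hand-written JSON are illustrative assumptions. In practice the MCP client (for example LangChain4j) produces these messages for you.

```java
/** Hypothetical helper: builds an MCP "tools/call" JSON-RPC 2.0 request by hand. */
final class ToolCallRequest {

    private ToolCallRequest() {}

    /** Request asking an MCP server to run getTemperature with the given coordinates. */
    static String getTemperature(int id, double latitude, double longitude) {
        // Double.toString always uses '.' as the decimal separator, as JSON requires.
        return """
                {"jsonrpc":"2.0","id":%d,"method":"tools/call",\
                "params":{"name":"getTemperature",\
                "arguments":{"latitude":%s,"longitude":%s}}}"""
                .formatted(id, Double.toString(latitude), Double.toString(longitude));
    }

    public static void main(String[] args) {
        System.out.println(getTemperature(1, 52.52, 13.41));
    }
}
```

The `id` lets the client match the server’s response to this request. The `arguments` object must satisfy the input schema the server advertised for the tool.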

In Quarkus, you get:

The MCP Server extension to expose tools, resources, prompts, notifications, and more.

The LangChain4j MCP client to let your AI services consume tools declaratively with `@McpToolBox`.

The Ollama extension for running local models with tool-calling support.

We’ll now walk through building a server, a client, and finally explore advanced MCP features.

Architecture overview

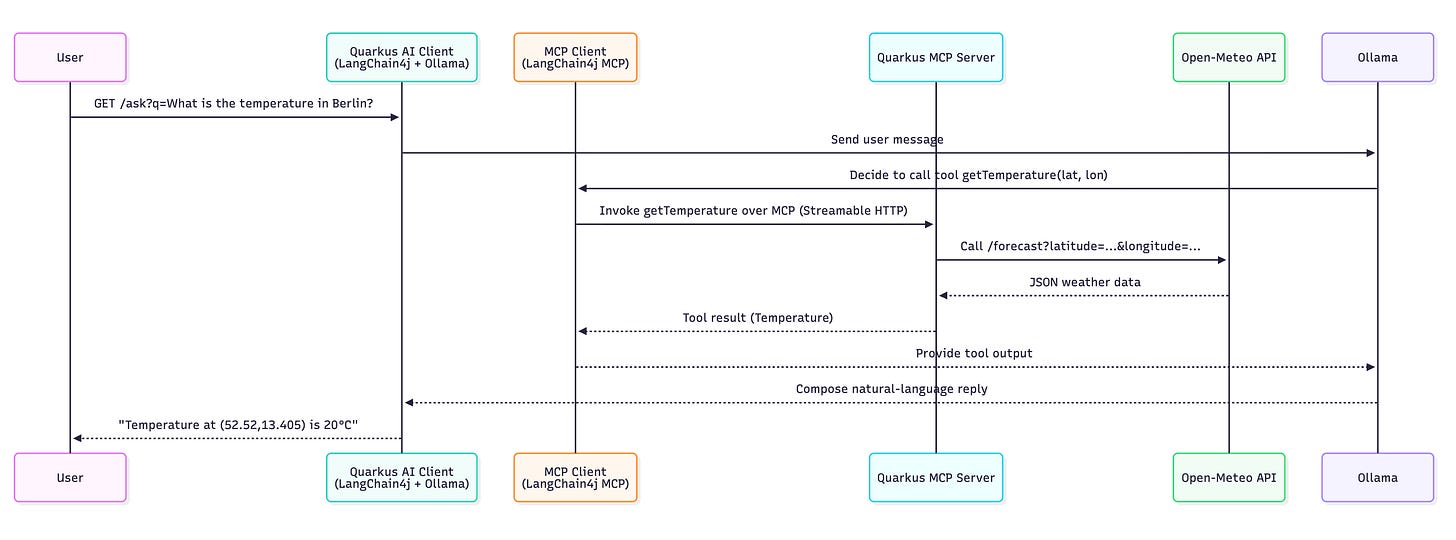

Before we dive into code, let’s visualize the flow. The diagram below shows how the components interact when you ask the client about the temperature in Berlin:

This flow makes clear where MCP fits in:

LangChain4j detects that the model wants to use a tool.

The MCP client calls the server using a standard protocol.

The server implements the tool against a real API.

The model receives the tool output and integrates it into its response.

By keeping the interaction standards-based, you can swap tools, servers, or even LLMs without rewriting glue code.
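The four steps above can be sketched in plain Java. This is a hypothetical, dependency-free simulation and not LangChain4j’s API. `scriptedModel` stands in for the LLM, and the two lambdas stand in for the MCP tools and return canned results. The sketch only shows the control flow that LangChain4j runs for you.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

/** Dependency-free simulation of the tool-calling loop; all names are hypothetical. */
final class ToolLoopSketch {

    /** Each model turn yields either a tool request or a final answer. */
    sealed interface ModelReply permits ToolRequest, FinalAnswer {}

    record ToolRequest(String tool, Map<String, String> args) implements ModelReply {}

    record FinalAnswer(String text) implements ModelReply {}

    /** Stand-in for the LLM: picks its next move from how many tool results it has seen. */
    static ModelReply scriptedModel(List<String> toolResults) {
        return switch (toolResults.size()) {
            case 0 -> new ToolRequest("findCityCoordinates", Map.of("cityName", "Berlin"));
            // A real model would read the coordinates out of toolResults.get(0)
            case 1 -> new ToolRequest("getTemperature",
                    Map.of("latitude", "52.52", "longitude", "13.41"));
            default -> new FinalAnswer("Berlin is currently " + toolResults.get(1) + ".");
        };
    }

    static String run(Map<String, Function<Map<String, String>, String>> tools) {
        List<String> toolResults = new ArrayList<>();
        for (int turn = 0; turn < 5; turn++) { // guard against a model that never stops calling tools
            ModelReply reply = scriptedModel(toolResults);
            if (reply instanceof FinalAnswer answer) {
                return answer.text(); // step 4: the answer integrates the tool output
            }
            ToolRequest call = (ToolRequest) reply; // step 1: the model asked for a tool
            // steps 2-3: in the real setup the MCP client sends this call to the server
            toolResults.add(tools.get(call.tool()).apply(call.args()));
        }
        throw new IllegalStateException("No final answer within the turn limit");
    }

    public static void main(String[] args) {
        Map<String, Function<Map<String, String>, String>> tools = Map.of(
                "findCityCoordinates", a -> "52.52, 13.41",
                "getTemperature", a -> "14.4 degrees Celsius");
        System.out.println(run(tools));
    }
}
```

The turn limit matters in real agents too. A small model can get stuck requesting tools, and a cap turns that into a clear error instead of an endless loop.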

Prerequisites

JDK 17+

Maven 3.9+

Ollama installed, with a small model that supports tool calling already pulled, e.g. qwen3:1.7b:

```shell
ollama pull qwen3:1.7b
```

Quarkus Dev Services can also start Ollama and pull models for you, but pre-pulling is faster the first time.

Optional: MCP Inspector to test servers:

```shell
npx @modelcontextprotocol/inspector
```

Connect with transport “Streamable HTTP” to http://localhost:8080/mcp. If your server runs on a different port, adjust the URL accordingly.

And as usual, you can find the complete project on my GitHub repository.

Project bootstrap

We’ll create two Quarkus modules:

```shell
# Create a parent folder
mkdir quarkus-mcp
cd quarkus-mcp

# 2.1 MCP Weather Server (Quarkus MCP Server)
quarkus create app com.acme:mcp-weather-server
cd mcp-weather-server
quarkus ext add quarkus-mcp-server-sse,rest-client-jackson
cd ..

# 2.2 AI Client (Quarkus + LangChain4j + Ollama + MCP client)
quarkus create app com.acme:ai-mcp-client
cd ai-mcp-client
quarkus ext add quarkus-langchain4j-ollama \
    quarkus-langchain4j-mcp \
    quarkus-rest-jackson
```

Notes:

Use `quarkus-mcp-server-sse` to expose the Streamable HTTP and HTTP/SSE transports. If you want STDIO (e.g., for Claude Desktop), swap in `quarkus-mcp-server-stdio`.

`quarkus-langchain4j-mcp` brings in the MCP client for AI services.

`quarkus-langchain4j-ollama` integrates a local LLM with tool-calling support.

Make sure to pin Quarkus and extension versions via the generated BOM in each project.

Build the MCP Server (Quarkus)

Let’s start with the server. We’ll expose two tools, getTemperature(latitude, longitude) and findCityCoordinates(cityName), backed by the Open-Meteo forecast and geocoding APIs. Alongside tool calls, we’ll also log client connections.

Adjust the server configuration

Add the following to the application.properties of the server project:

```properties
# Streamable HTTP root
quarkus.mcp.server.sse.root-path=mcp

# Open-Meteo base URL
quarkus.rest-client.open-meteo.url=https://api.open-meteo.com
quarkus.rest-client.open-meteo.scope=jakarta.inject.Singleton

# Open-Meteo Geocoding API base URL
quarkus.rest-client.geocoding.url=https://geocoding-api.open-meteo.com
quarkus.rest-client.geocoding.scope=jakarta.inject.Singleton
```

Weather tool implementation

```java
package com.acme.weather;

import java.time.LocalDateTime;
import java.util.Locale;

import org.eclipse.microprofile.rest.client.inject.RestClient;

// MCP server APIs
import io.quarkiverse.mcp.server.McpLog;
import io.quarkiverse.mcp.server.Tool;
import io.quarkiverse.mcp.server.ToolArg;
import io.smallrye.common.annotation.RunOnVirtualThread;
import jakarta.inject.Inject;
import jakarta.inject.Singleton;

@Singleton
public class WeatherTools {

    @Inject
    @RestClient
    WeatherClient client;

    @Inject
    @RestClient
    GeocodingClient geocodingClient;

    @Tool(name = "getTemperature", description = "Get temperature in Celsius for a coordinate pair")
    @RunOnVirtualThread // the REST client call blocks, so keep it off the event loop
    public String getTemperature(
            @ToolArg(description = "Latitude") double latitude,
            @ToolArg(description = "Longitude") double longitude,
            McpLog log) {
        // McpLog sends the message to the connected MCP client
        log.info("Invoking getTemperature lat=%s lon=%s", latitude, longitude);
        WeatherResponse resp = client.forecast(latitude, longitude, "temperature_2m");
        return "Temperature at (%s,%s): %s°C"
                .formatted(latitude, longitude, resp.current().temperature_2m());
    }

    @Tool(name = "findCityCoordinates", description = "Find latitude and longitude coordinates for a city name")
    @RunOnVirtualThread
    public String findCityCoordinates(
            @ToolArg(description = "City name to search for") String cityName,
            McpLog log) {
        log.info("Searching for coordinates of city: %s", cityName);
        GeocodingResponse response = geocodingClient.search(cityName, 10, "en", "json");

        // The geocoding API may omit "results" when nothing matches, so check for null too
        GeocodingResponse.GeocodingResult[] results = response.results();
        if (results == null || results.length == 0) {
            return "No cities found with name: " + cityName;
        }

        StringBuilder result = new StringBuilder();
        result.append("Found ").append(results.length)
                .append(" cities matching '").append(cityName).append("':\n\n");
        // List at most five matches to keep the tool output compact
        for (int i = 0; i < Math.min(results.length, 5); i++) {
            GeocodingResponse.GeocodingResult city = results[i];
            result.append(String.format("%d. %s, %s\n", i + 1, city.name(), city.country()));
            // Locale.ROOT keeps '.' as the decimal separator regardless of the JVM locale
            result.append(String.format(Locale.ROOT, "   Coordinates: %.4f, %.4f\n",
                    city.latitude(), city.longitude()));
            result.append(String.format("   Population: %d\n", city.population()));
            result.append(String.format("   Timezone: %s\n\n", city.timezone()));
        }
        return result.toString();
    }

    // Mirrors the JSON returned by /v1/forecast?current=temperature_2m
    public record WeatherResponse(Current current) {
        public record Current(LocalDateTime time, int interval, double temperature_2m) {
        }
    }

    // Mirrors the JSON returned by the geocoding /v1/search endpoint
    public record GeocodingResponse(GeocodingResult[] results, double generationtime_ms) {
        public record GeocodingResult(int id, String name, double latitude, double longitude,
                double elevation, String feature_code, String country_code, int admin1_id,
                int admin3_id, int admin4_id, String timezone, int population, String[] postcodes,
                int country_id, String country, String admin1, String admin3, String admin4) {
        }
    }
}
```

MCP Weather Server - Exposes weather-related tools to MCP clients over the MCP transports (Streamable HTTP and HTTP/SSE), backed by the Open-Meteo REST APIs

Temperature Lookup - Offers a getTemperature tool that fetches current temperature data for specific latitude/longitude coordinates using the Open-Meteo weather API

City Geocoding - Provides a findCityCoordinates tool that searches for cities by name and returns their coordinates, population, timezone, and other details using the Open-Meteo geocoding API

Logging - Uses the injected McpLog to send a log message to the connected MCP client for each tool invocation

The class essentially acts as a bridge between MCP clients and weather/geocoding services, exposing two main capabilities: weather data retrieval and city coordinate lookup.

Weather REST client implementation

```java
package com.acme.weather;

import org.eclipse.microprofile.rest.client.inject.RegisterRestClient;

import jakarta.ws.rs.DefaultValue;
import jakarta.ws.rs.GET;
import jakarta.ws.rs.Path;
import jakarta.ws.rs.Produces;
import jakarta.ws.rs.QueryParam;
import jakarta.ws.rs.core.MediaType;

// Base URL comes from quarkus.rest-client.open-meteo.url
@RegisterRestClient(configKey = "open-meteo")
@Path("/v1")
@Produces(MediaType.APPLICATION_JSON)
public interface WeatherClient {

    // GET /v1/forecast?latitude=...&longitude=...&current=temperature_2m
    @GET
    @Path("/forecast")
    WeatherTools.WeatherResponse forecast(@QueryParam("latitude") double lat,
            @QueryParam("longitude") double lon,
            @QueryParam("current") @DefaultValue("temperature_2m") String current);
}
```

Geocoding REST Client

```java
package com.acme.weather;

import org.eclipse.microprofile.rest.client.inject.RegisterRestClient;

import jakarta.ws.rs.DefaultValue;
import jakarta.ws.rs.GET;
import jakarta.ws.rs.Path;
import jakarta.ws.rs.Produces;
import jakarta.ws.rs.QueryParam;
import jakarta.ws.rs.core.MediaType;

// Base URL comes from quarkus.rest-client.geocoding.url
@RegisterRestClient(configKey = "geocoding")
@Path("/v1")
@Produces(MediaType.APPLICATION_JSON)
public interface GeocodingClient {

    // GET /v1/search?name=...&count=10&language=en&format=json
    @GET
    @Path("/search")
    WeatherTools.GeocodingResponse search(@QueryParam("name") String name,
            @QueryParam("count") @DefaultValue("10") int count,
            @QueryParam("language") @DefaultValue("en") String language,
            @QueryParam("format") @DefaultValue("json") String format);
}
```
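To see what the two REST clients send, the following JDK-only sketch builds approximately the same request URIs by hand. It assumes the base URLs from `application.properties` and the query parameters declared above. The class `OpenMeteoUris` is hypothetical and only meant for experimenting, for example by opening the printed URIs in a browser to inspect the raw JSON that the records map.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

/** Hypothetical helper that mirrors the URIs built by WeatherClient and GeocodingClient. */
final class OpenMeteoUris {

    static final String FORECAST_BASE = "https://api.open-meteo.com/v1/forecast";
    static final String GEOCODING_BASE = "https://geocoding-api.open-meteo.com/v1/search";

    /** Same query parameters as WeatherClient.forecast(lat, lon, "temperature_2m"). */
    static URI forecast(double lat, double lon) {
        return URI.create(FORECAST_BASE + "?latitude=" + lat + "&longitude=" + lon
                + "&current=temperature_2m");
    }

    /** Same query parameters as GeocodingClient.search(name, 10, "en", "json"). */
    static URI search(String name) {
        // URLEncoder turns spaces into '+'; swap them for %20, the usual form in URLs
        String encoded = URLEncoder.encode(name, StandardCharsets.UTF_8).replace("+", "%20");
        return URI.create(GEOCODING_BASE + "?name=" + encoded + "&count=10&language=en&format=json");
    }

    public static void main(String[] args) {
        System.out.println(forecast(52.52, 13.41));
        System.out.println(search("Markt Schwaben"));
    }
}
```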

Connection Notifications

Sometimes you want to know when a new MCP client connects. Quarkus MCP Server can fire connection lifecycle notifications that you can observe with CDI. This is useful for auditing, logging, or even initializing per-client resources.

```java
package com.acme.weather;

import io.quarkiverse.mcp.server.McpConnection;
import io.quarkiverse.mcp.server.Notification;
import io.quarkiverse.mcp.server.Notification.Type;
import io.quarkus.logging.Log;

public class ConnectionNotifications {

    // Called once a client has completed the MCP initialization handshake
    @Notification(Type.INITIALIZED)
    void init(McpConnection connection) {
        Log.infof("New client connected: %s", connection.initialRequest().implementation().name());
    }
}
```

When a client initializes (for example via MCP Inspector or a LangChain4j MCP client), the server will log its name. This gives you immediate visibility into who is connecting to your MCP server, a small but powerful step toward observability and auditability.
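If you want to go beyond logging and keep per-client state, one option is a small registry keyed by connection. The sketch below is plain Java and hypothetical: `ClientRegistry` is not part of Quarkus MCP Server. It assumes you have some string identifier per connection. In a real server you would call `register` from the `@Notification(Type.INITIALIZED)` method, using the client name shown above, and remove the entry when the connection closes.

```java
import java.time.Instant;
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;

/** Hypothetical per-client registry; not part of the Quarkus MCP Server API. */
final class ClientRegistry {

    record ClientInfo(String clientName, Instant connectedAt) {}

    // Clients may initialize concurrently, so use a thread-safe map.
    private final Map<String, ClientInfo> clients = new ConcurrentHashMap<>();

    /** Call when a client finishes initialization. */
    void register(String connectionId, String clientName) {
        clients.put(connectionId, new ClientInfo(clientName, Instant.now()));
    }

    /** Call when a connection closes, so entries do not accumulate forever. */
    void unregister(String connectionId) {
        clients.remove(connectionId);
    }

    Optional<ClientInfo> lookup(String connectionId) {
        return Optional.ofNullable(clients.get(connectionId));
    }

    int size() {
        return clients.size();
    }
}
```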

Run the server

```shell
# Dev mode
quarkus dev

# Or build a runnable jar
mvn -DskipTests package
java -jar target/quarkus-app/quarkus-run.jar
```

Verify with MCP Inspector: connect to http://localhost:8080/mcp, list tools, and run getTemperature. You should see the tool result and the log messages sent through McpLog in the inspector UI.

Configuring the MCP Server in your IDE

Many IDEs and MCP-aware tools let you connect directly to MCP servers by adding them to a configuration file. If you want your Quarkus MCP server to be available inside an IDE such as VS Code, you can point it at the HTTP/SSE endpoint your server exposes. The file name and exact schema differ between hosts, so check your host’s documentation. The example below shows the general idea.

For our weather server running on port 8080, the config looks like this:

```json
{
  "mcpServers": {
    "weather-tool": {
      "transport": {
        "type": "sse",
        "url": "http://localhost:8080/mcp/sse"
      },
      "description": "Weather Tool MCP Server via Server-Sent Events"
    }
  }
}
```

`type: "sse"` tells the IDE to connect using the Server-Sent Events transport.

`url` must point to your Quarkus MCP server’s `/mcp/sse` endpoint.

`description` is optional but helps you identify the server in the IDE UI.

Once configured, the IDE can list tools, invoke them, and integrate results into your workflow. This makes the Quarkus MCP server feel like a first-class plugin in your development environment without custom adapters.

Build the AI Client (Quarkus + LangChain4j + Ollama + MCP)

We’ll connect to the weather MCP server, expose a simple REST endpoint, and let the LLM decide when to call the tool.

Adjust the client configuration

Configure Ollama, the MCP client, timeouts, and the HTTP port in the application.properties file. Both applications default to port 8080, so the client moves to 8081:

```properties
# --- HTTP port (the MCP server keeps the default 8080) ---
quarkus.http.port=8081

# --- Ollama chat model ---
quarkus.langchain4j.ollama.chat-model.model-id=qwen3:1.7b
quarkus.langchain4j.ollama.chat-model.temperature=0
quarkus.langchain4j.timeout=60s

# --- MCP client for our weather server over Streamable HTTP ---
quarkus.langchain4j.mcp.weather.transport-type=streamable-http
quarkus.langchain4j.mcp.weather.url=http://localhost:8080/mcp

# Optional logs and tool timeouts
quarkus.langchain4j.mcp.weather.log-requests=true
quarkus.langchain4j.mcp.weather.log-responses=true
quarkus.langchain4j.mcp.weather.tool-execution-timeout=30s
```

The LangChain4j MCP client in Quarkus is designed for AI services: it connects to MCP servers, discovers tools, and exposes them to LLMs through @McpToolBox. It hides protocol details so developers can focus on prompt design and AI workflows. At the lowest level, the MCP Java SDK is transport-agnostic and framework-neutral; it’s ideal if you need maximum portability or want to embed MCP support outside Quarkus.

AI service with MCP toolbox

```java
package com.acme.client;

import dev.langchain4j.service.SystemMessage;
import dev.langchain4j.service.UserMessage;
import io.quarkiverse.langchain4j.RegisterAiService;
import io.quarkiverse.langchain4j.mcp.runtime.McpToolBox;

@RegisterAiService
public interface WeatherAssistant {

    @SystemMessage("""
            You are a precise travel assistant that helps users get weather information.
            When a user asks about weather for a location, follow this two-step process:
            1. First, use the findCityCoordinates tool to find the latitude and longitude coordinates for the location
            2. Then, use the getTemperature tool with those coordinates to get the current weather data
            Always use both tools in sequence - never try to get weather data without first obtaining the coordinates.
            Be helpful and provide clear, accurate weather information based on the coordinates.
            """)
    @McpToolBox("weather") // bind to MCP client named 'weather' from properties
    String chat(@UserMessage String user);
}
```

Expose a REST endpoint:

```java
package com.acme.client;

import jakarta.inject.Inject;
import jakarta.ws.rs.GET;
import jakarta.ws.rs.Path;
import jakarta.ws.rs.Produces;
import jakarta.ws.rs.QueryParam;
import jakarta.ws.rs.core.MediaType;

@Path("/ask")
@Produces(MediaType.TEXT_PLAIN)
public class AskResource {

    @Inject
    WeatherAssistant assistant;

    @GET
    public String ask(@QueryParam("q") String q) {
        // Fall back to a default question when no ?q= parameter is given
        return assistant.chat(q == null ? "What is the temperature in Berlin?" : q);
    }
}
```

Run the client

```shell
# Terminal 1: run the server
cd ../mcp-weather-server
quarkus dev

# Terminal 2: run the client
cd ../ai-mcp-client
quarkus dev
```

Test the endpoint on the client (port 8081):

```shell
curl 'http://localhost:8081/ask?q=What%20is%20the%20temperature%20in%20Berlin%3F'
```

You should see a natural-language reply that includes the value from the MCP getTemperature tool. The LLM decides when to call the tools.
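If you would rather call the endpoint from Java than from curl, the JDK’s `HttpClient` is enough. The sketch below is an illustrative example and not part of the project. It URL-encodes the question the same way as the curl command. Because qwen3 models may prepend their reasoning in a `<think>...</think>` block, it also strips that block before printing. The class name and the regex-based stripping are assumptions of this sketch.

```java
import java.io.IOException;
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.regex.Pattern;

/** Hypothetical command-line client for the /ask endpoint. */
final class AskClient {

    // DOTALL lets '.' match newlines inside the reasoning block; '?' keeps the match minimal
    private static final Pattern THINK = Pattern.compile("<think>.*?</think>\\s*", Pattern.DOTALL);

    static URI askUri(String question) {
        // Encode spaces as %20 (not '+') to match the curl example
        String q = URLEncoder.encode(question, StandardCharsets.UTF_8).replace("+", "%20");
        return URI.create("http://localhost:8081/ask?q=" + q);
    }

    /** Removes any <think>...</think> block and trims surrounding whitespace. */
    static String stripThinking(String answer) {
        return THINK.matcher(answer).replaceAll("").strip();
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        HttpClient http = HttpClient.newHttpClient();
        HttpRequest request = HttpRequest.newBuilder(askUri("What is the temperature in Berlin?"))
                .GET()
                .build();
        HttpResponse<String> response = http.send(request, HttpResponse.BodyHandlers.ofString());
        System.out.println(stripThinking(response.body()));
    }
}
```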

For example, asking about Markt Schwaben returned the reply below. qwen3 models can emit their reasoning in a `<think>` block before the actual answer:

```text
<think>
Okay, the user asked for the temperature in Markt Schwaben. First, I needed to find the coordinates. The tool found that Markt Schwaben, Germany is at 48.1895, 11.8691. Then, using the getTemperature function with those coordinates gave 14.4°C. I should present this clearly. Let me check if there's any additional info needed, but the user just asked for the temperature, so a straightforward answer should be best.
</think>

The current temperature in Markt Schwaben, Germany is **14.4°C**.
```

Advanced features: securing and enriching your MCP server

The Quarkus MCP Server extension goes beyond exposing tools. It provides capabilities that make MCP servers secure, observable, and adaptive. Let’s look at five important features.

Security

By default, an MCP server will happily accept any connection. In production, you need to protect it. Quarkus MCP Server integrates with Quarkus Security so you can secure the /mcp and /mcp/sse endpoints with bearer tokens, OIDC, or mTLS.

This ensures that only trusted MCP clients can invoke your tools. Without it, you risk unauthorized access to sensitive data or resource abuse.
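From the client’s point of view, a server secured with bearer tokens expects an `Authorization` header on every MCP HTTP request. The JDK-only sketch below builds, but does not send, such a request against the Streamable HTTP endpoint. The token is a placeholder assumption. In the Quarkus client you would let `McpClientAuthProvider` or the OIDC integration supply it instead of hard-coding it. The `Accept` header lists both JSON and SSE because the Streamable HTTP transport allows the server to answer with either.

```java
import java.net.URI;
import java.net.http.HttpRequest;

/** Hypothetical helper that builds an authenticated MCP request without sending it. */
final class SecureMcpRequest {

    static HttpRequest build(String endpoint, String bearerToken, String jsonRpcBody) {
        return HttpRequest.newBuilder(URI.create(endpoint))
                .header("Authorization", "Bearer " + bearerToken)
                .header("Content-Type", "application/json")
                // Streamable HTTP: the server may reply with plain JSON or an SSE stream
                .header("Accept", "application/json, text/event-stream")
                .POST(HttpRequest.BodyPublishers.ofString(jsonRpcBody))
                .build();
    }

    public static void main(String[] args) {
        HttpRequest request = build("http://localhost:8080/mcp", "<your-token>",
                "{\"jsonrpc\":\"2.0\",\"id\":1,\"method\":\"tools/list\"}");
        System.out.println(request.headers().map());
    }
}
```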

Progress API

Long-running operations can leave clients blind without intermediate updates. The Progress API allows a tool method to send progress events (e.g., 25%, 75%, Done).

MCP Inspector can display these updates, giving users feedback that something is happening.

Note: Quarkus MCP Server fully supports the Progress API, but LangChain4j’s MCP integration does not yet surface progress events to the AI service. At the moment, progress is visible only when testing with MCP Inspector or compatible hosts.
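On the wire, a progress update is a JSON-RPC notification named `notifications/progress` that echoes the `progressToken` the client attached to its request. The JDK-only sketch below, with a hypothetical class name, builds one such message per finished work item. It assumes a string token without quotes. In Quarkus MCP Server you would use its Progress API rather than hand-written JSON.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical builder for MCP progress notifications, written without a JSON library. */
final class ProgressMessages {

    /** Notifications carry no "id": the client never replies to them. */
    static String progress(String progressToken, int done, int total) {
        return "{\"jsonrpc\":\"2.0\",\"method\":\"notifications/progress\","
                + "\"params\":{\"progressToken\":\"" + progressToken + "\","
                + "\"progress\":" + done + ",\"total\":" + total + "}}";
    }

    /** One notification per finished work item, e.g. one per city looked up. */
    static List<String> forItems(String progressToken, int items) {
        List<String> messages = new ArrayList<>();
        for (int done = 1; done <= items; done++) {
            messages.add(progress(progressToken, done, items));
        }
        return messages;
    }

    public static void main(String[] args) {
        forItems("req-42", 4).forEach(System.out::println);
    }
}
```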

Notifications

Sometimes you want the server to inform clients proactively. MCP notifications let the server push messages without waiting for a tool call. Examples include notifications/resources/updated when a subscribed resource (say, a weather alert for Berlin) changes, and notifications/tools/list_changed when the set of available tools changes.

This turns an MCP server into a push-capable integration point. Instead of polling for updates, clients receive real-time signals. For enterprise scenarios such as compliance alerts, fraud detection triggers, or sensor data streams, notifications are essential.
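A server-side sketch of the subscription idea, in plain Java with hypothetical names. Clients subscribe to a resource URI (in MCP via `resources/subscribe`). When the data behind that URI changes, the server emits `notifications/resources/updated` only to the subscribers. The URI `weather://alerts/berlin` is made up for illustration, and actually delivering the message is left to the MCP server framework.

```java
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;

/** Hypothetical tracker that turns resource changes into MCP update notifications. */
final class ResourceUpdates {

    // resource URI -> ids of connections subscribed to it
    private final Map<String, Set<String>> subscribers = new ConcurrentHashMap<>();

    void subscribe(String connectionId, String uri) {
        subscribers.computeIfAbsent(uri, u -> ConcurrentHashMap.newKeySet()).add(connectionId);
    }

    /** Connections to notify when uri changes; an unsubscribed resource yields none. */
    List<String> recipientsFor(String uri) {
        return List.copyOf(subscribers.getOrDefault(uri, Set.of()));
    }

    /** The notification body; like every notification it has no "id". */
    static String updatedNotification(String uri) {
        return "{\"jsonrpc\":\"2.0\",\"method\":\"notifications/resources/updated\","
                + "\"params\":{\"uri\":\"" + uri + "\"}}";
    }
}
```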

Sampling

Sampling reverses the usual direction: it lets your MCP server ask the client’s LLM to generate a completion on the server’s behalf. The server sends a sampling/createMessage request containing the messages to complete. The client, which stays in control of model selection and user approval, runs the model and returns the result.

This lets a server use AI capabilities without bundling its own model or API keys. For example, the weather server could hand raw forecast data to the client’s model and get back a short travel advisory. Because the client mediates every request, users can review or reject what the server asks the model to do.
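Per the MCP specification, a sampling request is a server-to-client JSON-RPC request named `sampling/createMessage`. The JDK-only sketch below, with a hypothetical class and hand-built JSON, shows its minimal shape: `messages` carrying the prompt and `maxTokens` capping the answer. The prompt text is only an example. The client decides which model serves it and may ask the user to approve the request first.

```java
/** Hypothetical builder for an MCP "sampling/createMessage" request (server to client). */
final class SamplingRequest {

    /** Minimal JSON string escaping: backslashes, quotes and newlines only. */
    static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"").replace("\n", "\\n");
    }

    static String createMessage(int id, String prompt, int maxTokens) {
        return "{\"jsonrpc\":\"2.0\",\"id\":" + id + ",\"method\":\"sampling/createMessage\","
                + "\"params\":{\"messages\":[{\"role\":\"user\","
                + "\"content\":{\"type\":\"text\",\"text\":\"" + escape(prompt) + "\"}}],"
                + "\"maxTokens\":" + maxTokens + "}}";
    }

    public static void main(String[] args) {
        System.out.println(createMessage(7,
                "Summarize for a traveler: Berlin 14.4 C, light rain expected.", 100));
    }
}
```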

Elicitation

Not all tool calls arrive with complete arguments. Elicitation lets your MCP server ask the user for missing information while a tool runs. The server sends an elicitation/create request with a message and a JSON schema describing the expected input, and the client replies with accept (plus the data), decline, or cancel.

Example: if a tool such as getTemperature is invoked without usable coordinates, the server can ask: “Please provide latitude and longitude.” The MCP host shows the request to the user and passes the answer back to the tool.

This ensures graceful degradation and prevents brittle errors. Instead of throwing exceptions, your tool can guide the conversation, leading to better user experience and fewer dead ends.
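In the MCP specification, elicitation is a server-to-client `elicitation/create` request. A sketch of the decision in plain Java, with hypothetical names: the tool first checks its arguments, and only if coordinates are missing does it build a request whose `requestedSchema` describes the two numbers it needs. Sending the request and handling the user’s `accept`, `decline`, or `cancel` response is left to the MCP server framework and is not shown.

```java
import java.util.Optional;

/** Hypothetical helper: decide whether to elicit coordinates, and build the request. */
final class CoordinateElicitation {

    /** Returns an elicitation/create request only when an argument is missing. */
    static Optional<String> elicitIfMissing(int id, Double latitude, Double longitude) {
        if (latitude != null && longitude != null) {
            return Optional.empty(); // nothing to ask: go straight to the weather API
        }
        String request = "{\"jsonrpc\":\"2.0\",\"id\":" + id + ",\"method\":\"elicitation/create\","
                + "\"params\":{\"message\":\"Please provide latitude and longitude.\","
                + "\"requestedSchema\":{\"type\":\"object\",\"properties\":{"
                + "\"latitude\":{\"type\":\"number\",\"minimum\":-90,\"maximum\":90},"
                + "\"longitude\":{\"type\":\"number\",\"minimum\":-180,\"maximum\":180}},"
                + "\"required\":[\"latitude\",\"longitude\"]}}}";
        return Optional.of(request);
    }

    public static void main(String[] args) {
        System.out.println(elicitIfMissing(3, null, 13.41).orElse("no elicitation needed"));
    }
}
```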

Together, these features make your MCP server production-ready: secure against unauthorized use, transparent about long-running work, capable of pushing events, able to borrow the client’s model when it needs AI help, and resilient when arguments are incomplete.

They are the difference between a demo server and an enterprise-grade MCP integration point.

Verification checklist

MCP Inspector: Connect to http://localhost:8080/mcp, list tools, execute `getTemperature`, and observe the log messages.

MCP logs: Confirm request/response logs in the client console with `quarkus.langchain4j.mcp.weather.log-*` enabled. (Quarkiverse Docs)

Thoughts for Production

Transport choice. Prefer Streamable HTTP for cloud deployment. HTTP/SSE remains for compatibility; Streamable HTTP superseded it in the 2025-03-26 revision of the MCP specification. (Quarkiverse Docs)

Auth. If your MCP server requires bearer tokens, implement `McpClientAuthProvider` (client side) or use the OIDC auth provider to forward user tokens. (Quarkiverse Docs)

Time limits. Tune `tool-execution-timeout`, `resources-timeout`, and ping health checks. (Quarkiverse Docs)

Observability. The MCP client can emit logs; route them to tracing/metrics as needed. (Quarkiverse Docs)

Models. Start with small local models for speed. Pin model names in properties and pre-pull in CI images. (Quarkiverse Docs)

Security. Treat tools as capabilities with least privilege. Externalize secrets. Validate arguments server-side. MCP is gaining OS support rapidly; keep pace with hardening recommendations. (The Verge)
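As an illustration of validating arguments server-side, the plain-Java sketch below checks coordinate ranges and city names before any external API call. The ±90 and ±180 bounds are the valid latitude and longitude ranges. The 100-character name limit and the class name are assumptions. How you surface the problems (an exception or an error result) depends on your MCP server framework.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical argument checks for the weather tools; collects problems instead of throwing. */
final class ToolArgumentValidator {

    static final int MAX_CITY_NAME_LENGTH = 100; // assumed limit

    static List<String> validateCoordinates(double latitude, double longitude) {
        List<String> problems = new ArrayList<>();
        // Written as !(in range) so that NaN is rejected too
        if (!(latitude >= -90 && latitude <= 90)) {
            problems.add("latitude must be between -90 and 90");
        }
        if (!(longitude >= -180 && longitude <= 180)) {
            problems.add("longitude must be between -180 and 180");
        }
        return problems;
    }

    static List<String> validateCityName(String cityName) {
        List<String> problems = new ArrayList<>();
        if (cityName == null || cityName.isBlank()) {
            problems.add("city name must not be blank");
        } else if (cityName.length() > MAX_CITY_NAME_LENGTH) {
            problems.add("city name is too long");
        }
        return problems;
    }
}
```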

Want to do even more?

Multiple MCP servers. Add more `quarkus.langchain4j.mcp.<name>.*` blocks, then use `@McpToolBox` without a name to expose all. (Quarkiverse Docs)

Pinning versions. Keep Quarkus and extensions aligned to a platform BOM; upgrade using the Quarkus update guides and extension pages. (Quarkus)

Native image. Quarkus native works with LangChain4j; verify your HTTP client and JSON reflection configs if needed (beyond scope here). (GitHub)

Claude Desktop / VS Code. Test your server against real hosts via STDIO or HTTP. (Home)

References

MCP spec and site for the protocol, transports, and primitives. (Model Context Protocol)

Quarkus LangChain4j MCP client docs and config reference. (Quarkiverse Docs)

Quarkus MCP Server docs (Streamable HTTP, HTTP/SSE, STDIO, root paths). (Quarkiverse Docs)

Quarkus + Ollama guide and extension page. (Quarkiverse Docs)

Connect your AI to everything, the Quarkus way.