Building a Real-Time AI Progress Tracker with Quarkus, LangChain4j, Ollama, and Granite 4

Turn your LLM pipeline into a transparent, step-by-step experience that shows retrieval, prompt creation, and model calls in real time. Powered by Quarkus, LangChain4j, and Granite 4 on Ollama.

I’ve always liked progress bars in software. They are simple, but they do something important: they show you that the computer is working, and they tell you, “Don’t worry, I will be finished soon.” You can see that something is happening.

With Large Language Models (LLMs), though, I often see the opposite. You ask a question, and then... nothing. You just wait in silence. Then, suddenly, the full answer appears.

For business software, this is not good enough. Users want to know what the system is doing. They want to see the steps, like: “Okay, now I’m finding the documents,” then “Now I am filtering the information,” and “Now I am calling the LLM to get the final answer.”

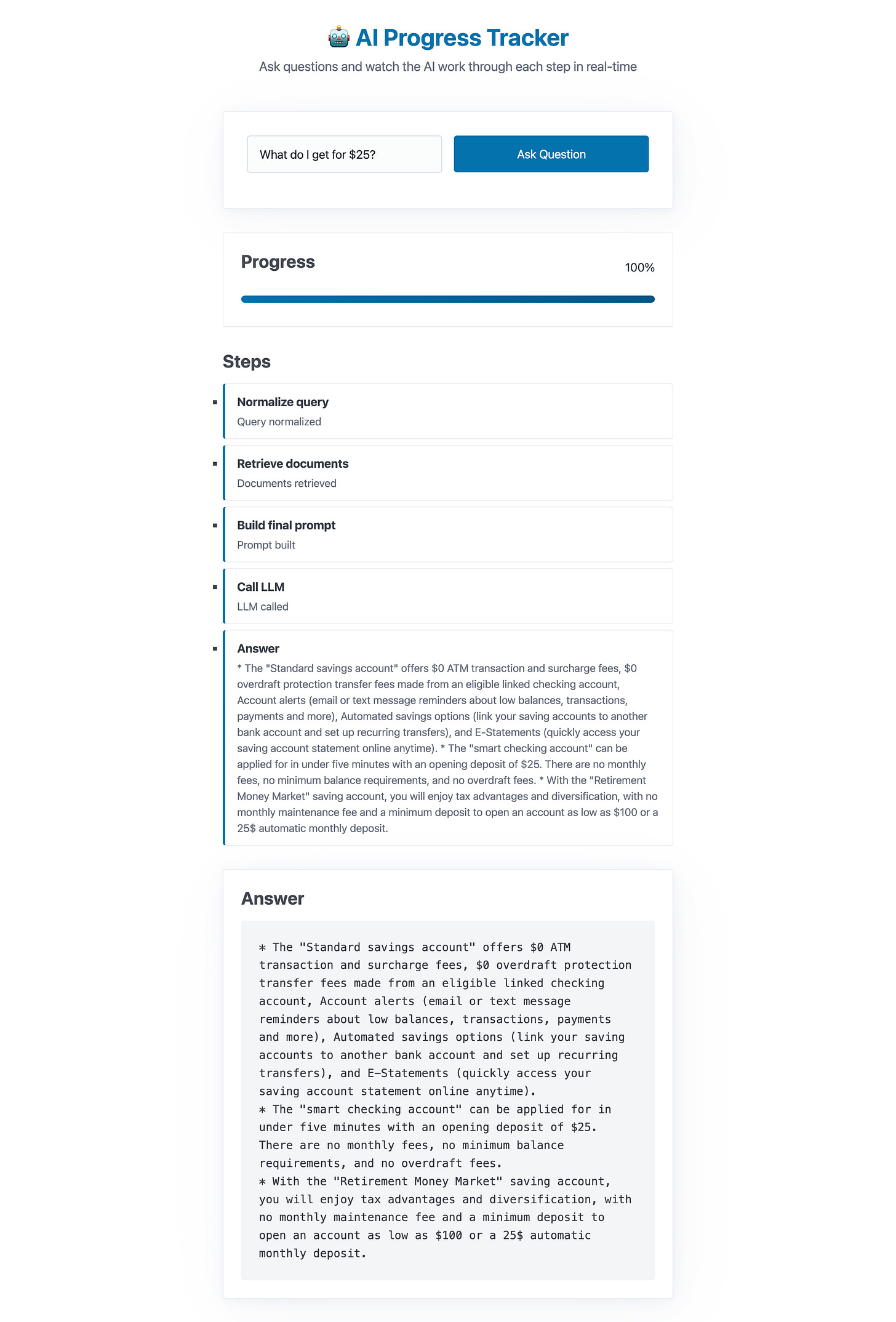

So, in this post, I want to show you how to build exactly that. We will make a real-time progress tracker for an LLM application. We will use Quarkus with LangChain4j, and run a model on our own computer with Ollama.

The idea is very simple. For each step in our code, we will add a small ProgressEvent. The system will then use these events and automatically populate the progress bar for us. The backend will send live updates to the frontend, so the user sees a smooth progress bar.

When we are finished, you will have a complete project you can run. Your LLM application will no longer be a “black box.” Instead, it will be a transparent system that shows your users exactly what it is doing.

Prerequisites

Java 21

Ollama installed and running with local models (unless you let the Dev Service spin up a model container for you)

Example models: llama3.1:8b (chat) or granite4:tiny-h

Pull the model once, for example:

```shell
ollama pull granite4:tiny-h
```

Quarkus CLI or Maven

Bootstrap

```shell
quarkus create app com.example:ai-progress-tracker -x=rest-jackson,langchain4j-ollama,quarkus-langchain4j-easy-rag
cd ai-progress-tracker
```

Docs for the extensions we use:

quarkus-rest-jackson for JSON REST. The current artifact is io.quarkus:quarkus-rest-jackson. (Quarkus)

quarkus-langchain4j-ollama for the local LLM and embeddings. (Quarkus)

quarkus-langchain4j-easy-rag to ingest documents with a single property (quarkus.langchain4j.easy-rag.path) and auto-wire a default retrieval augmentor. (Quarkiverse Docs)

As usual, you can find the full project in my GitHub repository.

Configuration

We only need a little configuration in src/main/resources/application.properties:

```properties
quarkus.langchain4j.easy-rag.path=easy-rag-catalog
quarkus.langchain4j.easy-rag.reuse-embeddings.enabled=true
quarkus.langchain4j.timeout=60s

# Ollama chat model (local)
quarkus.langchain4j.ollama.chat-model.model-id=granite4:tiny-h
quarkus.langchain4j.ollama.chat-model.temperature=0
quarkus.langchain4j.ollama.log-requests=true
```

Easy RAG scans the easy-rag-catalog directory, ingests its documents on startup, and wires a default RetrievalAugmentor to an in-memory embedding store.
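To make the retrieval step less of a black box, here is a conceptual sketch of what an in-memory embedding store does at query time: rank the stored text segments by cosine similarity to the query vector and return the top k. This is not Easy RAG's actual implementation. ToyEmbeddingStore is a hypothetical name, and the vectors are hand-written stand-ins for real embeddings produced by a model.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Conceptual sketch only (hypothetical class): Easy RAG provides the real store and embeddings.
// Here the "embeddings" are hand-written vectors instead of model output.
final class ToyEmbeddingStore {

    record Entry(String text, double[] vector) {}

    private final List<Entry> entries = new ArrayList<>();

    void add(String text, double[] vector) {
        entries.add(new Entry(text, vector));
    }

    // Returns the texts of the k entries most similar to the query vector.
    List<String> topK(double[] query, int k) {
        return entries.stream()
                .sorted(Comparator.comparingDouble((Entry e) -> cosine(query, e.vector())).reversed())
                .limit(k)
                .map(Entry::text)
                .toList();
    }

    // Cosine similarity: dot product divided by the product of the vector lengths.
    static double cosine(double[] a, double[] b) {
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        return (normA == 0 || normB == 0) ? 0 : dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }
}
```

The retrieved texts are then added to the prompt as context, which is the part the default RetrievalAugmentor handles for you.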

Sample docs

Use the sample documents from the repository, or add your own files to the easy-rag-catalog directory in the project root. A few lines of content per file are enough for the retrieval step to find something.

Progress model

A simple JSON object for streaming updates:

src/main/java/com/example/progress/ProgressEvent.java

```java
package com.example.progress;

public class ProgressEvent {

    public String step;
    public int percent;
    public String detail; // optional small text payload

    // No-arg constructor for JSON deserialization
    public ProgressEvent() {
    }

    public ProgressEvent(String step, int percent, String detail) {
        this.step = step;
        this.percent = percent;
        this.detail = detail;
    }
}
```

The AI service and pipeline

Use LangChain4j’s declarative style to define our assistant:

src/main/java/com/example/ai/Assistant.java

```java
package com.example.ai;

import dev.langchain4j.service.SystemMessage;
import dev.langchain4j.service.UserMessage;
import io.quarkiverse.langchain4j.RegisterAiService;
import jakarta.enterprise.context.ApplicationScoped;

@RegisterAiService
@ApplicationScoped
public interface Assistant {

    @SystemMessage("""
            You are a helpful assistant. Use retrieved context when available.
            Keep answers concise and cite short bullet points from context when relevant.""")
    String answer(@UserMessage String question);
}
```

With Easy RAG on the classpath and configured, a default RetrievalAugmentor is provided and wired to an in-memory store, so your service benefits from retrieval automatically.

Pipeline with Progress Callbacks

The core AI pipeline (AiPipeline) breaks down complex operations into discrete, trackable steps:

Step 1: Query Normalization (20%) - Clean and validate user input

Step 2: Document Retrieval (40%) - Fetch relevant context using RAG

Step 3: Prompt Construction (60%) - Build the final LLM prompt

Step 4: LLM Processing (80%) - Execute the language model call

Step 5: Response Composition (100%) - Format and return the answer

Each step triggers a progress callback with structured metadata about the current operation.
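A minimal sketch of the "one callback per step" idea, assuming a hypothetical helper named TimedSteps that is not part of the project: it runs a step first and only then reports it, together with how long it took. StepEvent is a simplified stand-in for the ProgressEvent class above. Measuring durations like this is one possible way to decide later which steps deserve more of the bar.

```java
import java.util.function.Consumer;
import java.util.function.Supplier;

// Hypothetical helper, not part of the project: run a step, then report it with its duration.
final class TimedSteps {

    // Simplified stand-in for the article's ProgressEvent
    record StepEvent(String step, int percent, String detail) {}

    static <T> T run(String step, int percent, Supplier<T> work, Consumer<StepEvent> callback) {
        long start = System.nanoTime();
        T result = work.get(); // do the work first...
        long millis = (System.nanoTime() - start) / 1_000_000;
        // ...then report it, so the event describes a finished step
        callback.accept(new StepEvent(step, percent, "done in " + millis + " ms"));
        return result;
    }

    public static void main(String[] args) {
        String q = run("Normalize query", 20,
                () -> "  What   is RAG? ".trim().replaceAll("\\s+", " "),
                e -> System.out.println(e.percent() + "% " + e.step() + " (" + e.detail() + ")"));
        System.out.println(q);
    }
}
```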

src/main/java/com/example/ai/AiPipeline.java

```java
package com.example.ai;

import com.example.progress.ProgressEvent;
import jakarta.enterprise.context.ApplicationScoped;
import jakarta.inject.Inject;

@ApplicationScoped
public class AiPipeline {

    @Inject
    Assistant assistant;

    public interface ProgressCallback {
        void onProgress(ProgressEvent event);
    }

    public String processWithProgress(String question, ProgressCallback callback) {
        try {
            // Each event is reported after its step finishes, so the detail text is accurate.
            // Step 1: Normalize query
            String q = normalize(question);
            callback.onProgress(new ProgressEvent("Normalize query", 20, "Query normalized"));

            // Step 2: Retrieve documents
            String preview = retrievePreview(q);
            callback.onProgress(new ProgressEvent("Retrieve documents", 40, "Documents retrieved"));

            // Step 3: Build final prompt
            String finalPrompt = buildPrompt(q, preview);
            callback.onProgress(new ProgressEvent("Build final prompt", 60, "Prompt built"));

            // Step 4: Call LLM
            String raw = callLlm(finalPrompt);
            callback.onProgress(new ProgressEvent("Call LLM", 80, "LLM called"));

            // Step 5: Compose and complete
            String answer = compose(raw);
            callback.onProgress(new ProgressEvent("Answer", 100, answer));
            return answer;
        } catch (Exception e) {
            callback.onProgress(new ProgressEvent("Error", 0, e.getMessage()));
            throw e;
        }
    }

    public String normalize(String q) {
        // Trim and collapse runs of whitespace into single spaces
        return q == null ? "" : q.trim().replaceAll("\\s+", " ");
    }

    public String retrievePreview(String q) {
        // Ask the model to surface only the top snippets (forces retrieval path).
        // This is itself an LLM call, augmented by the Easy RAG retriever.
        return assistant.answer("List 3 short bullets relevant to: " + q);
    }

    public String buildPrompt(String q, String preview) {
        return """
                Using the following context, answer clearly:
                Question: %s
                Context:
                %s
                """.formatted(q, preview);
    }

    public String callLlm(String finalPrompt) {
        return assistant.answer(finalPrompt);
    }

    public String compose(String raw) {
        // Could format markdown, add citations, etc.
        return raw;
    }
}
```

The functional callback interface decouples the business logic from progress reporting. This pattern lets the same pipeline run in different contexts (web UI, batch processing, testing) with different progress-reporting mechanisms.
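To illustrate the testing and batch contexts, here is a sketch with simplified stand-in types. Event, DemoPipeline, RecordingCallback, and CallbackDemo are hypothetical names, and DemoPipeline echoes the question instead of calling an LLM. A unit test records events into a list and asserts on them, while a command-line job simply prints them.

```java
import java.util.ArrayList;
import java.util.List;

// Stand-ins mirroring the article's ProgressCallback and ProgressEvent
interface ProgressCallback {
    void onProgress(Event event);
}

record Event(String step, int percent, String detail) {}

// Hypothetical pipeline: same callback contract, no LLM involved
final class DemoPipeline {
    String process(String question, ProgressCallback callback) {
        String q = question.trim();
        callback.onProgress(new Event("Normalize query", 20, "Query normalized"));
        String answer = "Echo: " + q; // stands in for the LLM call
        callback.onProgress(new Event("Answer", 100, answer));
        return answer;
    }
}

// Test reporter: records every event so a unit test can assert on the sequence
final class RecordingCallback implements ProgressCallback {
    final List<Event> events = new ArrayList<>();

    @Override
    public void onProgress(Event event) {
        events.add(event);
    }
}

final class CallbackDemo {
    public static void main(String[] args) {
        // Batch/CLI reporter: print progress to stdout
        new DemoPipeline().process(" What is RAG? ",
                e -> System.out.println(e.percent() + "% " + e.step()));
    }
}
```

The pipeline code never changes; only the callback passed in does, which is what keeps the SSE endpoint, a batch job, and the tests independent of each other.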

SSE resource that streams progress and the final answer

The REST API (AskResource) uses Server-Sent Events to stream progress updates to the frontend:

Endpoint: GET /ai/ask/stream?q={question}

Content-Type: text/event-stream

Data Format: JSON-serialized ProgressEvent objects

Error Handling: Graceful fallback with manual JSON construction
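On the wire, each event is a small text frame. Following the server-sent events section of the HTML Living Standard, every payload line gets a "data: " prefix and a blank line ends the event. Quarkus writes these frames for you, so this sketch (SseFrames is a hypothetical name) only shows what the browser receives. Because Jackson escapes newlines inside JSON strings, each serialized ProgressEvent fits on a single data line.

```java
// Sketch of the text/event-stream frame format; Quarkus produces these frames itself.
final class SseFrames {

    // Prefix every payload line with "data: " and terminate the event with a blank line.
    static String frame(String data) {
        StringBuilder sb = new StringBuilder();
        for (String line : data.split("\n", -1)) {
            sb.append("data: ").append(line).append('\n');
        }
        return sb.append('\n').toString();
    }

    public static void main(String[] args) {
        System.out.print(frame("{\"step\":\"Call LLM\",\"percent\":80,\"detail\":\"LLM called\"}"));
    }
}
```

The browser's EventSource strips the "data: " prefix and hands the JSON string to onmessage as event.data.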

src/main/java/com/example/api/AskResource.java

```java
package com.example.api;

import com.example.ai.AiPipeline;
import com.example.progress.ProgressEvent;
import com.fasterxml.jackson.databind.ObjectMapper;
import jakarta.inject.Inject;
import jakarta.ws.rs.GET;
import jakarta.ws.rs.Path;
import jakarta.ws.rs.Produces;
import jakarta.ws.rs.QueryParam;
import jakarta.ws.rs.core.MediaType;
import jakarta.ws.rs.sse.Sse;
import jakarta.ws.rs.sse.SseEventSink;

@Path("/ai")
public class AskResource {

    @Inject
    AiPipeline pipeline;

    @Inject
    Sse sse;

    @Inject
    ObjectMapper objectMapper;

    @GET
    @Path("/ask/stream")
    @Produces(MediaType.SERVER_SENT_EVENTS)
    public void ask(@QueryParam("q") String question, SseEventSink eventSink) {
        try {
            pipeline.processWithProgress(question, event -> sendEvent(event, eventSink));
            eventSink.close();
        } catch (Exception e) {
            sendErrorEvent(e.getMessage(), eventSink);
            eventSink.close();
        }
    }

    private void sendEvent(ProgressEvent event, SseEventSink eventSink) {
        try {
            String jsonData = objectMapper.writeValueAsString(event);
            eventSink.send(sse.newEventBuilder()
                    .data(jsonData)
                    .mediaType(MediaType.APPLICATION_JSON_TYPE)
                    .build());
        } catch (Exception e) {
            // Fallback to manual JSON construction
            sendEventManually(event, eventSink);
        }
    }

    private void sendErrorEvent(String errorMessage, SseEventSink eventSink) {
        ProgressEvent errorEvent = new ProgressEvent("Error", 0, errorMessage);
        sendEvent(errorEvent, eventSink);
    }

    private void sendEventManually(ProgressEvent event, SseEventSink eventSink) {
        try {
            // Escape values so quotes or newlines in the answer cannot break the JSON
            String jsonData = String.format("{\"step\":\"%s\",\"percent\":%d,\"detail\":\"%s\"}",
                    escapeJson(event.step), event.percent, escapeJson(event.detail));
            eventSink.send(sse.newEventBuilder()
                    .data(jsonData)
                    .mediaType(MediaType.APPLICATION_JSON_TYPE)
                    .build());
        } catch (Exception e) {
            // If even manual construction fails, close the sink
            eventSink.close();
        }
    }

    // Escapes the most common characters that are not allowed raw inside a JSON string
    private static String escapeJson(String s) {
        if (s == null) {
            return "";
        }
        return s.replace("\\", "\\\\")
                .replace("\"", "\\\"")
                .replace("\n", "\\n")
                .replace("\r", "\\r")
                .replace("\t", "\\t");
    }
}
```

Minimal UI

Let’s create a quick, minimal UI to show the functionality. You can find the CSS files in the project folder of my GitHub repository.

src/main/resources/META-INF/resources/index.html

```html
<!doctype html>
<html data-theme="light">
<head>
    <meta charset="utf-8" />
    <meta name="viewport" content="width=device-width, initial-scale=1">
    <title>LLM Progress Tracker</title>
    <link rel="stylesheet" href="https://cdn.jsdelivr.net/npm/@picocss/pico@2/css/pico.min.css">
    <link rel="stylesheet" href="style.css">
</head>
<body>
<div class="container">
    <header class="header">
        <h1>🤖 AI Progress Tracker</h1>
        <p>Ask questions and watch the AI work through each step in real-time</p>
    </header>
    <main>
        <div class="form-container">
            <form id="f">
                <div class="grid">
                    <div>
                        <input id="q" type="text" placeholder="e.g. What does this service do? How does it work?"
                               required />
                    </div>
                    <div>
                        <button type="submit" role="button">Ask Question</button>
                    </div>
                </div>
            </form>
        </div>
        <div class="progress-container hidden" id="progressContainer">
            <div class="progress-header">
                <h3>Progress</h3>
                <span id="pct">0%</span>
            </div>
            <div class="progress-bar">
                <div class="progress-fill" id="fill"></div>
            </div>
        </div>
        <div class="steps-container hidden" id="stepsContainer">
            <h3>Steps</h3>
            <ul class="steps-list" id="steps"></ul>
        </div>
        <div class="answer-container hidden" id="answerContainer">
            <h3>Answer</h3>
            <div class="answer-content" id="answer"></div>
        </div>
    </main>
</div>
<script>
    const f = document.getElementById('f');
    const q = document.getElementById('q');
    const fill = document.getElementById('fill');
    const pct = document.getElementById('pct');
    const steps = document.getElementById('steps');
    const answer = document.getElementById('answer');
    const progressContainer = document.getElementById('progressContainer');
    const stepsContainer = document.getElementById('stepsContainer');
    const answerContainer = document.getElementById('answerContainer');

    function showElement(element) {
        element.classList.remove('hidden');
        element.classList.add('fade-in');
    }

    function hideElement(element) {
        element.classList.add('hidden');
        element.classList.remove('fade-in');
    }

    function resetUI() {
        steps.innerHTML = '';
        answer.textContent = '';
        fill.style.width = '0%';
        pct.textContent = '0%';
        hideElement(progressContainer);
        hideElement(stepsContainer);
        hideElement(answerContainer);
    }

    // Use textContent so model output is shown as text, never interpreted as HTML
    function addStep(title, detail) {
        const stepItem = document.createElement('li');
        const strong = document.createElement('strong');
        strong.textContent = title;
        const detailDiv = document.createElement('div');
        detailDiv.className = 'step-detail';
        detailDiv.textContent = detail ?? '';
        stepItem.append(strong, detailDiv);
        steps.appendChild(stepItem);
    }

    function addError(message) {
        const errorItem = document.createElement('li');
        const div = document.createElement('div');
        div.className = 'error-message';
        div.textContent = message;
        errorItem.appendChild(div);
        steps.appendChild(errorItem);
    }

    f.onsubmit = (e) => {
        e.preventDefault();
        resetUI();
        showElement(progressContainer);
        showElement(stepsContainer);

        const es = new EventSource(`/ai/ask/stream?q=${encodeURIComponent(q.value)}`);

        es.onmessage = (event) => {
            try {
                const data = JSON.parse(event.data);

                // Add step to list and update progress
                addStep(data.step, data.detail);
                fill.style.width = data.percent + '%';
                pct.textContent = data.percent + '%';

                if (data.step === 'Answer' && data.detail) {
                    // Show answer when complete
                    answer.textContent = data.detail;
                    showElement(answerContainer);
                    es.close();
                } else if (data.step === 'Error') {
                    // Close explicitly; otherwise EventSource reconnects and re-runs the query
                    es.close();
                }
            } catch (err) {
                console.error('Error parsing JSON:', err, 'Data:', event.data);
                addError('Error parsing response: ' + err.message);
            }
        };

        es.onerror = () => {
            addError('Connection error occurred');
            es.close();
        };
    };
</script>
</body>
</html>
```

Uses PicoCSS for a clean, modern baseline design without writing heavy custom CSS.

Content is wrapped in a centered .container, making it feel balanced and readable.

Light theme (<html data-theme="light">)

Run it

```shell
quarkus dev
# open http://localhost:8080
```

Try a question that hits your easy-rag-catalog so that retrieval is visible in the “Retrieve documents” step.
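If you want to check the stream without a browser, a small Java client can read it line by line. This sketch assumes the app is running on localhost:8080 in dev mode and uses the JDK's java.net.http.HttpClient; StreamCheck is a hypothetical name.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.stream.Stream;

// Sketch: consume GET /ai/ask/stream from plain Java, e.g. as a quick smoke test.
final class StreamCheck {

    // Keeps only "data:" payloads; per the SSE format, one leading space after the colon is dropped.
    static Stream<String> dataPayloads(Stream<String> lines) {
        return lines.filter(line -> line.startsWith("data:"))
                .map(line -> line.substring(5))
                .map(value -> value.startsWith(" ") ? value.substring(1) : value);
    }

    public static void main(String[] args) throws Exception {
        String q = URLEncoder.encode("What does this service do?", StandardCharsets.UTF_8);
        HttpRequest request = HttpRequest.newBuilder(
                        URI.create("http://localhost:8080/ai/ask/stream?q=" + q))
                .header("Accept", "text/event-stream")
                .build();
        // ofLines() exposes the body lazily, so payloads print as they arrive
        HttpResponse<Stream<String>> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofLines());
        dataPayloads(response.body()).forEach(System.out::println);
    }
}
```

You should see one JSON line per step, ending with the Answer event, after which the server closes the stream.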

Production notes

Backpressure: SSE handles bursts well, but for high load consider WebSockets.

RAG scaling: Easy RAG uses an in-memory embedding store by default. Switch to a persistent store such as PostgreSQL (pgvector) or Redis.

Weighted steps: Right now, each step contributes an equal 20%. Add a weight field if some steps take longer.

Security: Don’t leak internal prompts. Scrub detail before streaming it to users.
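A weight field could be turned into percentages roughly like this. It is a sketch under assumptions: WeightedProgress is a hypothetical name, and the weights in main are illustrative guesses, not measurements. Each step's percentage is the running sum of weights up to that step divided by the total, so the last step always reaches 100.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Sketch: convert per-step weights into cumulative percentages.
final class WeightedProgress {

    // Input must be in step order (e.g. a LinkedHashMap). Returns the percent reached after each step.
    static Map<String, Integer> cumulativePercents(Map<String, Integer> weights) {
        int total = weights.values().stream().mapToInt(Integer::intValue).sum();
        if (total <= 0) {
            throw new IllegalArgumentException("weights must sum to a positive number");
        }
        Map<String, Integer> percents = new LinkedHashMap<>();
        int done = 0;
        for (Map.Entry<String, Integer> step : weights.entrySet()) {
            done += step.getValue();
            percents.put(step.getKey(), (int) Math.round(done * 100.0 / total));
        }
        return percents;
    }

    public static void main(String[] args) {
        Map<String, Integer> weights = new LinkedHashMap<>();
        weights.put("Normalize query", 1);
        weights.put("Retrieve documents", 3);
        weights.put("Build final prompt", 1);
        weights.put("Call LLM", 4); // illustrative: the model call is usually the slowest step
        weights.put("Answer", 1);
        // prints {Normalize query=10, Retrieve documents=40, Build final prompt=50, Call LLM=90, Answer=100}
        System.out.println(cumulativePercents(weights));
    }
}
```

The pipeline would then look up the percent for each step name instead of hard-coding 20, 40, 60, and so on.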

What this unlocks

You can reuse the callback approach for any multi-step workflow: CSV importers, video transcoders, CI log streams.

For more control, give each step a weight and compute weighted percentages.

Add a jobId and a ProgressBus if you want multi-tab or multi-user subscriptions.
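The ProgressBus idea could look roughly like this. It is a hypothetical sketch (ProgressBus, Event, subscribe, publish, and complete are my own names, not an existing API): the pipeline publishes events under a jobId, and every subscriber registered for that jobId, such as a second browser tab, receives them. ConcurrentHashMap and CopyOnWriteArrayList keep it safe when the pipeline thread publishes while new subscribers connect.

```java
import java.util.List;
import java.util.Map;
import java.util.UUID;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.function.Consumer;

// Hypothetical in-memory bus: fan out progress events per job to all interested listeners.
final class ProgressBus {

    record Event(String jobId, String step, int percent, String detail) {}

    private final Map<String, List<Consumer<Event>>> subscribers = new ConcurrentHashMap<>();

    String newJobId() {
        return UUID.randomUUID().toString();
    }

    void subscribe(String jobId, Consumer<Event> listener) {
        subscribers.computeIfAbsent(jobId, id -> new CopyOnWriteArrayList<>()).add(listener);
    }

    // Deliver the event only to listeners of its own job
    void publish(Event event) {
        subscribers.getOrDefault(event.jobId(), List.of()).forEach(listener -> listener.accept(event));
    }

    // Call when a job finishes so its listeners can be garbage-collected
    void complete(String jobId) {
        subscribers.remove(jobId);
    }
}
```

In the SSE resource, each connection would subscribe with the jobId it was given and forward events to its own SseEventSink; that wiring is left out of this sketch.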

Progress bars may seem like small UX details, but in AI-driven applications they serve a much deeper purpose. They transform opaque model calls into a transparent process, helping users build trust and giving developers a way to surface exactly what happens behind the scenes. With Quarkus, LangChain4j, Ollama, and Granite 4, you can build pipelines that aren’t just powerful, but explainable and auditable.

This tutorial showed you how to stream progress step by step, from retrieval to the final answer, with a clean UI and real-time updates. The same pattern applies to many other workflows: batch jobs, data pipelines, or long-running transactions. Once you start making progress visible, you’ll find users expect it everywhere. And in today’s enterprise landscape, visibility is as important as speed.
