Building a Museum App with Quarkus, LangChain4j, and MCP

How Java developers can combine vision AI, real-time context, and modern Quarkus features to create privacy-aware, engaging applications.

Imagine you’re visiting a museum. You snap a photo of an artwork and instantly get details about it, enriched with current context. You share how the piece made you feel with a selfie and a quick note, and later you check a live dashboard showing how others felt about the same artwork.

This isn’t science fiction. It’s a concrete example of combining Quarkus, LangChain4j, and the Quarkus Model Context Protocol (MCP) Server. Together, they make it straightforward to build AI-driven, privacy-aware, mobile-friendly applications in Java.

The example shows three things at once:

Ease of development with Quarkus. Scaffold, run, and persist data without boilerplate.

LangChain4j integration. Call multimodal models from plain Java interfaces.

MCP (Model Context Protocol). Safely bring external context (time, news, knowledge) into AI workflows.

This is not just a coding exercise. It mirrors the real-world challenges enterprises face when building AI-infused applications: privacy, modularity, and context.

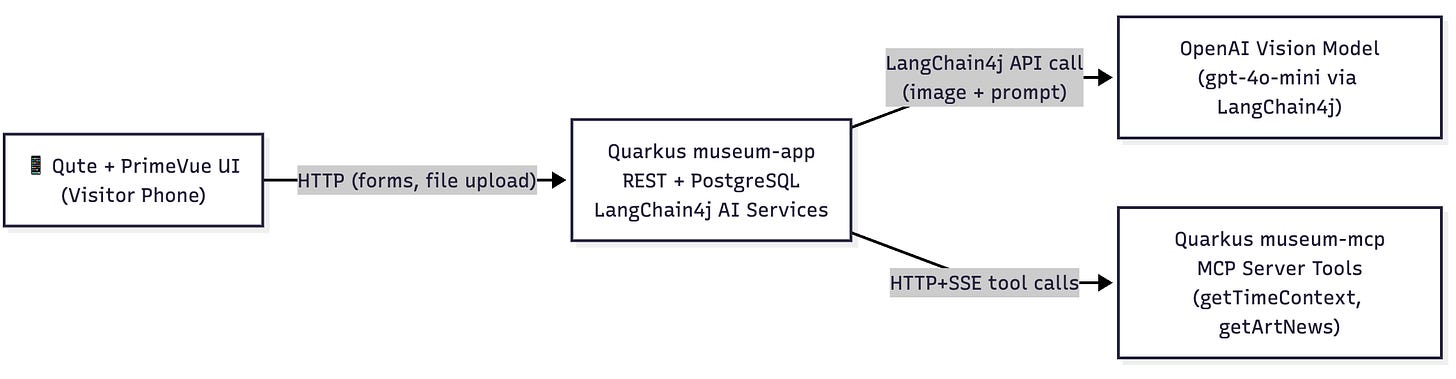

The system has two Quarkus services:

museum-app

Runs the visitor-facing application with Qute + PrimeVue UI.

Handles REST endpoints, stores sentiment in PostgreSQL, and talks to OpenAI via LangChain4j.

museum-mcp

Exposes external tools over MCP.

Implements getTimeContext and getArtNews, making it easy for the AI to reason with current date/time and live information from Wikipedia.

The UI → App → AI → MCP flow demonstrates how each layer fits together. The visitor interacts through a lightweight mobile web page, but the model behind the scenes uses MCP to pull in additional facts.

The reason for splitting into two projects is architectural clarity. The app can evolve independently of the tool providers. In real-world enterprise setups, you’ll often want to modularize external knowledge sources like this.

Development experience

This is where Quarkus shines. Instead of manually configuring databases, drivers, and setup scripts, we rely on Dev Services. Simply declare PostgreSQL as a dependency and Quarkus spins up a container on the fly when you run in dev mode.

No external database to install. No credentials to juggle. Just type mvn quarkus:dev and you’re running against a fresh database.

This dramatically lowers the barrier for experimentation and prototyping. Exactly what you want in AI-infused applications that iterate quickly.

Prerequisites

JDK 21

Maven 3.9+

Podman with rootless containers (for Dev Services PostgreSQL)

An OPENAI_API_KEY in your environment

Bootstrap projects

You can follow this post step by step, but the code is also in my GitHub repository if you would rather start there.

The quarkus-maven-plugin scaffolds both apps in seconds.

With Dev Services, PostgreSQL runs automatically in a Podman container, so no manual database setup is required.

mkdir museum && cd museum

# museum-app

mvn io.quarkus.platform:quarkus-maven-plugin:3.26.4:create \

-DprojectGroupId=com.example \

-DprojectArtifactId=museum-app \

-DclassName="com.ibm.txc.museum.ArtResource" \

-Dpath="/api/art" \

-Dextensions="rest-jackson,qute,hibernate-orm-panache,jdbc-postgresql,smallrye-health,quarkus-langchain4j-openai,quarkus-langchain4j-mcp,quarkus-qute-web"

# museum-mcp

mvn io.quarkus.platform:quarkus-maven-plugin:3.26.4:create \

-DprojectGroupId=com.example \

-DprojectArtifactId=museum-mcp \

-DclassName="com.ibm.txc.museum.mcp.MuseumMcpServer" \

-Dextensions="rest-jackson,quarkus-mcp-server-sse"

Configure

museum-app/src/main/resources/application.properties

quarkus.http.port=8080

quarkus.default-locale=en

quarkus.qute.remove-standalone-lines=true

# DB via Dev Services

quarkus.hibernate-orm.schema-management.strategy=drop-and-create

quarkus.datasource.db-kind=postgresql

# OpenAI

quarkus.langchain4j.openai.api-key=${OPENAI_API_KEY}

quarkus.langchain4j.openai.chat-model.model-name=gpt-4.1-mini

quarkus.langchain4j.timeout=30S

#quarkus.langchain4j.openai.chat-model.log-requests=true

quarkus.langchain4j.openai.chat-model.log-responses=true

# MCP server endpoint

quarkus.langchain4j.mcp.museum.transport-type=streamable-http

quarkus.langchain4j.mcp.museum.url=http://localhost:8081/mcp

quarkus.langchain4j.mcp.museum.log-requests=true

quarkus.langchain4j.mcp.museum.log-responses=true

museum-mcp/src/main/resources/application.properties

quarkus.http.port=8081

Data model (museum-app)

Our domain is intentionally simple: Artworks and Sentiment Votes.

Artworks are seeded at startup. Each has a unique code, title, artist, year, description, and optional image URL. We seed four famous, widely recognized paintings.

Sentiment Votes represent visitors’ impressions. Importantly, we do not store selfies or text impressions. We only persist the artwork reference, the classified sentiment label, the timestamp, and the IP address.

This design choice demonstrates privacy by default. Visitors contribute to the collective experience without risking personal exposure.
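Because only the classified label is persisted, it helps to make sure the stored value really is one of the five expected labels. The following is a minimal sketch, not part of the original project: the class name SentimentLabels and the fallback to "neutral" are assumptions made for illustration, and rejecting an unrecognized answer would be an equally valid choice.

```java
import java.util.List;
import java.util.Locale;

/** Hypothetical helper, not part of the original project. */
final class SentimentLabels {

    // The five labels the SentimentAi prompt asks the model to choose from.
    static final List<String> ALLOWED = List.of(
            "very_negative", "negative", "neutral", "positive", "very_positive");

    private SentimentLabels() {
    }

    /**
     * Maps raw model output to one of the allowed labels.
     * Falls back to "neutral" (an assumption) when the output is null or not recognized.
     */
    static String normalize(String raw) {
        if (raw == null) {
            return "neutral";
        }
        String cleaned = raw.trim()
                .toLowerCase(Locale.ROOT)
                .replace(' ', '_')
                .replace('-', '_');
        // Drop surrounding quotes or punctuation the model may add around the label.
        cleaned = cleaned.replaceAll("^[^a-z]+|[^a-z]+$", "");
        return ALLOWED.contains(cleaned) ? cleaned : "neutral";
    }
}
```

A resource could call SentimentLabels.normalize(...) on the model's answer before persisting it, so that the analytics query never misses a vote because of stray whitespace, casing, or quoting.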

Art.java

package com.ibm.txc.museum.domain;

import io.quarkus.hibernate.orm.panache.PanacheEntity;

import jakarta.persistence.Column;

import jakarta.persistence.Entity;

@Entity

public class Art extends PanacheEntity {

@Column(nullable = false, unique = true)

public String code;

@Column(nullable = false)

public String title;

@Column(nullable = false)

public String artist;

@Column(nullable = false)

public Integer year;

public String imageUrl;

public String description;

}

SentimentVote.java

package com.ibm.txc.museum.domain;

import java.time.OffsetDateTime;

import io.quarkus.hibernate.orm.panache.PanacheEntity;

import jakarta.persistence.Column;

import jakarta.persistence.Entity;

import jakarta.persistence.ManyToOne;

@Entity

public class SentimentVote extends PanacheEntity {

@ManyToOne(optional = false)

public Art art;

@Column(nullable = false)

public String label;

@Column(nullable = false)

public OffsetDateTime createdAt;

@Column(nullable = false)

public String ip;

}

Add Seed Data

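Seed the four artworks with a SQL script. Quarkus loads import.sql from src/main/resources by default in dev mode; if you name the file insert.sql, as here, point quarkus.hibernate-orm.sql-load-script at it.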

INSERT INTO Art (id, code, title, artist, year, imageUrl, description) VALUES

(1, 'ART-0001', 'Starry Night', 'Vincent van Gogh', 1889, 'https://upload.wikimedia.org/wikipedia/commons/e/ea/The_Starry_Night.jpg', 'Post-Impressionist masterpiece with swirling night sky.');

INSERT INTO Art (id, code, title, artist, year, imageUrl, description) VALUES

(2, 'ART-0002', 'Mona Lisa', 'Leonardo da Vinci', 1503, 'https://upload.wikimedia.org/wikipedia/commons/6/6a/Mona_Lisa.jpg', 'Portrait renowned for enigmatic expression.');

INSERT INTO Art (id, code, title, artist, year, imageUrl, description) VALUES

(3, 'ART-0003', 'The Persistence of Memory', 'Salvador Dalí', 1931, 'https://upload.wikimedia.org/wikipedia/en/d/dd/The_Persistence_of_Memory.jpg', 'Surreal landscape with melting clocks.');

INSERT INTO Art (id, code, title, artist, year, imageUrl, description) VALUES

(4, 'ART-0004', 'Girl with a Pearl Earring', 'Johannes Vermeer', 1665, 'https://upload.wikimedia.org/wikipedia/commons/d/d7/Meisje_met_de_parel.jpg', 'Tronie with luminous light and gaze.');

LangChain4j AI Services

Now comes the fun part: wiring AI into a Java app. LangChain4j makes this almost too easy.

Instead of writing REST clients or parsing JSON responses manually, we define annotated Java interfaces:

ArtInspectorAi: Takes an image, plus extra context (time and latest news), and returns structured JSON identifying the artwork.

SentimentAi: Takes a selfie and a short impression, and returns one of five sentiment labels.

With just these interfaces, Quarkus and LangChain4j generate the necessary plumbing. When we call ai.identify(...) or ai.classify(...), the framework sends the data to the OpenAI Vision model, applies our prompt instructions, and delivers back a clean Java String result.

It’s declarative, type-safe, and requires no boilerplate.
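Since the AI services return a plain String, the calling code may want to tidy the answer before handing it to the browser. Chat models sometimes wrap a JSON answer in a Markdown code fence, so a small guard can help. This is a sketch under that assumption; the class ModelOutput and its method are hypothetical names and not part of LangChain4j or the original project.

```java
/** Hypothetical helper, not part of the original project. */
final class ModelOutput {

    // Three backticks, built at runtime to keep this listing easy to embed.
    private static final String FENCE = "`".repeat(3);

    private ModelOutput() {
    }

    /**
     * Returns the payload without a surrounding Markdown code fence.
     * Input that is not fenced is only trimmed.
     */
    static String stripCodeFence(String raw) {
        if (raw == null) {
            return "";
        }
        String text = raw.trim();
        boolean fenced = text.length() >= 2 * FENCE.length()
                && text.startsWith(FENCE)
                && text.endsWith(FENCE);
        if (!fenced) {
            return text;
        }
        // Skip the opening fence line, including an optional language tag such as "json".
        int firstNewline = text.indexOf('\n');
        if (firstNewline < 0) {
            return text;
        }
        return text.substring(firstNewline + 1, text.length() - FENCE.length()).trim();
    }
}
```

Calling ModelOutput.stripCodeFence(ai.identify(img)) would leave well-formed answers untouched and unwrap fenced ones.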

ArtInspectorAi.java

package com.ibm.txc.museum.ai;

import dev.langchain4j.data.image.Image;

import dev.langchain4j.service.SystemMessage;

import dev.langchain4j.service.UserMessage;

import io.quarkiverse.langchain4j.RegisterAiService;

import io.quarkiverse.langchain4j.mcp.runtime.McpToolBox;

@RegisterAiService

public interface ArtInspectorAi {

@SystemMessage("""

Identify artwork from a visitor photo.

Return JSON with: title, artist, confidence, why, today.

""")

@UserMessage("""

Photo: {{image}}

Use the MCP tool getTimeContext to place the answer in a time context.

Use the MCP tool getArtNews to fetch the latest updates about the artwork.

""")

@McpToolBox("museum")

String identify(Image image);

}

SentimentAi.java

package com.ibm.txc.museum.ai;

import dev.langchain4j.data.image.Image;

import dev.langchain4j.service.SystemMessage;

import dev.langchain4j.service.UserMessage;

import io.quarkiverse.langchain4j.RegisterAiService;

@RegisterAiService

public interface SentimentAi {

@SystemMessage("""

Classify selfie + text impression into:

very_negative, negative, neutral, positive, very_positive.

Only return the label.

""")

@UserMessage("""

Selfie: {{image}}

Impression: "{{impression}}"

""")

String classify(Image image, String impression);

}

MCP Server (museum-mcp)

The second service (museum-mcp) defines tools with @Tool annotations:

getTimeContext: packages the current ISO timestamp, year, date, and weekday.

getArtNews: queries Wikipedia for the latest summary about an artwork.

The server advertises the annotated methods as MCP tools automatically and exposes them over HTTP, at the /mcp endpoint that the app is configured to use.

This means any MCP-aware client — in this case, LangChain4j — can call the tools without custom plumbing.

This separation of concerns shows how context providers can be modularized.

Enterprises can add their own tools, from SAP, Salesforce, or internal APIs, without touching the core app.
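The time-context tool is easy to reason about in isolation. The sketch below builds the same four fields as the TimeContext record in the listing that follows, but takes a java.time.Clock so the result is reproducible in a test. The class name TimeContextSketch and the Clock parameter are assumptions added for illustration; the actual tool simply calls OffsetDateTime.now().

```java
import java.time.Clock;
import java.time.OffsetDateTime;
import java.time.format.DateTimeFormatter;

/** Hypothetical stand-alone version of the getTimeContext logic. */
final class TimeContextSketch {

    /** Same shape as the TimeContext record in MuseumMcpServer. */
    record TimeContext(String iso, int year, String today, String weekday) {
    }

    private TimeContextSketch() {
    }

    /** Builds the context from an explicit clock instead of the system time. */
    static TimeContext from(Clock clock) {
        OffsetDateTime now = OffsetDateTime.now(clock);
        return new TimeContext(
                now.toString(),                               // ISO-8601 timestamp with offset
                now.getYear(),
                now.format(DateTimeFormatter.ISO_LOCAL_DATE), // date only, e.g. 2025-01-15
                now.getDayOfWeek().toString());               // upper-case English weekday name
    }
}
```

With Clock.systemDefaultZone() this behaves like the tool itself; with Clock.fixed(...) it yields a stable value for unit tests.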

MuseumMcpServer.java

package com.ibm.txc.museum.mcp;

import java.net.URI;

import java.net.http.HttpClient;

import java.net.http.HttpRequest;

import java.net.http.HttpResponse;

import java.time.OffsetDateTime;

import java.time.format.DateTimeFormatter;

import io.quarkiverse.mcp.server.Tool;

import jakarta.inject.Singleton;

@Singleton

public class MuseumMcpServer {

@Tool(name = "getTimeContext", description = "Return current time context")

public TimeContext getTimeContext() {

var now = OffsetDateTime.now();

return new TimeContext(

now.toString(),

now.getYear(),

now.format(DateTimeFormatter.ISO_LOCAL_DATE),

now.getDayOfWeek().toString());

}

@Tool(name = "getArtNews", description = "Fetch latest updates from Wikipedia")

public News getArtNews(String title) {

if (title == null || title.isBlank())

return new News("No title provided");

try {

HttpClient http = HttpClient.newHttpClient();

// Use the more reliable MediaWiki API instead of REST API

String url = "https://en.wikipedia.org/w/api.php?action=query&format=json&prop=extracts&exintro=true&explaintext=true&titles="

+

java.net.URLEncoder.encode(title, java.nio.charset.StandardCharsets.UTF_8);

HttpResponse<String> resp = http.send(

HttpRequest.newBuilder(URI.create(url))

.header("User-Agent", "MuseumMCP/1.0 (Educational Project; markus@jboss.org)")

.GET()

.build(),

HttpResponse.BodyHandlers.ofString());

if (resp.statusCode() == 200) {

return new News(resp.body());

} else {

return new News("Error: HTTP " + resp.statusCode() + " - " + resp.body());

}

} catch (Exception e) {

return new News("Error: " + e.getMessage());

}

}

public record TimeContext(String iso, int year, String today, String weekday) {

}

public record News(String latestUpdates) {

}

}

REST Endpoints (museum-app)

The app exposes clean REST resources:

ArtVisionResource. Receives an uploaded photo, encodes it, and passes it to ArtInspectorAi. The AI in turn uses MCP tools to enrich the answer.

SentimentResource. Receives a selfie + impression, calls SentimentAi, and persists the label. Consent and IP logging are built in.

AnalyticsResource. Aggregates votes per artwork and returns a simple JSON summary.

ArtResource. Exposes the seeded artworks to the UI.

These endpoints demonstrate Quarkus’ lightweight REST layer and its integration with Panache ORM for persistence.
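Both upload resources below hard-code image/jpeg as the MIME type, although the file inputs in the UI accept image/*. One possible refinement is to look at the first bytes of the upload. This is a minimal sketch, not part of the original project: ImageTypes is a hypothetical helper, it only recognizes JPEG, PNG, GIF, and WebP, and other formats that phones may produce are not covered.

```java
/** Hypothetical helper, not part of the original project. */
final class ImageTypes {

    private ImageTypes() {
    }

    /** Returns a MIME type based on the leading bytes, or null if the format is not recognized. */
    static String sniffMimeType(byte[] data) {
        if (data == null) {
            return null;
        }
        if (startsWith(data, 0xFF, 0xD8, 0xFF)) {
            return "image/jpeg";
        }
        if (startsWith(data, 0x89, 'P', 'N', 'G', 0x0D, 0x0A, 0x1A, 0x0A)) {
            return "image/png";
        }
        if (startsWith(data, 'G', 'I', 'F', '8')) {
            return "image/gif";
        }
        // WebP files start with "RIFF", four size bytes, then "WEBP".
        if (data.length >= 12 && startsWith(data, 'R', 'I', 'F', 'F')
                && data[8] == 'W' && data[9] == 'E' && data[10] == 'B' && data[11] == 'P') {
            return "image/webp";
        }
        return null;
    }

    /** Compares the first bytes of data (as unsigned values) with the given prefix. */
    private static boolean startsWith(byte[] data, int... prefix) {
        if (data.length < prefix.length) {
            return false;
        }
        for (int i = 0; i < prefix.length; i++) {
            if ((data[i] & 0xFF) != prefix[i]) {
                return false;
            }
        }
        return true;
    }
}
```

A resource could pass the detected type to Image.builder().mimeType(...) and answer with a 400 when sniffMimeType returns null.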

package com.ibm.txc.museum.vision;

import java.nio.file.Files;

import java.util.Base64;

import org.jboss.resteasy.reactive.multipart.FileUpload;

import com.ibm.txc.museum.ai.ArtInspectorAi;

import dev.langchain4j.data.image.Image;

import io.quarkus.logging.Log;

import jakarta.inject.Inject;

import jakarta.ws.rs.Consumes;

import jakarta.ws.rs.FormParam;

import jakarta.ws.rs.POST;

import jakarta.ws.rs.Path;

import jakarta.ws.rs.Produces;

import jakarta.ws.rs.core.MediaType;

import jakarta.ws.rs.core.Response;

@Path("/api/vision")

public class ArtVisionResource {

@Inject

ArtInspectorAi ai;

@POST

@Path("/describe")

@Consumes(MediaType.MULTIPART_FORM_DATA)

@Produces(MediaType.APPLICATION_JSON)

public Response describe(@FormParam("photo") FileUpload upload) {

try {

byte[] bytes = Files.readAllBytes(upload.uploadedFile());

// Base64-encode the image for the LLM (kept in memory only, never persisted)

String b64 = Base64.getEncoder().encodeToString(bytes);

Image img = Image.builder()

.base64Data(b64)

.mimeType("image/jpeg")

.build();

String json = ai.identify(img);

return Response.ok(json).build();

} catch (Exception e) {

Log.error("Error describing image", e);

return Response.status(400).entity("{\"error\":\"bad_image\"}").build();

}

}

}

SentimentResource: selfie + impression → SentimentAi → persist.

package com.ibm.txc.museum.sentiment;

import java.nio.file.Files;

import java.time.OffsetDateTime;

import java.util.Base64;

import org.jboss.resteasy.reactive.multipart.FileUpload;

import com.ibm.txc.museum.ai.SentimentAi;

import com.ibm.txc.museum.domain.Art;

import com.ibm.txc.museum.domain.SentimentVote;

import dev.langchain4j.data.image.Image;

import jakarta.inject.Inject;

import jakarta.transaction.Transactional;

import jakarta.ws.rs.Consumes;

import jakarta.ws.rs.FormParam;

import jakarta.ws.rs.HeaderParam;

import jakarta.ws.rs.POST;

import jakarta.ws.rs.Path;

import jakarta.ws.rs.PathParam;

import jakarta.ws.rs.Produces;

import jakarta.ws.rs.core.MediaType;

import jakarta.ws.rs.core.Response;

@Path("/api/sentiment")

public class SentimentResource {

@Inject

SentimentAi ai;

@POST

@Path("/{code}")

@Consumes(MediaType.MULTIPART_FORM_DATA)

@Produces(MediaType.APPLICATION_JSON)

@Transactional

public Response vote(@PathParam("code") String code,

@FormParam("selfie") FileUpload upload,

@FormParam("impression") String impression,

@HeaderParam("X-Forwarded-For") String xff,

@HeaderParam("X-Real-IP") String xreal,

@HeaderParam("User-Agent") String ua,

@HeaderParam("Host") String host,

@HeaderParam("Referer") String ref) {

Art art = Art.find("code", code).firstResult();

if (art == null)

return Response.status(404).build();

String clientIp = pickIp(xff, xreal);

try {

byte[] bytes = Files.readAllBytes(upload.uploadedFile());

String b64 = Base64.getEncoder().encodeToString(bytes);

Image img = Image.builder()

.base64Data(b64)

.mimeType("image/jpeg")

.build();

String label = ai.classify(img, impression == null ? "" : impression);

SentimentVote v = new SentimentVote();

v.art = art;

v.label = label.trim();

v.createdAt = OffsetDateTime.now();

v.ip = clientIp == null ? "unknown" : clientIp;

v.persist();

// Let Jackson serialize the body so the model-provided label is always escaped correctly

return Response.ok(java.util.Map.of("ok", true, "label", v.label)).build();

} catch (Exception e) {

return Response.status(400).entity("{\"error\":\"bad_image_or_model\"}").build();

}

}

private String pickIp(String xff, String xreal) {

if (xreal != null && !xreal.isBlank())

return xreal;

if (xff != null && !xff.isBlank())

return xff.split(",")[0].trim();

return null;

}

}

AnalyticsResource: counts per sentiment label.

package com.ibm.txc.museum.sentiment;

import java.util.LinkedHashMap;

import java.util.List;

import java.util.Map;

import com.ibm.txc.museum.domain.Art;

import com.ibm.txc.museum.domain.SentimentVote;

import io.quarkus.hibernate.orm.panache.PanacheQuery;

import jakarta.ws.rs.GET;

import jakarta.ws.rs.Path;

import jakarta.ws.rs.Produces;

import jakarta.ws.rs.core.MediaType;

@Path("/api/analytics")

@Produces(MediaType.APPLICATION_JSON)

public class AnalyticsResource {

@GET

@Path("/summary")

public Map<String, Object> summary() {

Map<String, Object> out = new LinkedHashMap<>();

List<Art> arts = Art.listAll();

for (Art a : arts) {

Map<String, Long> counts = new LinkedHashMap<>();

for (String label : List.of("very_negative", "negative", "neutral", "positive", "very_positive")) {

counts.put(label, count(a, label));

}

out.put(a.code, counts);

}

return out;

}

private long count(Art a, String label) {

PanacheQuery<SentimentVote> q = SentimentVote.find("art=?1 and label=?2", a, label);

return q.count();

}

}

ArtResource: expose seeded artworks.

package com.ibm.txc.museum;

import java.util.List;

import com.ibm.txc.museum.domain.Art;

import jakarta.ws.rs.GET;

import jakarta.ws.rs.Path;

import jakarta.ws.rs.Produces;

import jakarta.ws.rs.core.MediaType;

@Path("/api/art")

@Produces(MediaType.APPLICATION_JSON)

public class ArtResource {

@GET

public List<Art> list() {

return Art.listAll();

}

}

Qute UI with PrimeVue (CDN)

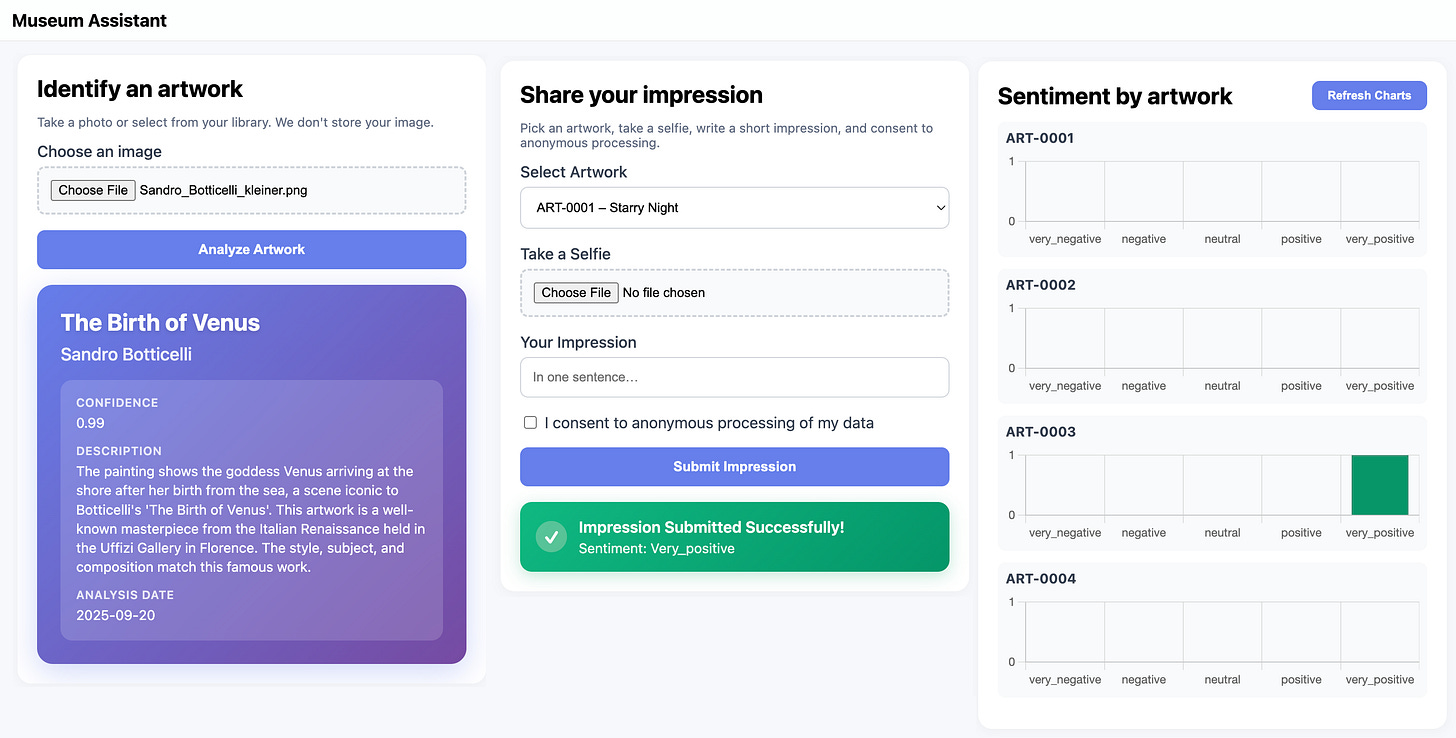

The front end is deliberately not an SPA.

We use Qute templates and CDN-based PrimeVue components to keep it simple and phone-friendly:

Identify Artwork card lets visitors upload/take a photo and shows structured results.

Share Impression card allows selfie + text submission with a consent checkbox.

Charts card visualizes sentiment per artwork with PrimeVue’s Chart component (Chart.js under the hood).

This choice underscores a lesson: you don’t need a heavy SPA to build modern, reactive UIs.

Server-rendered templates with progressive enhancement are often faster, cheaper, and easier to secure.

_layout.html: phone-sized responsive layout, includes PrimeVue and Chart.js.

<!doctype html>

<html lang="en">

<head>

<meta charset="utf-8"/>

<meta name="viewport" content="width=device-width, initial-scale=1"/>

<title>{#insert title}Museum{/insert}</title>

<link rel="icon" href="data:,">

<style>

/* Styles omitted; see the repository. */

</style>

<!-- Vue + PrimeVue CDN + theme + Chart.js -->

<script src="https://unpkg.com/vue@3/dist/vue.global.js"></script>

<script src="https://unpkg.com/primevue@latest/primevue.min.js"></script>

<script src="https://unpkg.com/@primeuix/themes/umd/aura.js"></script>

<script src="https://cdn.jsdelivr.net/npm/chart.js"></script>

</head>

<body>

<header><h1>{#insert title}Museum{/insert}</h1></header>

<div class="container">

{#insert body}{/insert}

</div>

<footer>Built with Quarkus + Qute + PrimeVue</footer>

</body>

</html>

index.html: three cards (Identify artwork, Share impression, Charts).

{#include _layout}

{#title}Museum Assistant{/title}

{#body}

<div class="card">

<h2 style="margin:0 0 6px 0;">Identify an artwork</h2>

<p class="muted">Take a photo or select from your library. We don't store your image.</p>

<form id="identifyForm" enctype="multipart/form-data">

<div class="form-group">

<label for="photo">Choose an image</label>

<input type="file" id="photo" name="photo" accept="image/*" capture="environment" required />

</div>

<button type="submit" class="btn" style="width: 100%;">Analyze Artwork</button>

</form>

<!-- Loading state -->

<div id="loadingState" class="loading" style="display: none;">

<div class="spinner"></div>

<span>Analyzing your artwork...</span>

</div>

<!-- Beautiful result display -->

<div id="resultCard" class="result-card">

<div class="result-title" id="resultTitle"></div>

<div class="result-artist" id="resultArtist"></div>

<div class="result-details">

<div class="result-detail-item">

<div class="result-detail-label">Confidence</div>

<div class="result-detail-value" id="resultConfidence"></div>

</div>

<div class="result-detail-item">

<div class="result-detail-label">Description</div>

<div class="result-detail-value" id="resultDescription"></div>

</div>

<div class="result-detail-item">

<div class="result-detail-label">Analysis Date</div>

<div class="result-detail-value" id="resultDate"></div>

</div>

</div>

</div>

</div>

<div class="card">

<h2 style="margin:0 0 6px 0;">Share your impression</h2>

<p class="muted">

Pick an artwork, take a selfie, write a short impression, and consent to anonymous processing.

</p>

<form id="sentForm" enctype="multipart/form-data">

<div class="form-group">

<label for="code">Select Artwork</label>

<select id="code" name="code" required style="width: 100%; padding: 12px; border: 1px solid #d1d5db; border-radius: 8px; background: white;">

{#for a in cdi:visionUi.list}

<option value="{a.code}">{a.code} – {a.title}</option>

{/for}

</select>

</div>

<div class="form-group">

<label for="selfie">Take a Selfie</label>

<input type="file" id="selfie" name="selfie" accept="image/*" capture="user" required />

</div>

<div class="form-group">

<label for="impression">Your Impression</label>

<input type="text" id="impression" name="impression" placeholder="In one sentence…" style="width: 100%; padding: 12px; border: 1px solid #d1d5db; border-radius: 8px;" />

</div>

<div class="form-group">

<label style="display: flex; align-items: center; font-weight: normal;">

<input type="checkbox" name="consent" required style="margin-right: 8px;" />

I consent to anonymous processing of my data

</label>

</div>

<button type="submit" class="btn" style="width: 100%;">Submit Impression</button>

</form>

<!-- Beautiful sentiment result display -->

<div id="sentResult" class="sentiment-result" style="display: none;">

<div class="sentiment-success">

<div class="sentiment-icon">✓</div>

<div class="sentiment-content">

<div class="sentiment-title">Impression Submitted Successfully!</div>

<div class="sentiment-label" id="sentimentLabel"></div>

</div>

</div>

</div>

</div>

<div id="charts" class="card">

<div style="display: flex; justify-content: space-between; align-items: center; margin-bottom: 12px;">

<h2 style="margin:0;">Sentiment by artwork</h2>

<button onclick="loadCharts()" class="btn" style="padding: 8px 16px; font-size: 12px;">Refresh Charts</button>

</div>

<div id="chartApp">

<div v-for="(data, code) in chartDataByCode" :key="code" style="margin:12px 0;">

<h3 style="margin:0 0 6px 0;">{{ code }}</h3>

<canvas :id="'chart-' + code" width="400" height="100"></canvas>

</div>

</div>

</div>

<script>

// Skipped here. Check repository for details.

</script>

{/body}

{/include}

JS progressively enhances forms; charts use PrimeVue Chart (wrapping Chart.js).

Run locally

It’s as simple as:

Start MCP server:

cd museum-mcp

mvn quarkus:dev

Start app:

cd museum-app

export OPENAI_API_KEY=sk-...

mvn quarkus:dev

The MCP server runs on port 8081.

The app runs on port 8080 and connects automatically to the PostgreSQL container that Dev Services starts.

Within seconds you can open the app in your browser or on your phone and try the three workflows.

Verify workflows

Identify: Upload a famous painting → AI responds with title, artist, confidence, and “today” context enriched from MCP.

Impression: Select artwork, take selfie, add one sentence → AI classifies it as a sentiment label and stores it anonymously.

Charts: Refresh the sentiment dashboard → see aggregated community reactions.

This shows the full loop: user input → AI reasoning → tool augmentation → persistent, anonymous outcome → visualization.
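The aggregation behind the charts can be illustrated without a database. The sketch below produces the same shape as the summary endpoint (artwork code, then label, then count) in a single pass over the votes. The class SentimentSummary and its Vote record are hypothetical stand-ins for the Panache entities, used here only so the example is self-contained.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical in-memory version of the analytics summary. */
final class SentimentSummary {

    static final List<String> LABELS = List.of(
            "very_negative", "negative", "neutral", "positive", "very_positive");

    /** Minimal stand-in for a persisted vote: artwork code plus sentiment label. */
    record Vote(String artCode, String label) {
    }

    private SentimentSummary() {
    }

    /** Counts votes per artwork and label; unknown artworks and labels are ignored. */
    static Map<String, Map<String, Long>> summarize(List<String> artCodes, List<Vote> votes) {
        Map<String, Map<String, Long>> out = new LinkedHashMap<>();
        // Pre-fill every artwork with all five labels so the charts always have five bars.
        for (String code : artCodes) {
            Map<String, Long> counts = new LinkedHashMap<>();
            for (String label : LABELS) {
                counts.put(label, 0L);
            }
            out.put(code, counts);
        }
        for (Vote vote : votes) {
            Map<String, Long> counts = out.get(vote.artCode());
            if (counts != null && counts.containsKey(vote.label())) {
                counts.merge(vote.label(), 1L, Long::sum);
            }
        }
        return out;
    }
}
```

In the real service, a grouped database query would likely be the better fit for large vote counts; the single-pass version is only meant to make the output structure concrete.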

Production notes

This demo is production-inspired. We highlight:

Privacy: images are never stored; only minimal metadata is logged.

Resilience: external calls (OpenAI, Wikipedia) should be retried or cached.

Scalability: MCP tools can be distributed across services.

Replaceability: OpenAI can be swapped with a local LLM provider without changing code structure.

These are exactly the trade-offs architects face in regulated industries.
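The resilience note can be made concrete with a small retry-and-cache wrapper around an external lookup such as the Wikipedia call. This is a plain-Java sketch under stated assumptions: CachedRetry is a hypothetical class, the cache never expires, and only RuntimeExceptions are retried. In a Quarkus application, the MicroProfile Fault Tolerance annotations (for example @Retry) would probably be the more natural choice.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

/** Hypothetical helper: retries a failing lookup with exponential backoff and caches successes. */
final class CachedRetry<K, V> {

    private final Map<K, V> cache = new ConcurrentHashMap<>();
    private final Function<K, V> loader;
    private final int maxAttempts;
    private final long initialBackoffMillis;

    CachedRetry(Function<K, V> loader, int maxAttempts, long initialBackoffMillis) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        this.loader = loader;
        this.maxAttempts = maxAttempts;
        this.initialBackoffMillis = initialBackoffMillis;
    }

    /** Returns the cached value if present; otherwise loads it, retrying on RuntimeException. */
    V get(K key) {
        V cached = cache.get(key);
        if (cached != null) {
            return cached;
        }
        RuntimeException last = null;
        long backoff = initialBackoffMillis;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                V value = loader.apply(key);
                if (value != null) {
                    cache.put(key, value); // only successful, non-null results are cached
                }
                return value;
            } catch (RuntimeException e) {
                last = e;
                if (attempt < maxAttempts) {
                    sleep(backoff);
                    backoff *= 2; // exponential backoff between attempts
                }
            }
        }
        throw last; // all attempts failed
    }

    private static void sleep(long millis) {
        try {
            Thread.sleep(millis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("Interrupted while waiting to retry", e);
        }
    }
}
```

Wrapping the Wikipedia request as new CachedRetry<String, String>(title -> fetch(title), 3, 200), where fetch is whatever method performs the HTTP call, would spare the external API repeated requests for the same artwork.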

The big picture

This demo illustrates:

Quarkus developer joy: scaffold, code, run, iterate.

LangChain4j simplicity: AI services as interfaces, not plumbing.

MCP extensibility: tools as first-class citizens, enabling contextual AI.

For Java developers, it’s a glimpse into how enterprise apps can evolve into AI-infused systems while keeping maintainability, privacy, and standards in mind.

That's a very interesting application of AI assistants and MCP. I'm looking for real-world examples of LangChain4j and, unfortunately, there aren't many. Most of those that abound on the web are just pretexts for contributors to demonstrate their commitment to the AI field.

But this application reminds me of a project, 10 years ago, at the Centre Georges Pompidou in Paris. At that time, AI wasn't, of course, the catchphrase it is today, and we were using something called Protégé, a so-called "ontology framework" based on a notation called OWL, which, among other things, proposed a new kind of web navigation.

I'm wondering what new opportunities these two technologies, AI and ontological systems, might bring to today's landscape?