OpenAPI Meets Qwen: AI-Powered API Docs with Quarkus, LangChain4j & Ollama

Build dynamic APIs in dev and let Qwen do the documentation for you.

This tutorial shows how to dynamically generate API documentation using Quarkus, LangChain4j, the Qwen model from Ollama, and the OpenAPI output from Quarkus. In dev mode, the docs are generated on the fly. It's fast, flexible, and a potential productivity booster.

If you want to jump right in, clone the complete tutorial application from my GitHub repository.

Project Setup and Qwen Integration

We start by scaffolding a new Quarkus app and wiring up the Ollama integration.

quarkus create app com.example.docgen:openapi-ollama-doc-generator \
    --extension='quarkus-rest-jackson,quarkus-smallrye-openapi,quarkus-jackson,quarkus-rest-client-jackson,quarkus-cache,quarkus-langchain4j-ollama'
cd openapi-ollama-doc-generator

Next, configure application.properties to point to the Qwen model. If you have a local Ollama running, Quarkus automatically uses that. Otherwise, it spins up a Dev Services container with the model. Because local models can respond slowly, let's also raise the timeout.

quarkus.langchain4j.ollama.chat-model.model-name=qwen:7b-chat
quarkus.langchain4j.ollama.timeout=120s

With this in place, you're ready to talk to a local LLM that can generate API documentation based on your OpenAPI spec.

Exposing the API with OpenAPI Annotations

Now let's create a simple ProductResource class and annotate it for OpenAPI generation.

Your POJO goes in Product.java with standard schema annotations for the fields.

package com.example.docgen;

import org.eclipse.microprofile.openapi.annotations.media.Schema;

@Schema(description = "Represents a product in the inventory.")

public class Product {

@Schema(description = "Unique identifier of the product", example = "a1b2c3d4-e5f6-7890-1234-567890abcdef")

public String id;

@Schema(description = "Name of the product", example = "Wireless Headphones")

public String name;

@Schema(description = "Price of the product", example = "199.99")

public float price;

@Schema(description = "Detailed description of the product", nullable = true, example = "High-fidelity audio with noise-cancelling features.")

public String description;

public Product() {

}

public Product(String id, String name, float price, String description) {

this.id = id;

this.name = name;

this.price = price;

this.description = description;

}

// Getters and setters

public String getId() {

return id;

}

public void setId(String id) {

this.id = id;

}

public String getName() {

return name;

}

public void setName(String name) {

this.name = name;

}

public float getPrice() {

return price;

}

public void setPrice(float price) {

this.price = price;

}

public String getDescription() {

return description;

}

public void setDescription(String description) {

this.description = description;

}

@Override

public String toString() {

return "Product{" +

"id='" + id + '\'' +

", name='" + name + '\'' +

", price=" + price +

", description='" + description + '\'' +

'}';

}

}

Then, your REST endpoint in ProductResource.java exposes three operations: list all products, get one by ID, and create a new one.

package com.example.docgen;

import java.util.ArrayList;

import java.util.List;

import java.util.UUID;

import java.util.concurrent.ConcurrentHashMap;

import org.eclipse.microprofile.openapi.annotations.OpenAPIDefinition;

import org.eclipse.microprofile.openapi.annotations.Operation;

import org.eclipse.microprofile.openapi.annotations.info.Info;

import org.eclipse.microprofile.openapi.annotations.media.Content;

import org.eclipse.microprofile.openapi.annotations.media.Schema;

import org.eclipse.microprofile.openapi.annotations.parameters.Parameter;

import org.eclipse.microprofile.openapi.annotations.parameters.RequestBody;

import org.eclipse.microprofile.openapi.annotations.responses.APIResponse;

import org.eclipse.microprofile.openapi.annotations.responses.APIResponses;

import org.eclipse.microprofile.openapi.annotations.tags.Tag;

import jakarta.ws.rs.Consumes;

import jakarta.ws.rs.GET;

import jakarta.ws.rs.POST;

import jakarta.ws.rs.Path;

import jakarta.ws.rs.PathParam;

import jakarta.ws.rs.Produces;

import jakarta.ws.rs.core.MediaType;

import jakarta.ws.rs.core.Response;

// Basic OpenAPI Definition for the whole API

@OpenAPIDefinition(info = @Info(title = "Product Catalog API", version = "1.0.0", description = "A simple API for managing products in an inventory."))

@Path("/products")

@Produces(MediaType.APPLICATION_JSON)

@Consumes(MediaType.APPLICATION_JSON)

@Tag(name = "Product Management", description = "Operations related to product catalog.")

public class ProductResource {

private static ConcurrentHashMap<String, Product> products = new ConcurrentHashMap<>();

static {

Product laptop = new Product(UUID.randomUUID().toString(), "Laptop Pro", 1899.99f,

"High-performance laptop for professional use.");

Product mouse = new Product(UUID.randomUUID().toString(), "Ergo Mouse", 49.99f,

"Ergonomic wireless mouse with advanced tracking.");

products.put(laptop.getId(), laptop);

products.put(mouse.getId(), mouse);

}

@GET

@Operation(summary = "Retrieve all products", description = "Returns a comprehensive list of all products currently in the catalog.")

@APIResponses(value = {

@APIResponse(responseCode = "200", description = "Successfully retrieved list of products", content = @Content(mediaType = MediaType.APPLICATION_JSON, schema = @Schema(implementation = Product[].class)))

})

public List<Product> getAllProducts() {

return new ArrayList<>(products.values());

}

@GET

@Path("/{id}")

@Operation(summary = "Retrieve a product by ID", description = "Fetches a single product's details using its unique identifier.")

@APIResponses(value = {

@APIResponse(responseCode = "200", description = "Product found and returned", content = @Content(mediaType = MediaType.APPLICATION_JSON, schema = @Schema(implementation = Product.class))),

@APIResponse(responseCode = "404", description = "Product with the specified ID not found")

})

public Response getProductById(

@Parameter(description = "Unique ID of the product to retrieve", required = true, example = "a1b2c3d4-e5f6-7890-1234-567890abcdef") @PathParam("id") String id) {

return products.values().stream()

.filter(p -> p.getId().equals(id))

.findFirst()

.map(p -> Response.ok(p).build())

.orElse(Response.status(Response.Status.NOT_FOUND).build());

}

@POST

@Operation(summary = "Create a new product", description = "Adds a new product to the catalog with a generated ID.")

@APIResponses(value = {

@APIResponse(responseCode = "201", description = "Product created successfully", content = @Content(mediaType = MediaType.APPLICATION_JSON, schema = @Schema(implementation = Product.class))),

@APIResponse(responseCode = "400", description = "Invalid product data provided")

})

public Response createProduct(

@RequestBody(description = "Product object to be created", required = true, content = @Content(mediaType = MediaType.APPLICATION_JSON, schema = @Schema(implementation = Product.class))) Product product) {

if (product.getName() == null || product.getName().isEmpty() || product.getPrice() <= 0) {

return Response.status(Response.Status.BAD_REQUEST).entity("Product name and price are required and valid.")

.build();

}

product.setId(UUID.randomUUID().toString()); // Assign a new ID

products.put(product.getId(), product);

System.out.println("Creating product: " + product);

return Response.status(Response.Status.CREATED).entity(product).build();

}

}

With quarkus-smallrye-openapi, Quarkus automatically serves the spec generated from your annotated endpoints at /q/openapi, in YAML or JSON. Run the app:

./mvnw quarkus:dev

Then check http://localhost:8080/q/openapi in your browser.

You’ve now got machine-readable API metadata ready for your LLM.
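If you prefer to check the endpoint from code rather than the browser, the following is a minimal sketch using the JDK's built-in HttpClient. It assumes the app is running in dev mode on http://localhost:8080 and relies on the format=json query parameter to request JSON instead of the default YAML. The class name OpenApiSpecCheck is only illustrative and not part of the tutorial repository.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

public class OpenApiSpecCheck {

    // Builds a GET request for the OpenAPI endpoint.
    // asJson = true appends ?format=json; otherwise the default (YAML) is requested.
    static HttpRequest buildRequest(String baseUrl, boolean asJson) {
        String url = baseUrl + "/q/openapi" + (asJson ? "?format=json" : "");
        return HttpRequest.newBuilder(URI.create(url)).GET().build();
    }

    public static void main(String[] args) throws InterruptedException {
        // Assumption: the Quarkus app from this tutorial is running locally.
        HttpRequest request = buildRequest("http://localhost:8080", true);
        try {
            HttpResponse<String> response = HttpClient.newHttpClient()
                    .send(request, HttpResponse.BodyHandlers.ofString());
            System.out.println("Status: " + response.statusCode());
            System.out.println(response.body());
        } catch (IOException e) {
            System.err.println("Could not reach the app. Is it running in dev mode? " + e);
        }
    }
}
```

A 200 status with a document that lists the /products paths suggests the spec is ready to be handed to the model.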

Using LangChain4j to Generate Docs

Create the OpenApiDocGenerator.java interface and annotate it with @RegisterAiService. The @SystemMessage describes the LLM’s persona: a technical writer who outputs Markdown. The @UserMessage passes the OpenAPI spec as a string.

package com.example.docgen;

import dev.langchain4j.service.SystemMessage;

import dev.langchain4j.service.UserMessage;

import io.quarkiverse.langchain4j.RegisterAiService;

@RegisterAiService

public interface OpenApiDocGenerator {

@SystemMessage("""

You are an expert technical writer specializing in API documentation.

Your task is to take an OpenAPI (Swagger) specification provided in YAML or JSON format

and generate clear, concise, and helpful documentation for a human user.

Focus on providing explanations, practical usage examples (like `curl` commands),

and clarifying complex parts.

The output MUST be in well-formatted Markdown.

Ensure all endpoints, their HTTP methods, path parameters, query parameters,

request bodies (with example JSON), and possible responses (with example JSON)

are clearly described.

Start with an 'Overview' section, then a 'Getting Started' section (e.g., base URL, authentication if applicable - though not in this spec),

and then list each API endpoint with its details.

""")

@UserMessage("""

Generate comprehensive documentation for the following OpenAPI specification.

OpenAPI Spec:

```

{openApiSpec}

```

""")

String generateDocumentation(String openApiSpec);

}

LangChain4j handles the rest: it binds the interface to your Ollama model and returns the model's answer as a String.

This is where the magic happens: your OpenAPI YAML becomes readable, Markdown-based documentation with examples and descriptions.
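To make the {openApiSpec} placeholder less mysterious, here is a deliberately naive sketch of what template substitution amounts to conceptually. It is not how the Quarkus LangChain4j extension is implemented; the class PromptTemplateSketch and its render method are hypothetical and only illustrate that the method argument ends up inside the user message text.

```java
import java.util.Map;

public class PromptTemplateSketch {

    // Replaces each {name} placeholder with the matching value.
    // Placeholders without a matching variable are left untouched.
    static String render(String template, Map<String, String> variables) {
        String result = template;
        for (Map.Entry<String, String> entry : variables.entrySet()) {
            result = result.replace("{" + entry.getKey() + "}", entry.getValue());
        }
        return result;
    }

    public static void main(String[] args) {
        String template = """
                Generate comprehensive documentation for the following OpenAPI specification.
                OpenAPI Spec:
                {openApiSpec}
                """;
        // A tiny stand-in for the real spec fetched from /q/openapi.
        String spec = "openapi: 3.0.3\ninfo:\n  title: Product Catalog API";
        System.out.println(render(template, Map.of("openApiSpec", spec)));
    }
}
```

The practical takeaway is that the whole spec travels inside the prompt, so a large API can produce a long prompt and a correspondingly slow response from a local model.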

Dynamic Doc Generation

We use Quarkus’s MicroProfile REST Client to call the /q/openapi endpoint, and cache the results to avoid repeated calls to the LLM.

Your REST client interface (OpenApiRestClient) fetches the spec.

package com.example.docgen;

import jakarta.ws.rs.GET;

import jakarta.ws.rs.Path;

import jakarta.ws.rs.Produces;

import jakarta.ws.rs.QueryParam;

import jakarta.ws.rs.core.MediaType;

import org.eclipse.microprofile.rest.client.inject.RegisterRestClient;

@RegisterRestClient(baseUri = "http://localhost:8080") // Points to our own application

@Path("/q/openapi")

public interface OpenApiRestClient {

@GET

@Produces("application/yaml") // Quarkus's default OpenAPI format is YAML

String getOpenApiYaml();

@GET

@Produces(MediaType.APPLICATION_JSON)

String getOpenApiJson(@QueryParam("format") String format); // Allows requesting JSON explicitly

}

Your DocumentationResource reads the spec, calls the AI service, and returns the result at /docs.

package com.example.docgen;

import jakarta.inject.Inject;

import jakarta.ws.rs.GET;

import jakarta.ws.rs.Path;

import jakarta.ws.rs.Produces;

import jakarta.ws.rs.core.MediaType;

import jakarta.ws.rs.core.Response;

import org.eclipse.microprofile.rest.client.inject.RestClient;

import io.quarkus.cache.CacheResult; // For caching

@Path("/docs")

public class DocumentationResource {

@Inject

OpenApiDocGenerator openApiDocGenerator; // Our LLM service

@Inject

@RestClient // Our REST client to fetch /q/openapi

OpenApiRestClient openApiRestClient;

/**

* Generates and returns OpenAPI documentation in Markdown format.

* Caches the result to avoid repeated LLM calls during development.

*/

@GET

@Produces(MediaType.TEXT_PLAIN) // Markdown is plain text

@CacheResult(cacheName = "api-docs-cache") // Cache the generated documentation

public Response getGeneratedDocumentation() {

try {

// Fetch the live OpenAPI spec from Quarkus's endpoint

String openApiSpec = openApiRestClient.getOpenApiYaml();

System.out.println("Fetched OpenAPI Spec (first 200 chars): "

+ openApiSpec.substring(0, Math.min(openApiSpec.length(), 200)) + "...");

// Use LangChain4j to generate documentation using Qwen

String generatedDocs = openApiDocGenerator.generateDocumentation(openApiSpec);

return Response.ok(generatedDocs).build();

} catch (Exception e) {

e.printStackTrace();

return Response.serverError().entity("Error generating documentation: " + e.getMessage()).build();

}

}

}

You now have dynamic, AI-generated docs at:

http://localhost:8080/docs

The first request might take a while; all subsequent ones are served from the cache. You can configure the cache in application.properties. Learn more about Quarkus caching in this detailed guide.

quarkus.cache.caffeine.api-docs-cache.initial-capacity=10
quarkus.cache.caffeine.api-docs-cache.maximum-size=1

Improve Documentation Display with a Web Page

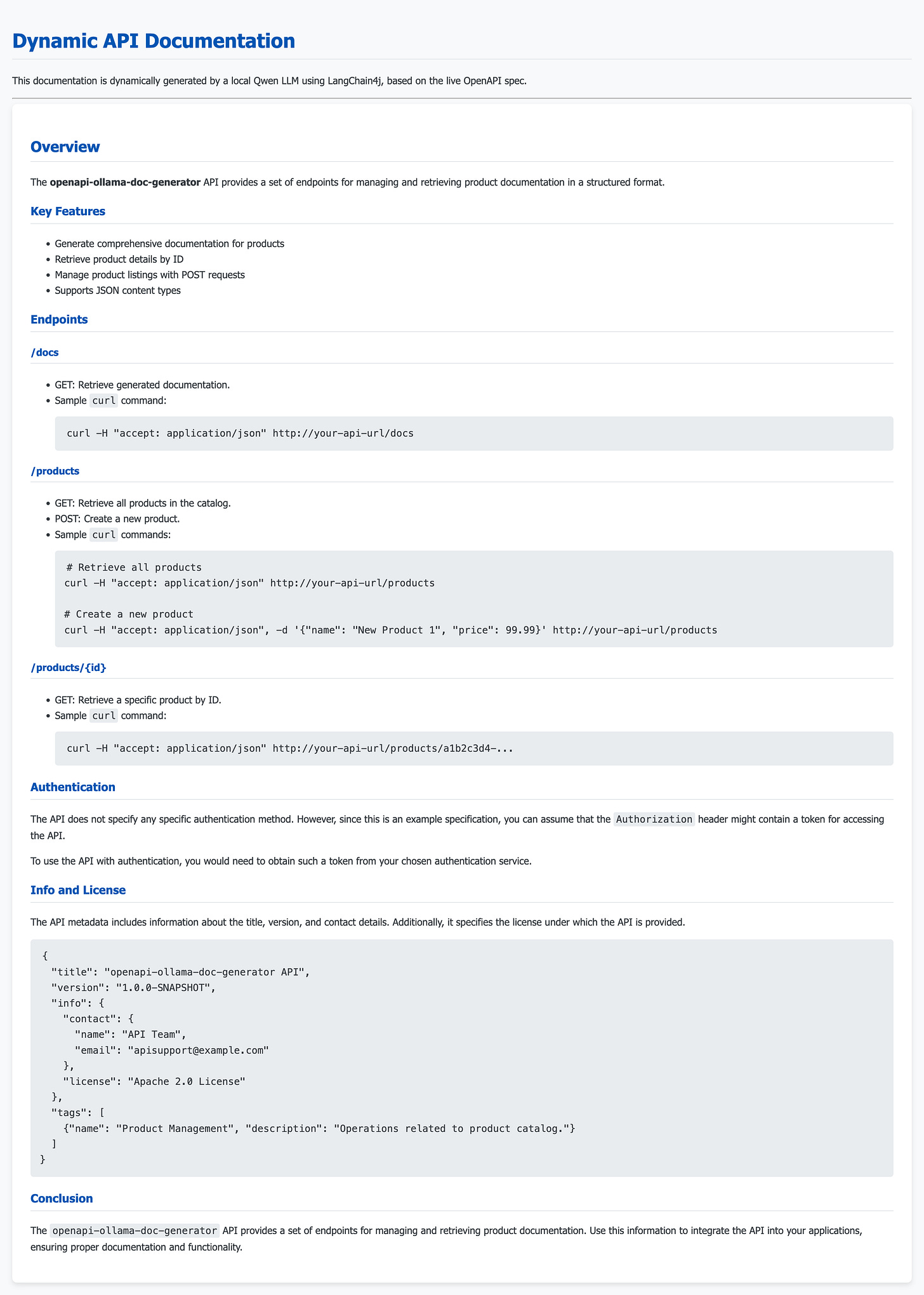

Serving raw Markdown is functional but not very user-friendly. Let's create a simple HTML page that uses a JavaScript library to render the Markdown from our /docs endpoint into a nicely formatted web page.

Create src/main/resources/META-INF/resources/index.html:

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8">

<meta name="viewport" content="width=device-width, initial-scale=1.0">

<title>API Documentation</title>

<script src="https://cdn.jsdelivr.net/npm/marked/marked.min.js"></script>

<style>

/* omitted for brevity, check repo */

</style>

</head>

<body>

<h1>Dynamic API Documentation</h1>

<p>This documentation is dynamically generated by a local Qwen LLM using LangChain4j, based on the live OpenAPI spec.</p>

<hr/>

<div id="markdown-content">Loading documentation...</div>

<script>

// Fetch the markdown from our /docs endpoint and render it

fetch('/docs')

.then(response => {

if (!response.ok) {

throw new Error(`HTTP error! status: ${response.status}`);

}

return response.text();

})

.then(markdown => {

// Render the Markdown using Marked.js

document.getElementById('markdown-content').innerHTML = marked.parse(markdown);

})

.catch(error => {

console.error('Error fetching or parsing documentation:', error);

document.getElementById('markdown-content').innerHTML = '<p style="color: red;">Error loading documentation. Please check your console and ensure Ollama is running with the Qwen model.</p>';

});

</script>

</body>

</html>

Once the application starts, open your browser and navigate to: http://localhost:8080/

You should now see a nicely rendered web page displaying your API documentation. The first request to /docs (triggered by the fetch in index.html) might take some time as it involves an LLM call to Ollama. Subsequent requests, or page refreshes, will be much faster thanks to the @CacheResult annotation.
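The caching behaviour described above can be pictured with a small plain-Java sketch. This is only an illustration of the "compute once, reuse afterwards" idea, not the Quarkus cache implementation; the class DocCacheSketch and the fake generator are hypothetical stand-ins for the annotated resource method and the Ollama call.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Supplier;

public class DocCacheSketch {

    private final ConcurrentHashMap<String, String> cache = new ConcurrentHashMap<>();

    // Returns the cached value for the key and invokes the slow generator only on a miss.
    String getOrGenerate(String key, Supplier<String> slowGenerator) {
        return cache.computeIfAbsent(key, k -> slowGenerator.get());
    }

    public static void main(String[] args) {
        AtomicInteger llmCalls = new AtomicInteger();
        Supplier<String> fakeLlm = () -> {
            llmCalls.incrementAndGet(); // stands in for the expensive Ollama call
            return "# Product Catalog API";
        };

        DocCacheSketch docs = new DocCacheSketch();
        docs.getOrGenerate("api-docs", fakeLlm); // miss: generator runs
        docs.getOrGenerate("api-docs", fakeLlm); // hit: generator is skipped
        System.out.println("LLM calls: " + llmCalls.get()); // prints "LLM calls: 1"
    }
}
```

One consequence worth checking in your own setup: the resource method in this tutorial catches exceptions and returns an error Response, which is a normal return value, so a failed generation may end up cached as well until the entry is evicted or the app restarts.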

LLM Prompt Engineering for Better Docs

The quality of the generated documentation heavily depends on the prompt you provide. Don't be afraid to iterate!

Be Specific: If the initial output is too brief, tell Qwen to be "detailed and verbose."

Request Specific Sections: Ask for sections like "Authentication," "Error Codes," or "Versioning."

Guide Examples: Instruct the LLM on the format of code examples (e.g., "Provide curl commands and Java HttpClient snippets").

Control Tone and Style: Ask it to write in a "formal, technical, and approachable tone."

Model Choice: If qwen:7b-chat isn't sufficient for complex documentation, consider larger Qwen models (e.g., qwen:14b-chat, if your hardware allows) or other models available on Ollama.
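When iterating on the prompt, it can help to keep the individual instructions as separate fragments and assemble them while you experiment. The sketch below is one hypothetical way to draft such a prompt; SystemPromptSketch and compose are illustrative names. Because annotation values must be compile-time constants, the assembled text is meant to be pasted into the @SystemMessage once you are happy with it, not passed in at runtime.

```java
import java.util.List;

public class SystemPromptSketch {

    // The persona line taken from the tutorial's system message.
    static final String BASE = "You are an expert technical writer specializing in API documentation.";

    // Appends each extra instruction as its own bullet line below the persona.
    static String compose(List<String> extraInstructions) {
        StringBuilder prompt = new StringBuilder(BASE);
        for (String instruction : extraInstructions) {
            prompt.append("\n- ").append(instruction);
        }
        return prompt.toString();
    }

    public static void main(String[] args) {
        String draft = compose(List.of(
                "Be detailed and verbose.",
                "Include sections for Authentication, Error Codes, and Versioning.",
                "Provide curl commands and Java HttpClient snippets.",
                "Write in a formal, technical, and approachable tone."));
        System.out.println(draft);
    }
}
```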

Scalability and Production Readiness

While this tutorial focuses on dynamic generation for development, for a production environment, you would typically:

Pre-generate Docs at Build-Time: The dynamic LLM call introduces latency and a dependency on Ollama at runtime. For production, you'd integrate the documentation generation into your CI/CD pipeline (e.g., as a Maven build step), generating a static Markdown file that's then served. This avoids runtime LLM calls and associated resource consumption.

Security: If your /docs endpoint is publicly exposed, consider authentication or limiting access to it (e.g., only from internal networks).

Version Control: The generated Markdown file (if you opt for build-time generation) should be committed to your Git repository, ensuring documentation is versioned alongside your code.

Going Further

This setup gives you a solid starting point for documentation generation. But there’s more you can do:

Switch Models: Experiment with different Ollama models if Qwen isn’t expressive enough.

CI/CD Integration: Turn the doc generation into a CI/CD step by creating a command-line app that takes an openapi.json and turns it into static documentation.
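The command-line idea could start from something like the following sketch. It assumes the spec has already been exported to a file and keeps the generator pluggable, so the LLM call is replaced by a placeholder function here. StaticDocsCli and its argument layout are hypothetical; a real build step would plug in the AI service from this tutorial instead.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.function.Function;

public class StaticDocsCli {

    // Reads the spec file, passes its content to the generator, and writes the Markdown result.
    static void generate(Path specFile, Path outputFile, Function<String, String> generator)
            throws IOException {
        String spec = Files.readString(specFile);
        String markdown = generator.apply(spec);
        Files.writeString(outputFile, markdown);
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 2) {
            System.err.println("Usage: StaticDocsCli <openapi.json> <output.md>");
            return;
        }
        // Placeholder generator: a real pipeline would call the LLM-backed service here.
        Function<String, String> placeholder =
                spec -> "# API Documentation\n\nSpec size: " + spec.length() + " characters\n";
        generate(Path.of(args[0]), Path.of(args[1]), placeholder);
    }
}
```

Keeping the generator behind a Function makes the file handling testable without a running model, and the resulting Markdown file can be committed or published like any other build artifact.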

Conclusion

You now have an LLM-powered documentation workflow that adapts to your environment.

With Quarkus, Ollama, and LangChain4j, generating Markdown documentation straight from your OpenAPI spec can even become part of your build pipeline. No online API tools. No manual editing. Just consistent, AI-powered output that stays in sync with your code.