Semantic Caching for Java: Turbocharge Your Quarkus + LangChain4j LLM Apps

Learn how to add fast, meaning-aware caching to your local LLM workflows using pgvector, embeddings, and a clean proxy wrapper pattern. A practical hands-on tutorial for Java developers.

I’ve always loved caching. It was one of the first “real” performance tricks I learned as a junior developer. The first time I wrapped an expensive calculation in a simple HashMap and saw the latency drop from seconds to milliseconds, it felt like magic.

It also made me feel clever, which is a dangerous thing to give a young developer.

But caching only looks simple from the outside. The moment you scratch beneath the surface, the hard questions appear.

How long is the data valid? What counts as the same input? When do you invalidate entries? What happens if the underlying system changes?

These questions follow you throughout your career, whether you’re building APIs, microservices, or distributed systems.

Working with LLMs makes all of this ten times harder.

With traditional caching, the basic contract is predictable:

same input → same output.

LLMs break that rule immediately.

Two prompts that look different may mean the same thing.

Two prompts that look almost identical may produce very different answers, especially if temperature or system messages change.

And even if everything is identical, the model might still produce a slightly different response because sampling is probabilistic.

On top of that, your application rarely sends raw prompts. It sends prompts influenced by hyperparameters, system messages, conversation history, or user context. Suddenly the meaning of “cache key” becomes a philosophical exercise.

All of this leads to one conclusion:

Caching LLM responses isn’t just a technical problem. It’s a semantic problem.

That’s why semantic caching is so interesting. It doesn’t try to force LLMs into deterministic boxes. Instead, it embraces their shape-shifting nature by comparing meaning, not text. And that lets us reuse expensive LLM calls even when surface-level prompts differ.

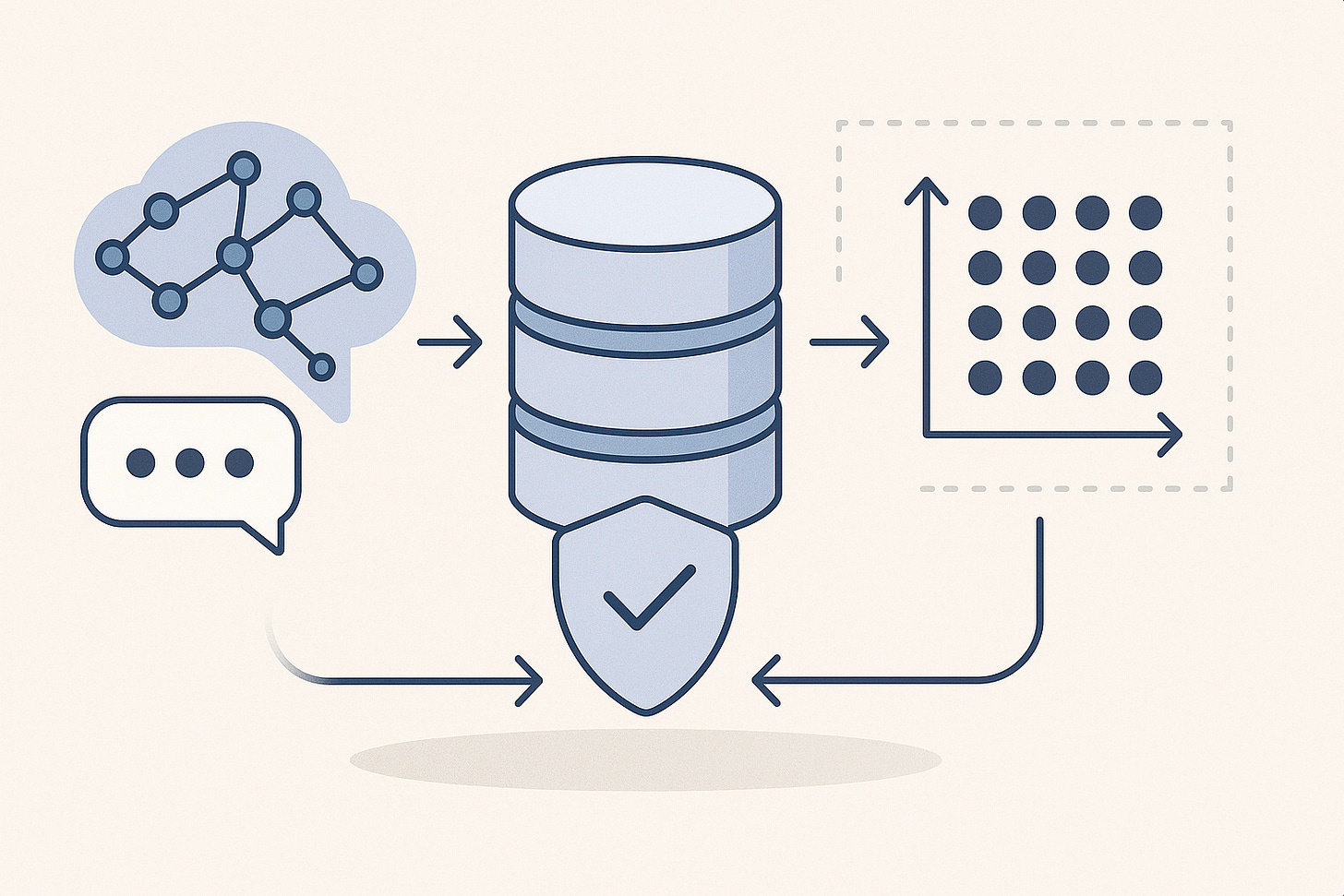

In this article, we’ll build a complete semantic caching layer in Quarkus using LangChain4j, pgvector, Ollama, and a dynamic proxy that wraps your AI service without requiring any changes to its interface.

You’ll learn the full chain: extracting prompts, embedding them, storing vectors, comparing similarity, and returning cached responses when appropriate.

This isn’t about creating a “perfect” cache.

It’s about understanding the mechanics well enough that you can shape your own strategy—one that fits how your application thinks, how your users behave, and how your model interprets meaning.

Let’s get started.

What You Will Build

You will create a semantic caching wrapper around LangChain4j AI services. The wrapper:

intercepts calls to your @RegisterAiService interface

extracts prompts from @UserMessage parameters

hashes prompts for exact-match lookup

embeds prompts and writes vectors to PostgreSQL using pgvector

retrieves cached responses based on similarity score

stores LLM responses as JSON

allows configurable TTL, embedding model, and strategy

supports per-user namespacing

supports cache invalidation and statistics

The important part: You do not modify your AI service interface at all.

The wrapper sits around it like a transparent layer.

Prerequisites and Setup

Requirements:

Java 21+

Quarkus 3.29+ (or latest)

PostgreSQL with pgvector installed

Basic familiarity with LangChain4j AI services

Podman (or Docker) for running Quarkus Dev Services

Before writing a single line of code, create a Quarkus project that includes all necessary extensions. If you are just after the code, look at the corresponding GitHub repository.

Create the project with required extensions

```shell
quarkus create app com.example.ai.cache:semantic-cache-demo \
    --extensions='langchain4j-ollama,langchain4j-pgvector,jdbc-postgresql,hibernate-orm-panache,rest-jackson'
cd semantic-cache-demo
```

Why these extensions:

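langchain4j-ollama – core AI services for Ollama models

langchain4j-pgvector – pgvector support for PostgreSQL

jdbc-postgresql – JDBC datasource for the cache store

hibernate-orm-panache – Hibernate ORM with Panache (the cache store below talks to the database through plain JDBC)

rest-jackson – JSON serialization for REST resources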

Add Apache Commons Codec to your pom.xml:

```xml
<dependency>
    <groupId>commons-codec</groupId>
    <artifactId>commons-codec</artifactId>
    <version>1.20.0</version>
</dependency>
```

You now have a ready-to-build Quarkus AI project.

Configure Quarkus

In application.properties:

```properties
# Database kind is not strictly necessary if you only have one datasource,
# but I like to keep it for clarity.
quarkus.datasource.db-kind=postgresql

# We'll use the Granite 4 model locally
quarkus.langchain4j.ollama.chat-model.model-name=granite4:latest

# And the corresponding Granite embedding model
quarkus.langchain4j.ollama.embedding-model.model-name=granite-embedding:30m

# Embedding vector dimension (must match the embedding model's output size)
quarkus.langchain4j.pgvector.dimension=384

# If you want to see what is going on, set these to true.
quarkus.langchain4j.log-requests=false
quarkus.langchain4j.log-responses=false
```

These properties configure Postgres and select Granite models for chat and embeddings. They also expose request/response logging switches, disabled here, which you can turn on to inspect how your semantic cache interacts with the LLM.

Step-by-Step Implementation

We now walk through the implementation. Each component has its purpose and role.

SemanticCacheConfig

Controls caching behavior per AI service instance. This immutable configuration class defines the similarity threshold for semantic matching, cache entry TTL, embedding model selection, and caching strategy (exact match, semantic similarity, or hybrid).

Instances are created using the builder pattern to configure cache parameters for different AI service use cases.

Create file: src/main/java/com/example/ai/cache/SemanticCacheConfig.java

```java
package com.example.ai.cache;

import java.time.Duration;

public class SemanticCacheConfig {

    private final boolean enabled;
    private final double similarityThreshold;
    private final Duration ttl;
    private final String embeddingModelName;
    private final CacheStrategy strategy;

    private SemanticCacheConfig(Builder builder) {
        this.enabled = builder.enabled;
        this.similarityThreshold = builder.similarityThreshold;
        this.ttl = builder.ttl;
        this.embeddingModelName = builder.embeddingModelName;
        this.strategy = builder.strategy;
    }

    public static Builder builder() {
        return new Builder();
    }

    public boolean isEnabled() {
        return enabled;
    }

    public double getSimilarityThreshold() {
        return similarityThreshold;
    }

    public Duration getTtl() {
        return ttl;
    }

    public String getEmbeddingModelName() {
        return embeddingModelName;
    }

    public CacheStrategy getStrategy() {
        return strategy;
    }

    public static class Builder {
        private boolean enabled = true;
        private double similarityThreshold = 0.85;
        private Duration ttl = Duration.ofHours(1);
        private String embeddingModelName = "default";
        private CacheStrategy strategy = CacheStrategy.SEMANTIC_ONLY;

        public Builder enabled(boolean enabled) {
            this.enabled = enabled;
            return this;
        }

        public Builder similarityThreshold(double threshold) {
            this.similarityThreshold = threshold;
            return this;
        }

        public Builder ttl(Duration ttl) {
            this.ttl = ttl;
            return this;
        }

        public Builder embeddingModelName(String name) {
            this.embeddingModelName = name;
            return this;
        }

        public Builder strategy(CacheStrategy strategy) {
            this.strategy = strategy;
            return this;
        }

        public SemanticCacheConfig build() {
            return new SemanticCacheConfig(this);
        }
    }

    public enum CacheStrategy {
        EXACT_MATCH_ONLY, // Fast hash-based lookup only
        SEMANTIC_ONLY,    // Vector similarity only
        HYBRID            // Try exact match first, then semantic
    }
}
```

This is your hyperparameter control center:

similarity threshold

TTL

strategy (exact, semantic, hybrid)

embedding model name

Subtle changes to these values influence cache behavior:

Too high threshold → fewer hits.

Too low threshold → incorrect matches.

Short TTL → fresh data, less caching.

Long TTL → stale data but higher hit rate.

The builder pattern makes it simple to experiment.
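The threshold trade-off is easier to understand with numbers. The JDK-only sketch below reproduces the decision the semantic lookup makes: compute the cosine similarity between two embedding vectors, then reuse the cached entry only if the score reaches the configured threshold. `ThresholdDemo` is a hypothetical helper name. The three-dimensional vectors in the usage note are made-up toys, not real `granite-embedding` output, which has 384 dimensions.

```java
import java.util.Objects;

/** Hypothetical helper illustrating how similarityThreshold gates a semantic cache hit. */
final class ThresholdDemo {

    private ThresholdDemo() {
    }

    /** Cosine similarity in [-1, 1]; pgvector's <=> operator returns 1 minus this value. */
    static double cosineSimilarity(float[] a, float[] b) {
        Objects.requireNonNull(a);
        Objects.requireNonNull(b);
        if (a.length != b.length) {
            throw new IllegalArgumentException("Dimension mismatch: " + a.length + " vs " + b.length);
        }
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.length; i++) {
            dot += (double) a[i] * b[i];
            normA += (double) a[i] * a[i];
            normB += (double) b[i] * b[i];
        }
        if (normA == 0 || normB == 0) {
            return 0.0; // this sketch treats zero vectors as dissimilar
        }
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    /** A cached entry is reused only if its similarity reaches the threshold. */
    static boolean isHit(float[] query, float[] cached, double threshold) {
        return cosineSimilarity(query, cached) >= threshold;
    }
}
```

Take the query `{1, 0, 0}` and the cached vector `{1, 0.5, 0}`. Their similarity is about 0.894, so the lookup is a hit at the default threshold of 0.85. Raise the threshold to 0.95 and the same lookup becomes a miss.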

SemanticCacheStore Interface

Defines how the cache is used, not how it is persisted. This interface abstracts the semantic cache operations (lookup, store, clear, eviction, and statistics). That allows different storage backends (e.g., PostgreSQL, Redis) to be implemented while keeping the caching logic independent of the underlying persistence mechanism.

Create file: src/main/java/com/example/ai/cache/store/SemanticCacheStore.java

```java
package com.example.ai.cache.store;

import java.util.Optional;

import com.example.ai.cache.CacheStatistics;
import com.example.ai.cache.CachedResponse;
import com.example.ai.cache.SemanticCacheConfig;

public interface SemanticCacheStore {

    /**
     * Look up a cached response based on semantic similarity
     */
    Optional<CachedResponse> lookup(
            String prompt,
            String llmString,
            SemanticCacheConfig config);

    /**
     * Store a response in the cache
     */
    void store(
            String prompt,
            String llmString,
            Object response,
            SemanticCacheConfig config);

    /**
     * Clear all cache entries
     */
    void clear();

    /**
     * Clear expired entries
     */
    void evictExpired();

    /**
     * Get cache statistics
     */
    CacheStatistics getStatistics();
}
```

DTOs for Responses and Statistics

The CacheStatistics class is a thread-safe statistics tracker for semantic cache operations. It uses atomic operations to track cache hits (exact and semantic), misses, operation timing, and entry counts. It also calculates derived metrics like hit rates and requests per second, and provides immutable snapshots for consistent reporting in concurrent environments.

Shortened here. Go grab the full implementation from my GitHub!

Create file: src/main/java/com/example/ai/cache/CacheStatistics.java

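```java
package com.example.ai.cache;

import java.time.Instant;
import java.util.concurrent.atomic.AtomicLong;

public class CacheStatistics {

    private final AtomicLong totalHits = new AtomicLong(0);
    private final AtomicLong totalMisses = new AtomicLong(0);
    private final AtomicLong exactMatchHits = new AtomicLong(0);
    private final AtomicLong semanticMatchHits = new AtomicLong(0);
    private final AtomicLong totalEntries = new AtomicLong(0);
    private final AtomicLong totalLookupTimeMs = new AtomicLong(0);
    private final AtomicLong totalStoreTimeMs = new AtomicLong(0);
    private final Instant startTime = Instant.now();

    public void recordHit(boolean exactMatch) {
        totalHits.incrementAndGet();
        if (exactMatch) {
            exactMatchHits.incrementAndGet();
        } else {
            semanticMatchHits.incrementAndGet();
        }
    }

    public void recordMiss() {
        totalMisses.incrementAndGet();
    }

    public void recordLookupTime(long millis) {
        totalLookupTimeMs.addAndGet(millis);
    }

    public void recordStoreTime(long millis) {
        totalStoreTimeMs.addAndGet(millis);
    }

    public void incrementEntryCount() {
        totalEntries.incrementAndGet();
    }

    // Overwrites the running count with the authoritative value from the database
    public void updateEntryCount(long count) {
        totalEntries.set(count);
    }

    public double getHitRate() {
        long hits = totalHits.get();
        long total = hits + totalMisses.get();
        return total == 0 ? 0.0 : (double) hits / total;
    }

    // Other getters, requests-per-second, and snapshot methods omitted...
}
```

The CachedResponse class is an immutable value object representing a cached AI service response with matching metadata. It contains the cached response, creation timestamp, similarity score, the matched prompt, and whether the match was exact or semantic. This makes the quality and relevance of each cache hit visible.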

Create file: src/main/java/com/example/ai/cache/CachedResponse.java

```java
package com.example.ai.cache;

import java.time.Instant;

public class CachedResponse {

    private final Object response;
    private final Instant createdAt;
    private final double similarityScore;
    private final String matchedPrompt;
    private final boolean exactMatch;

    public CachedResponse(Object response, Instant createdAt,
            double similarityScore, String matchedPrompt,
            boolean exactMatch) {
        this.response = response;
        this.createdAt = createdAt;
        this.similarityScore = similarityScore;
        this.matchedPrompt = matchedPrompt;
        this.exactMatch = exactMatch;
    }

    // Getters
    public Object getResponse() {
        return response;
    }

    public Instant getCreatedAt() {
        return createdAt;
    }

    public double getSimilarityScore() {
        return similarityScore;
    }

    public String getMatchedPrompt() {
        return matchedPrompt;
    }

    public boolean isExactMatch() {
        return exactMatch;
    }
}
```

PgVectorSemanticCacheStore

The heart of the cache: PostgreSQL-based implementation of SemanticCacheStore using pgvector for semantic similarity search. Provides exact-match lookups via SHA-256 hash comparison and semantic lookups through vector similarity search. Transforms prompts into embeddings, stores vectors in PostgreSQL, and queries for similar vectors. Stores responses as JSONB (supporting any LangChain4j return type), handles TTL expiration, and maintains cache statistics. Handles serialization/deserialization of responses and embedding model registration.

Create file: src/main/java/com/example/ai/cache/store/PgVectorSemanticCacheStore.java

```java
package com.example.ai.cache.store;

import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;

import org.apache.commons.codec.digest.DigestUtils;

import com.example.ai.cache.CacheStatistics;
import com.example.ai.cache.CachedResponse;
import com.example.ai.cache.SemanticCacheConfig;
import com.fasterxml.jackson.databind.ObjectMapper;

import dev.langchain4j.data.embedding.Embedding;
import dev.langchain4j.model.embedding.EmbeddingModel;
import io.agroal.api.AgroalDataSource;
import io.quarkus.logging.Log;
import jakarta.enterprise.context.ApplicationScoped;
import jakarta.inject.Inject;

@ApplicationScoped
public class PgVectorSemanticCacheStore implements SemanticCacheStore {

    @Inject
    AgroalDataSource dataSource;

    @Inject
    ObjectMapper objectMapper;

    private final CacheStatistics statistics = new CacheStatistics();

    // Map to hold multiple embedding models
    private final Map<String, EmbeddingModel> embeddingModels = new ConcurrentHashMap<>();

    public void registerEmbeddingModel(String name, EmbeddingModel model) {
        embeddingModels.put(name, model);
    }

    @Override
    public Optional<CachedResponse> lookup(
            String prompt,
            String llmString,
            SemanticCacheConfig config) {

        if (!config.isEnabled()) {
            return Optional.empty();
        }

        long startTime = System.currentTimeMillis();

        try {
            // Try exact match first for HYBRID and EXACT_MATCH_ONLY strategies
            if (config.getStrategy() == SemanticCacheConfig.CacheStrategy.HYBRID ||
                    config.getStrategy() == SemanticCacheConfig.CacheStrategy.EXACT_MATCH_ONLY) {

                Optional<CachedResponse> exactMatch = lookupExact(prompt, llmString, config);
                if (exactMatch.isPresent()) {
                    statistics.recordHit(true);
                    statistics.recordLookupTime(System.currentTimeMillis() - startTime);
                    return exactMatch;
                }

                if (config.getStrategy() == SemanticCacheConfig.CacheStrategy.EXACT_MATCH_ONLY) {
                    statistics.recordMiss();
                    statistics.recordLookupTime(System.currentTimeMillis() - startTime);
                    return Optional.empty();
                }
            }

            // Semantic search
            Optional<CachedResponse> semanticMatch = lookupSemantic(prompt, llmString, config);
            if (semanticMatch.isPresent()) {
                statistics.recordHit(false);
                statistics.recordLookupTime(System.currentTimeMillis() - startTime);
                return semanticMatch;
            }

            statistics.recordMiss();
            statistics.recordLookupTime(System.currentTimeMillis() - startTime);
            return Optional.empty();

        } catch (Exception e) {
            Log.error("Error looking up cache entry", e);
            statistics.recordMiss();
            statistics.recordLookupTime(System.currentTimeMillis() - startTime);
            return Optional.empty();
        }
    }

    private Optional<CachedResponse> lookupExact(
            String prompt,
            String llmString,
            SemanticCacheConfig config) throws SQLException {

        String promptHash = computeHash(prompt, llmString);

        String sql = """
                SELECT response_json, created_at, prompt
                FROM semantic_cache
                WHERE prompt_hash = ?
                  AND llm_string = ?
                  AND is_expired(created_at, ttl_seconds) = false
                LIMIT 1
                """;

        try (Connection conn = dataSource.getConnection();
                PreparedStatement stmt = conn.prepareStatement(sql)) {

            stmt.setString(1, promptHash);
            stmt.setString(2, llmString);

            ResultSet rs = stmt.executeQuery();
            if (rs.next()) {
                try {
                    Object response = deserializeResponse(rs.getString("response_json"));
                    return Optional.of(new CachedResponse(
                            response,
                            rs.getTimestamp("created_at").toInstant(),
                            1.0, // Perfect match
                            rs.getString("prompt"),
                            true));
                } catch (Exception e) {
                    Log.error("Error deserializing response", e);
                    return Optional.empty();
                }
            }
        }

        return Optional.empty();
    }

    private Optional<CachedResponse> lookupSemantic(
            String prompt,
            String llmString,
            SemanticCacheConfig config) throws SQLException {

        EmbeddingModel embeddingModel = embeddingModels.get(config.getEmbeddingModelName());
        if (embeddingModel == null) {
            Log.warn("Embedding model not found: " + config.getEmbeddingModelName());
            return Optional.empty();
        }

        // Generate embedding for the prompt
        Embedding embedding = embeddingModel.embed(prompt).content();
        float[] promptEmbedding = embedding.vector();

        // Use cosine similarity (1 - cosine distance)
        // Optimized: use CTE to bind vector once and reference it multiple times
        String sql = """
                WITH query_vector AS (
                    SELECT ?::vector AS vec
                )
                SELECT
                    response_json,
                    created_at,
                    prompt,
                    1 - (embedding <=> (SELECT vec FROM query_vector)) AS similarity
                FROM semantic_cache, query_vector
                WHERE llm_string = ?
                  AND 1 - (embedding <=> query_vector.vec) >= ?
                  AND is_expired(created_at, ttl_seconds) = false
                ORDER BY embedding <=> query_vector.vec
                LIMIT 1
                """;

        try (Connection conn = dataSource.getConnection();
                PreparedStatement stmt = conn.prepareStatement(sql)) {

            String vectorStr = vectorToString(promptEmbedding);
            stmt.setString(1, vectorStr); // Bind vector once
            stmt.setString(2, llmString);
            stmt.setDouble(3, config.getSimilarityThreshold());

            ResultSet rs = stmt.executeQuery();
            if (rs.next()) {
                try {
                    Object response = deserializeResponse(rs.getString("response_json"));
                    return Optional.of(new CachedResponse(
                            response,
                            rs.getTimestamp("created_at").toInstant(),
                            rs.getDouble("similarity"),
                            rs.getString("prompt"),
                            false));
                } catch (Exception e) {
                    Log.error("Error deserializing response", e);
                    return Optional.empty();
                }
            }
        }

        return Optional.empty();
    }

    @Override
    public void store(
            String prompt,
            String llmString,
            Object response,
            SemanticCacheConfig config) {

        if (!config.isEnabled()) {
            return;
        }

        long startTime = System.currentTimeMillis();

        try {
            EmbeddingModel embeddingModel = embeddingModels.get(config.getEmbeddingModelName());
            if (embeddingModel == null) {
                Log.warn("Embedding model not found: " + config.getEmbeddingModelName());
                return;
            }

            float[] embedding = embeddingModel.embed(prompt).content().vector();
            String promptHash = computeHash(prompt, llmString);
            String responseJson = serializeResponse(response);

            String sql = """
                    INSERT INTO semantic_cache
                        (prompt_hash, prompt, llm_string, embedding, response_json, ttl_seconds, created_at)
                    VALUES (?, ?, ?, ?::vector, ?::jsonb, ?, NOW())
                    ON CONFLICT (prompt_hash, llm_string)
                    DO UPDATE SET
                        response_json = EXCLUDED.response_json,
                        created_at = NOW()
                    """;

            try (Connection conn = dataSource.getConnection();
                    PreparedStatement stmt = conn.prepareStatement(sql)) {

                stmt.setString(1, promptHash);
                stmt.setString(2, prompt);
                stmt.setString(3, llmString);
                stmt.setString(4, vectorToString(embedding));
                stmt.setString(5, responseJson);
                stmt.setInt(6, (int) config.getTtl().getSeconds());

                stmt.executeUpdate();
                statistics.incrementEntryCount();
            }

            statistics.recordStoreTime(System.currentTimeMillis() - startTime);

        } catch (Exception e) {
            Log.error("Error storing cache entry", e);
            statistics.recordStoreTime(System.currentTimeMillis() - startTime);
        }
    }

    @Override
    public void clear() {
        try (Connection conn = dataSource.getConnection();
                Statement stmt = conn.createStatement()) {
            stmt.execute("TRUNCATE TABLE semantic_cache");
        } catch (SQLException e) {
            Log.error("Error clearing cache", e);
        }
    }

    @Override
    public void evictExpired() {
        String sql = "DELETE FROM semantic_cache WHERE is_expired(created_at, ttl_seconds) = true";

        try (Connection conn = dataSource.getConnection();
                Statement stmt = conn.createStatement()) {
            int deleted = stmt.executeUpdate(sql);
            Log.info("Evicted " + deleted + " expired cache entries");
        } catch (SQLException e) {
            Log.error("Error evicting expired entries", e);
        }
    }

    @Override
    public CacheStatistics getStatistics() {
        // Update entry count before returning
        updateEntryCount();
        return statistics;
    }

    public long getEntryCount() {
        try (Connection conn = dataSource.getConnection();
                Statement stmt = conn.createStatement()) {
            ResultSet rs = stmt.executeQuery(
                    "SELECT COUNT(*) FROM semantic_cache WHERE is_expired(created_at, ttl_seconds) = false");
            if (rs.next()) {
                return rs.getLong(1);
            }
        } catch (SQLException e) {
            Log.error("Error getting entry count", e);
        }
        return 0;
    }

    private void updateEntryCount() {
        long count = getEntryCount();
        statistics.updateEntryCount(count);
    }

    // Helper methods

    private String computeHash(String prompt, String llmString) {
        return DigestUtils.sha256Hex(prompt + "|" + llmString);
    }

    private String vectorToString(float[] vector) {
        if (vector == null || vector.length == 0) {
            return "[]";
        }

        // Pre-size StringBuilder: "[", "]", and commas = 2 + (length-1)
        // Average float string length ~8 chars, but we'll be conservative
        StringBuilder sb = new StringBuilder(vector.length * 10);
        sb.append('[');
        sb.append(vector[0]);
        for (int i = 1; i < vector.length; i++) {
            sb.append(',');
            sb.append(vector[i]);
        }
        sb.append(']');
        return sb.toString();
    }

    private String serializeResponse(Object response) throws Exception {
        return objectMapper.writeValueAsString(response);
    }

    private Object deserializeResponse(String json) throws Exception {
        // Object.class yields String, Number, Boolean, List, or LinkedHashMap.
        // POJO return types need the concrete target type for proper deserialization.
        return objectMapper.readValue(json, Object.class);
    }
}
```

This implementation does several key things:

Exact-match lookup: Fast SHA-256 hash comparison using DigestUtils.sha256Hex() for prompt+llmString combination.

Semantic lookup: Computes embedding via EmbeddingModel.embed() → performs vector similarity search using pgvector’s cosine distance operator (<=>) → returns matches above the configured similarity threshold.

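JSON object storage: Responses are serialized via Jackson's ObjectMapper and stored as JSONB (PostgreSQL's binary JSON format), so any LangChain4j return type can be written. Reading them back with Object.class, however, yields plain JSON types (String, Number, List, or Map), so POJO return types need explicit type information to round-trip correctly.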

TTL expiration: The is_expired(...) database helper function filters expired entries during lookups and enables bulk eviction of expired cache entries.

Statistics: Tracks cache efficiency over time including hits (exact vs semantic), misses, lookup/store times, entry counts, and calculated metrics like hit rate.
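The exact-match key and the TTL rule can both be reproduced with the JDK alone, which helps in unit tests that should not need a database. The sketch below assumes Java 17+ for `HexFormat`. Like `DigestUtils.sha256Hex(String)`, it hashes the UTF-8 bytes of `prompt + "|" + llmString`, so it should yield the same lowercase hex key. The SQL `is_expired(created_at, ttl_seconds)` function is not defined in this section, so the `isExpired` rule here is an assumption about its semantics. `CacheKeys` is a hypothetical name.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.time.Duration;
import java.time.Instant;
import java.util.HexFormat;

/** Hypothetical JDK-only mirror of the store's exact-match key and TTL check. */
final class CacheKeys {

    private CacheKeys() {
    }

    /** Same input as computeHash(): prompt + "|" + llmString, hashed as UTF-8 with SHA-256. */
    static String exactMatchKey(String prompt, String llmString) {
        try {
            MessageDigest digest = MessageDigest.getInstance("SHA-256");
            byte[] hash = digest.digest((prompt + "|" + llmString).getBytes(StandardCharsets.UTF_8));
            return HexFormat.of().formatHex(hash); // lowercase hex, 64 characters
        } catch (NoSuchAlgorithmException e) {
            // Every compliant JVM must provide SHA-256
            throw new IllegalStateException(e);
        }
    }

    /**
     * Assumed semantics of the SQL is_expired(created_at, ttl_seconds) helper:
     * an entry is expired once created_at + ttl is at or before "now".
     */
    static boolean isExpired(Instant createdAt, long ttlSeconds, Instant now) {
        return !createdAt.plus(Duration.ofSeconds(ttlSeconds)).isAfter(now);
    }
}
```

The separator is not escaped, so `("a|b", "c")` and `("a", "b|c")` produce the same key. As long as `llmString` never contains `|` (true for the `ClassName.methodName` format), the last separator splits the key unambiguously. A length prefix would remove the ambiguity entirely.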

This class introduces the main logic of:

Transforming prompts into embeddings: Uses registered EmbeddingModel instances to convert prompt strings into vector embeddings.

Storing vectors: Persists embeddings as PostgreSQL vector type along with prompt hash, original prompt, LLM identifier, and serialized response.

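Querying for similar vectors: Performs a cosine similarity search with pgvector's `<=>` operator, filtering by similarity threshold and TTL expiration. An HNSW index on the embedding column (not part of the code shown here) can speed up the `ORDER BY ... LIMIT 1` nearest-neighbor part.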

Serializing/deserializing responses: Uses Jackson’s ObjectMapper to convert response objects to/from JSONB format for storage and retrieval.
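Without JDBC, the semantic query reduces to four steps:

1. Keep rows for the same `llmString` that have not expired.
2. Score each row as 1 minus the cosine distance to the query vector.
3. Drop anything under the threshold.
4. Return the closest match.

The brute-force sketch below expresses those steps in plain Java. `InMemorySemanticIndex`, `Entry`, and `Match` are hypothetical names. For simplicity it stores an `expiresAt` instant instead of `created_at` plus `ttl_seconds`. A linear scan like this is only reasonable for small data sets, which is why the real store delegates the search to pgvector.

```java
import java.time.Instant;
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Optional;

/** Hypothetical in-memory equivalent of lookupSemantic()'s SQL, for illustration and tests. */
final class InMemorySemanticIndex {

    record Entry(String llmString, String prompt, float[] embedding, Object response, Instant expiresAt) {
    }

    record Match(Entry entry, double similarity) {
    }

    private final List<Entry> entries = new ArrayList<>();

    void add(Entry entry) {
        entries.add(entry);
    }

    Optional<Match> lookup(float[] query, String llmString, double threshold, Instant now) {
        return entries.stream()
                .filter(e -> e.llmString().equals(llmString))            // WHERE llm_string = ?
                .filter(e -> e.expiresAt().isAfter(now))                  // AND not expired
                .map(e -> new Match(e, cosine(query, e.embedding())))     // 1 - (embedding <=> query)
                .filter(m -> m.similarity() >= threshold)                 // AND similarity >= ?
                .max(Comparator.comparingDouble(Match::similarity));      // ORDER BY distance LIMIT 1
    }

    private static double cosine(float[] a, float[] b) {
        double dot = 0, na = 0, nb = 0;
        for (int i = 0; i < a.length; i++) {
            dot += (double) a[i] * b[i];
            na += (double) a[i] * a[i];
            nb += (double) b[i] * b[i];
        }
        return (na == 0 || nb == 0) ? 0.0 : dot / (Math.sqrt(na) * Math.sqrt(nb));
    }
}
```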

SemanticCacheInvocationHandler

Automatic interception of AI method calls. This Java dynamic proxy InvocationHandler intercepts method invocations on AI services, extracts prompts from parameters, and performs cache lookups before invoking the underlying service. Returns cached responses on hits, or invokes the service and caches the result on misses. Handles complex method resolution for proxy classes and various class hierarchies.

Create file: src/main/java/com/example/ai/cache/proxy/SemanticCacheInvocationHandler.java

```java
package com.example.ai.cache.proxy;

import java.lang.reflect.InvocationHandler;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Method;
import java.lang.reflect.Parameter;
import java.lang.reflect.Proxy;
import java.util.Arrays;
import java.util.stream.Collectors;

import com.example.ai.cache.SemanticCacheConfig;
import com.example.ai.cache.store.SemanticCacheStore;

import io.quarkus.logging.Log;

public class SemanticCacheInvocationHandler implements InvocationHandler {

    private final Object target;
    private final SemanticCacheStore cacheStore;
    private final SemanticCacheConfig config;

    public SemanticCacheInvocationHandler(
            Object target,
            SemanticCacheStore cacheStore,
            SemanticCacheConfig config) {
        this.target = target;
        this.cacheStore = cacheStore;
        this.config = config;
    }

    @Override
    public Object invoke(Object proxy, Method method, Object[] args) throws Throwable {
        // Skip caching for Object methods
        if (method.getDeclaringClass() == Object.class) {
            return invokeTarget(findTargetMethod(method), args);
        }

        // Extract prompt and build LLM string
        String prompt = extractPrompt(method, args);
        String llmString = buildLlmString(target, method);

        Log.debugf("Cache lookup for method %s with prompt: %s", method.getName(), prompt);

        // Try cache lookup
        var cached = cacheStore.lookup(prompt, llmString, config);
        if (cached.isPresent()) {
            Log.infof("Cache hit (similarity: %.3f, exact: %b) for method %s",
                    cached.get().getSimilarityScore(),
                    cached.get().isExactMatch(),
                    method.getName());
            return cached.get().getResponse();
        }

        Log.infof("Cache miss for method %s, invoking AI service", method.getName());

        // Execute actual method
        // Find the corresponding method on the target class to avoid
        // IllegalAccessException
        Method targetMethod = findTargetMethod(method);
        Object response = invokeTarget(targetMethod, args);

        // Store in cache
        cacheStore.store(prompt, llmString, response, config);

        return response;
    }

    /**
     * Invoke the target and rethrow the AI service's own exception instead of
     * the reflective InvocationTargetException wrapper.
     */
    private Object invokeTarget(Method targetMethod, Object[] args) throws Throwable {
        try {
            return targetMethod.invoke(target, args);
        } catch (InvocationTargetException e) {
            throw e.getCause();
        }
    }

    private String extractPrompt(Method method, Object[] args) {
        if (args == null || args.length == 0) {
            return "";
        }

        // Check for @UserMessage or @SystemMessage annotations
        Parameter[] parameters = method.getParameters();
        for (int i = 0; i < parameters.length; i++) {
            if (isPromptParameter(parameters[i])) {
                return String.valueOf(args[i]);
            }
        }

        // Fallback: concatenate all string arguments
        return Arrays.stream(args)
                .filter(arg -> arg instanceof String)
                .map(String::valueOf)
                .collect(Collectors.joining(" "));
    }

    private boolean isPromptParameter(Parameter parameter) {
        // Check for langchain4j annotations
        return Arrays.stream(parameter.getAnnotations())
                .anyMatch(ann -> ann.annotationType().getName().contains("UserMessage") ||
                        ann.annotationType().getName().contains("SystemMessage"));
    }

    private String buildLlmString(Object target, Method method) {
        // Identifies the cache "partition": service class + method name.
        // Model settings (name, temperature, ...) could be appended here as well.
        return String.format("%s.%s",
                target.getClass().getSimpleName(),
                method.getName());
    }

    /**
     * Find the corresponding method on the target class.
     * This is necessary because the method parameter is from the interface,
     * but we need to invoke it on the concrete target implementation.
     *
     * If the target is a proxy (like LangChain4j AI services), we need to
     * find the actual method on the target class, not use the interface method
     * directly.
     */
    private Method findTargetMethod(Method interfaceMethod) throws NoSuchMethodException {
        Class<?> targetClass = target.getClass();
        Class<?>[] parameterTypes = interfaceMethod.getParameterTypes();

        // Check if target is a proxy (common for Quarkus/LangChain4j services)
        if (Proxy.isProxyClass(targetClass)) {
            // For proxies, we can use the interface method directly
            // but we need to ensure it's accessible
            try {
                interfaceMethod.setAccessible(true);
                return interfaceMethod;
            } catch (Exception e) {
                // If that fails, try to find it through the proxy's interfaces
                for (Class<?> iface : targetClass.getInterfaces()) {
                    try {
                        Method m = iface.getMethod(interfaceMethod.getName(), parameterTypes);
                        m.setAccessible(true);
                        return m;
                    } catch (NoSuchMethodException ignored) {
                        // Continue
                    }
                }
            }
        }

        try {
            // Try to find the method directly on the target class
            Method targetMethod = targetClass.getMethod(
                    interfaceMethod.getName(),
                    parameterTypes);
            targetMethod.setAccessible(true);
            return targetMethod;
        } catch (NoSuchMethodException e) {
            // If not found, try to find it in the class hierarchy
            // This handles cases where the target has a different structure
            Class<?> currentClass = targetClass;
            while (currentClass != null && currentClass != Object.class) {
                try {
                    Method targetMethod = currentClass.getDeclaredMethod(
                            interfaceMethod.getName(),
                            parameterTypes);
                    targetMethod.setAccessible(true);
                    return targetMethod;
                } catch (NoSuchMethodException ignored) {
                    // Continue searching up the hierarchy
                }
                currentClass = currentClass.getSuperclass();
            }

            // If still not found, try to find by name and parameter count only
            // (less strict matching for proxy classes)
            for (Method m : targetClass.getMethods()) {
                if (m.getName().equals(interfaceMethod.getName()) &&
                        m.getParameterCount() == parameterTypes.length) {
                    m.setAccessible(true);
                    return m;
                }
            }

            // Last resort: use the interface method directly
            // This should work if the target implements the interface
            interfaceMethod.setAccessible(true);
            return interfaceMethod;
        }
    }
}
```

This dynamic proxy:

Detects LLM method calls: Implements InvocationHandler.invoke() to intercept all method invocations on the AI service interface (skips Object methods like toString(), equals(), etc.).

Extracts prompt strings: Extracts prompts from method parameters by checking for LangChain4j annotations (@UserMessage/@SystemMessage) or falling back to concatenating all string arguments.

Forms a unique LLM identity string (service + method): Builds an identifier string in the format “ClassName.methodName” to distinguish between different AI service configurations and methods.

Queries the cache: Performs cache lookup using the extracted prompt and LLM identity string before invoking the actual service.

Logs hits/misses: Logs cache lookups at DEBUG level, and cache hits (with similarity score and exact match flag) and misses at INFO level for observability.

Calls the real AI service when needed: Invokes the underlying AI service method only on cache misses, using intelligent method resolution to handle proxy classes and class hierarchies.

Stores new results: Automatically stores the AI service response in the cache after successful invocation for future lookups.

The proxy is what makes caching transparent: your service interface remains untouched. The dynamic proxy pattern allows caching to be added as a wrapper layer without requiring any modifications to the original AI service interface or implementation.
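The same interception pattern can be tried without Quarkus, LangChain4j, or a database. The sketch below wraps a hypothetical `Assistant` interface in a `java.lang.reflect.Proxy`. In place of the semantic store, its handler keeps an exact-match `ConcurrentHashMap` keyed by method name and arguments. `Assistant`, `CountingAssistant`, and `CachingHandler` are illustrative names, not part of the article's code. The handler also unwraps `InvocationTargetException`, so callers see the service's own exception rather than a reflective wrapper.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Method;
import java.lang.reflect.Proxy;
import java.util.Arrays;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical AI service interface. */
interface Assistant {
    String chat(String question);
}

/** Stand-in for the real AI service: counts how often it is actually called. */
final class CountingAssistant implements Assistant {
    final AtomicInteger calls = new AtomicInteger();

    @Override
    public String chat(String question) {
        calls.incrementAndGet();
        return "answer to: " + question;
    }
}

/** Simplified handler: exact-match cache keyed by method name and arguments. */
final class CachingHandler implements InvocationHandler {
    private final Object target;
    private final Map<String, Object> cache = new ConcurrentHashMap<>();

    CachingHandler(Object target) {
        this.target = target;
    }

    @Override
    public Object invoke(Object proxy, Method method, Object[] args) throws Throwable {
        if (method.getDeclaringClass() == Object.class) {
            return method.invoke(target, args); // don't cache toString/equals/hashCode
        }
        String key = method.getName() + "|" + Arrays.toString(args);
        Object cached = cache.get(key);
        if (cached != null) {
            return cached; // cache hit: the real service is not called
        }
        try {
            Object response = method.invoke(target, args);
            if (response != null) {
                cache.put(key, response);
            }
            return response;
        } catch (InvocationTargetException e) {
            throw e.getCause(); // rethrow the service's own exception
        }
    }

    @SuppressWarnings("unchecked")
    static <T> T wrap(T target, Class<T> iface) {
        return (T) Proxy.newProxyInstance(
                iface.getClassLoader(), new Class<?>[] {iface}, new CachingHandler(target));
    }
}
```

A paraphrase such as "Explain Quarkus" would still miss in this version. That gap is exactly what the embedding-based lookup in `PgVectorSemanticCacheStore` is meant to close.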

Per-User Caching

Add a user identifier to the key:

```java
String llmString = userId + ":" + buildLlmString(...);
```

This prevents data leakage across tenants and lets you cache both globally shared and user-specific answers.
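The one-liner leaves open where `userId` comes from and what happens when there is none. The sketch below shows one possible answer, not the article's implementation. A hypothetical `NamespacedLlmString` helper takes a `Supplier<String>` for the current user; in a Quarkus application that supplier could be backed by the authenticated identity, but that wiring is assumed, not shown. The helper uses distinct `global:` and `user:` prefixes, and it fails closed when a user-scoped lookup has no user.

```java
import java.util.function.Supplier;

/** Hypothetical helper: namespaces the LLM identity string per user or globally. */
final class NamespacedLlmString {

    private final Supplier<String> currentUserId;

    NamespacedLlmString(Supplier<String> currentUserId) {
        this.currentUserId = currentUserId;
    }

    /**
     * userScoped = false: entry shared by all users ("global:" prefix).
     * userScoped = true:  entry visible only to the current user ("user:<id>:" prefix).
     * Distinct prefixes keep a user id such as "global" from colliding with shared entries.
     */
    String build(String serviceName, String methodName, boolean userScoped) {
        String base = serviceName + "." + methodName; // same shape as buildLlmString()
        if (!userScoped) {
            return "global:" + base;
        }
        String userId = currentUserId.get();
        if (userId == null || userId.isBlank()) {
            // Fail closed: never fall back to the shared namespace for user-specific data
            throw new IllegalStateException("No user id available for a user-scoped cache lookup");
        }
        return "user:" + userId + ":" + base;
    }
}
```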

CachedAiServiceFactory

Developer-friendly factory for building cached wrappers.

Create file: src/main/java/com/example/ai/cache/CachedAiServiceFactory.java

```java
package com.example.ai.cache;

import java.lang.reflect.Proxy;
import java.util.HashSet;
import java.util.Set;

import com.example.ai.cache.proxy.SemanticCacheInvocationHandler;
import com.example.ai.cache.store.SemanticCacheStore;

import jakarta.enterprise.context.ApplicationScoped;
import jakarta.inject.Inject;

@ApplicationScoped
public class CachedAiServiceFactory {

    @Inject
    SemanticCacheStore cacheStore;

    /**
     * Wrap an AI service with semantic caching
     */
    @SuppressWarnings("unchecked")
    public <T> T createCached(T aiService, SemanticCacheConfig config) {
        Class<?> serviceClass = aiService.getClass();
        Class<?>[] interfaces = getAllInterfaces(serviceClass);

        if (interfaces.length == 0) {
            throw new IllegalArgumentException(
                    "AI service must implement at least one interface");
        }

        return (T) Proxy.newProxyInstance(
                serviceClass.getClassLoader(),
                interfaces,
                new SemanticCacheInvocationHandler(aiService, cacheStore, config));
    }

    /**
     * Create with default configuration
     */
    public <T> T createCached(T aiService) {
        return createCached(aiService, SemanticCacheConfig.builder().build());
    }

    private Class<?>[] getAllInterfaces(Class<?> clazz) {
        Set<Class<?>> interfaces = new HashSet<>();
        collectInterfaces(clazz, interfaces);
        return interfaces.toArray(new Class<?>[0]);
    }

    private void collectInterfaces(Class<?> clazz, Set<Class<?>> interfaces) {
        if (clazz == null || clazz == Object.class) {
            return;
        }
        for (Class<?> iface : clazz.getInterfaces()) {
            interfaces.add(iface);
            collectInterfaces(iface, interfaces);
        }
        collectInterfaces(clazz.getSuperclass(), interfaces);
    }

    /**
     * Get access to the cache store for management operations
     */
    public SemanticCacheStore getCacheStore() {
        return cacheStore;
    }

    /**
     * Get cache statistics
     */
    public CacheStatistics getStatistics() {
        return cacheStore.getStatistics();
    }
}
```

The factory:

Gathers all implemented interfaces: getAllInterfaces() recursively collects every interface from the service class, from its superclasses, and from the super-interfaces of those interfaces. This ensures the proxy implements the complete interface hierarchy.

Wraps the service in a dynamic proxy: Creates a Java dynamic proxy using Proxy.newProxyInstance() that implements all gathered interfaces and delegates method invocations to the SemanticCacheInvocationHandler.

Injects your configuration: Passes the SemanticCacheConfig to the invocation handler and injects the SemanticCacheStore (via CDI) to provide the caching infrastructure. Also provides a convenience method to create cached services with default configuration.

Provides access to cache management: Exposes methods to access the underlying cache store and statistics for monitoring and management operations.

This makes the caching layer reusable across multiple AI services. The factory pattern with generics allows any AI service type to be wrapped with semantic caching, requiring only a call to createCached() with the service instance and configuration.
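The recursive walk matters because `Class.getInterfaces()` returns only the interfaces a class declares directly. It omits those inherited from superclasses and super-interfaces. The standalone sketch below shows the difference on a small hypothetical hierarchy. `RootApi`, `ChatApi`, `AdminApi`, `BaseService`, `ConcreteService` and `InterfaceWalk` are illustrative names only.

```java
import java.util.LinkedHashSet;
import java.util.Set;

interface RootApi { }
interface ChatApi extends RootApi { }
interface AdminApi { }

class BaseService implements ChatApi { }
class ConcreteService extends BaseService implements AdminApi { }

final class InterfaceWalk {
    private InterfaceWalk() {
    }

    // Same traversal idea as collectInterfaces in the factory above
    static Set<Class<?>> allInterfaces(Class<?> clazz) {
        Set<Class<?>> result = new LinkedHashSet<>();
        collect(clazz, result);
        return result;
    }

    private static void collect(Class<?> clazz, Set<Class<?>> out) {
        if (clazz == null || clazz == Object.class) {
            return; // interfaces have a null superclass, so recursion stops here too
        }
        for (Class<?> iface : clazz.getInterfaces()) {
            out.add(iface);
            collect(iface, out);
        }
        collect(clazz.getSuperclass(), out);
    }
}
```

`ConcreteService.class.getInterfaces()` returns only `AdminApi`, but the walk also finds `ChatApi` and `RootApi`. A proxy built from the direct list alone could not be cast to `ChatApi`.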

CacheSetup: Embedding Model Registration

Registers embedding models by name and sets up the database.

The database table stores the prompt, hash, embedding vector, response, and TTL.

The HNSW index gives fast semantic similarity search.
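The sketch below shows how a lookup against this table could be phrased. It assumes pgvector's `<=>` operator, which returns cosine distance, so similarity is `1 - distance`. Under that assumption, a 0.90 similarity threshold means a cosine distance of at most 0.10. `PgVectorHelpers` and `NEAREST_SQL` are hypothetical and not taken from the article's store. The query binds the query vector twice and `llm_string` once.

```java
// Hypothetical helpers around pgvector's cosine distance operator (<=>)
final class PgVectorHelpers {
    private PgVectorHelpers() {
    }

    // Hypothetical nearest-neighbour query; ORDER BY embedding <=> ... LIMIT k is the
    // shape an HNSW index built with vector_cosine_ops can serve
    static final String NEAREST_SQL = """
            SELECT response_json, 1 - (embedding <=> ?::vector) AS similarity
            FROM semantic_cache
            WHERE llm_string = ? AND NOT is_expired(created_at, ttl_seconds)
            ORDER BY embedding <=> ?::vector
            LIMIT 1
            """;

    // pgvector accepts vectors in text form: [x1,x2,...]
    static String toVectorLiteral(float[] v) {
        StringBuilder sb = new StringBuilder("[");
        for (int i = 0; i < v.length; i++) {
            if (i > 0) {
                sb.append(',');
            }
            sb.append(v[i]); // Float.toString always uses '.' as the decimal separator
        }
        return sb.append(']').toString();
    }

    // Client-side cosine similarity, e.g. for sanity-checking a threshold in tests
    static double cosineSimilarity(float[] a, float[] b) {
        if (a.length != b.length) {
            throw new IllegalArgumentException("dimension mismatch");
        }
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.length; i++) {
            dot += (double) a[i] * b[i];
            normA += (double) a[i] * a[i];
            normB += (double) b[i] * b[i];
        }
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }
}
```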

Create file: src/main/java/com/example/ai/cache/config/CacheSetup.java

```java
package com.example.ai.cache.config;

import java.sql.Connection;
import java.sql.SQLException;
import java.sql.Statement;

import com.example.ai.cache.store.PgVectorSemanticCacheStore;

import dev.langchain4j.model.embedding.EmbeddingModel;
import io.agroal.api.AgroalDataSource;
import io.quarkus.logging.Log;
import io.quarkus.runtime.StartupEvent;
import jakarta.enterprise.context.ApplicationScoped;
import jakarta.enterprise.event.Observes;
import jakarta.inject.Inject;

@ApplicationScoped
public class CacheSetup {

    @Inject
    PgVectorSemanticCacheStore cacheStore;

    @Inject
    EmbeddingModel embeddingModel; // Injected from LangChain4j configuration

    @Inject
    AgroalDataSource dataSource;

    void onStart(@Observes StartupEvent event) {
        // Initialize database schema
        initializeDatabaseSchema();

        // Register embedding models under the name referenced by embeddingModelName(...)
        cacheStore.registerEmbeddingModel("default", embeddingModel);
    }

    private void initializeDatabaseSchema() {
        try (Connection conn = dataSource.getConnection();
             Statement stmt = conn.createStatement()) {

            // Create vector extension
            stmt.execute("CREATE EXTENSION IF NOT EXISTS vector");
            Log.info("Created vector extension (if not exists)");

            // Create semantic_cache table.
            // vector(384) must match the output dimension of the embedding model.
            String createTableSql = """
                    CREATE TABLE IF NOT EXISTS semantic_cache (
                        id SERIAL PRIMARY KEY,
                        prompt_hash VARCHAR(64) NOT NULL,
                        prompt TEXT NOT NULL,
                        llm_string TEXT NOT NULL,
                        embedding vector(384),
                        response_json JSONB NOT NULL,
                        ttl_seconds INTEGER,
                        created_at TIMESTAMP DEFAULT NOW(),
                        CONSTRAINT uk_prompt_llm UNIQUE (prompt_hash, llm_string)
                    )
                    """;
            stmt.execute(createTableSql);
            Log.info("Created semantic_cache table (if not exists)");

            // Create indexes.
            // Note: the UNIQUE constraint above already creates an index on
            // (prompt_hash, llm_string), so idx_prompt_hash is redundant.
            try {
                stmt.execute("CREATE INDEX IF NOT EXISTS idx_prompt_hash ON semantic_cache(prompt_hash, llm_string)");
                Log.info("Created idx_prompt_hash index (if not exists)");
            } catch (SQLException e) {
                // Index might already exist, log and continue
                Log.debug("Index idx_prompt_hash may already exist: " + e.getMessage());
            }

            try {
                // HNSW index for cosine-distance (<=>) nearest-neighbour search
                stmt.execute("""
                        CREATE INDEX IF NOT EXISTS idx_embedding_hnsw ON semantic_cache
                        USING hnsw (embedding vector_cosine_ops)
                        """);
                Log.info("Created idx_embedding_hnsw index (if not exists)");
            } catch (SQLException e) {
                // Index might already exist, log and continue
                Log.debug("Index idx_embedding_hnsw may already exist: " + e.getMessage());
            }

            // Create the is_expired function
            createIsExpiredFunction(conn);

        } catch (SQLException e) {
            Log.error("Error initializing database schema", e);
        }
    }

    private void createIsExpiredFunction(Connection conn) {
        // STABLE rather than IMMUTABLE: the result depends on NOW(), which changes over time
        String sql = """
                CREATE OR REPLACE FUNCTION is_expired(created TIMESTAMP, ttl INT)
                RETURNS BOOLEAN AS $$
                BEGIN
                    RETURN ttl IS NOT NULL AND created + (ttl || ' seconds')::INTERVAL < NOW();
                END;
                $$ LANGUAGE plpgsql STABLE
                """;
        try (Statement stmt = conn.createStatement()) {
            stmt.execute(sql);
            Log.info("Created is_expired function successfully");
        } catch (SQLException e) {
            Log.error("Error creating is_expired function", e);
        }
    }
}
```

Integrating With a Real AI Service

Now it’s time to create the AI service we want to invoke.

Create file: src/main/java/com/example/ai/CustomerSupportAgent.java

```java
package com.example.ai;

import dev.langchain4j.service.SystemMessage;
import dev.langchain4j.service.UserMessage;
import io.quarkiverse.langchain4j.RegisterAiService;
import jakarta.enterprise.context.ApplicationScoped;

@RegisterAiService
@ApplicationScoped
interface CustomerSupportAgent {

    @SystemMessage("You are a helpful customer support agent.")
    String chat(@UserMessage String userMessage);

    String analyze(String content);
}
```

And a corresponding REST endpoint so we can test all this:

Create file: src/main/java/com/example/ai/CustomerSupportResource.java

```java
package com.example.ai;

import java.time.Duration;

import com.example.ai.cache.CacheStatistics;
import com.example.ai.cache.CachedAiServiceFactory;
import com.example.ai.cache.SemanticCacheConfig;

import io.quarkus.runtime.StartupEvent;
import jakarta.enterprise.event.Observes;
import jakarta.inject.Inject;
import jakarta.ws.rs.DELETE;
import jakarta.ws.rs.GET;
import jakarta.ws.rs.POST;
import jakarta.ws.rs.Path;
import jakarta.ws.rs.Produces;
import jakarta.ws.rs.core.MediaType;

@Path("/support")
@Produces(MediaType.APPLICATION_JSON)
public class CustomerSupportResource {

    @Inject
    CustomerSupportAgent rawAgent;

    @Inject
    CachedAiServiceFactory cacheFactory;

    private CustomerSupportAgent cachedAgent;

    void onStart(@Observes StartupEvent event) {
        // Create the cached wrapper once, at startup
        SemanticCacheConfig config = SemanticCacheConfig.builder()
                .enabled(true)
                .similarityThreshold(0.90)  // minimum similarity for a semantic hit
                .ttl(Duration.ofMinutes(30))
                .strategy(SemanticCacheConfig.CacheStrategy.HYBRID) // exact match first, then semantic
                .embeddingModelName("default") // name registered in CacheSetup
                .build();

        this.cachedAgent = cacheFactory.createCached(rawAgent, config);
    }

    @POST
    @Path("/chat")
    public String chat(String message) {
        return cachedAgent.chat(message);
    }

    @POST
    @Path("/analyze")
    public String analyze(String content) {
        return cachedAgent.analyze(content);
    }

    @GET
    @Path("/cache/stats")
    public CacheStatistics.StatisticsSnapshot getStats() {
        return cacheFactory.getStatistics().getSnapshot();
    }

    @DELETE
    @Path("/cache/clear")
    public void clearCache() {
        cacheFactory.getCacheStore().clear();
    }
}
```

You now have full semantic caching without modifying your AI service.

Verification and What to Expect

Start Quarkus:

```shell
quarkus dev
```

Use three very similar requests. In this example, I ask about the German president with slight wording differences to verify exact and semantic matching.

```shell
curl -X POST localhost:8080/support/chat -d "Who is president of Germany??"
```

Cache miss (first request, cache empty)

Query sent to AI service; response cached

“As of my last update in October 2021, the President of Germany is Frank-Walter Steinmeier...”

```shell
curl -X POST localhost:8080/support/chat -d "Who is president of Germany??"
```

Exact match hit

Exact match found (normalized/hash match)

Same cached response returned

```shell
curl -X POST localhost:8080/support/chat -d "Who is the president of Germany??"
```

Semantic match hit

No exact match; semantic similarity (≥0.90) found

Same cached response returned

```shell
curl localhost:8080/support/cache/stats | jq
```

The cache statistics are shown below. This snapshot was taken after four requests, so it includes one more semantic hit than the three calls above:

```json
{
  "totalHits": 3,
  "totalMisses": 1,
  "exactMatchHits": 1,
  "semanticMatchHits": 2,
  "totalEntries": 1,
  "hitRate": 0.75,
  "averageLookupTimeMs": 73,
  "averageStoreTimeMs": 20,
  "startTime": "2025-11-15T07:41:53.743812Z",
  "uptime": 105.788598000,
  "totalRequests": 4
}
```

Cache efficiency:

Hit rate: 75% (3 hits out of 4 requests)

1 exact match, 2 semantic matches

Only 1 unique entry stored

Performance:

Average lookup time: 73ms

Average store time: 20ms

Cache hits skip the LLM call entirely, and an LLM call usually costs far more than a 73 ms lookup

The semantic cache is working as expected:

Exact matching catches repeated prompts through the normalized hash

Semantic matching finds similar questions with different wording

The HYBRID strategy (exact first, then semantic) is effective

The 0.90 similarity threshold matched the reworded queries in this test

The cache reduces redundant AI service calls while maintaining response quality

The system successfully cached one response and reused it for three semantically equivalent queries, demonstrating effective semantic caching.

Play with:

similarity threshold

TTL

changing the prompt slightly

adding per-user keying

Extended Notes and Considerations

Semantic caching is powerful, but it’s not a free lunch. Once you look closer, several practical challenges appear around performance, accuracy, and maintainability.

LLM caching adds its own constraints. Embeddings are expensive to compute, lookups hit the database frequently, and similarity thresholds require tuning. The cache becomes more than a shortcut: it becomes an architectural component with trade-offs you need to understand.

A few things to keep in mind, particularly with this short (!) example:

Performance.

I implemented embedding generation synchronously, and it runs on every lookup. Caching embeddings, avoiding duplicate vector bindings, and improving vector serialization can reduce that overhead. Every DB operation is blocking, so adding async paths or batching can make the system smoother under load.
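One way to cache embeddings is to memoize the embedding call per prompt, sketched below. To keep the block standalone, it wraps a plain `Function<String, float[]>` rather than LangChain4j's `EmbeddingModel`. `MemoizingEmbedder` is a hypothetical name. The size bound is deliberately crude: once the map is full, the sketch stops adding new entries instead of evicting old ones.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

// Hypothetical memoizing wrapper: each distinct prompt is embedded at most once (until full)
final class MemoizingEmbedder implements Function<String, float[]> {
    private final Function<String, float[]> delegate; // stand-in for the real embedding call
    private final int maxEntries;
    private final Map<String, float[]> cache = new ConcurrentHashMap<>();

    MemoizingEmbedder(Function<String, float[]> delegate, int maxEntries) {
        this.delegate = delegate;
        this.maxEntries = maxEntries;
    }

    @Override
    public float[] apply(String prompt) {
        float[] cached = cache.get(prompt);
        if (cached != null) {
            return cached; // shared array: callers should treat it as read-only
        }
        float[] vector = delegate.apply(prompt);
        if (cache.size() < maxEntries) {
            cache.putIfAbsent(prompt, vector);
        }
        return vector;
    }
}
```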

Accuracy.

Semantic matches rely on embeddings. Changes in the embedding model, or using the wrong vector dimension in pgvector, will break cache consistency. Type information is currently lost on deserialization, so storing metadata or using a mapping strategy would help to maintain correctness.

Concurrency and reliability.

Statistics updates and entry counting are not fully synchronized. Store operations aren’t transactional. Adding timeouts, retry logic for transient DB errors, or even a circuit breaker protects the cache layer from cascading failures when the database struggles.
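For the statistics, `java.util.concurrent.atomic.LongAdder` is one option that stays correct under concurrent increments. The class below is a hypothetical replacement sketch, not the article's `CacheStatistics`. Each counter is updated safely on its own, but the hit rate reads the counters one after another. It is therefore a close snapshot rather than an atomic one.

```java
import java.util.concurrent.atomic.LongAdder;

// Hypothetical thread-safe counters for cache hits and misses
final class ConcurrentCacheStats {
    private final LongAdder exactHits = new LongAdder();
    private final LongAdder semanticHits = new LongAdder();
    private final LongAdder misses = new LongAdder();

    void recordHit(boolean exactMatch) {
        (exactMatch ? exactHits : semanticHits).increment();
    }

    void recordMiss() {
        misses.increment();
    }

    long totalHits() {
        return exactHits.sum() + semanticHits.sum();
    }

    long totalMisses() {
        return misses.sum();
    }

    // Sums are read separately, so under load this is a snapshot, not an atomic ratio
    double hitRate() {
        long hits = totalHits();
        long total = hits + totalMisses();
        return total == 0 ? 0.0 : (double) hits / total;
    }
}
```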

System Prompts.

We currently ignore @SystemMessage content when building cache keys. This is fine for simple demos, but in real applications the system prompt shapes the model’s behavior as much as the user prompt does. If your system messages change (persona, rules, constraints), the cached responses may become invalid. A robust cache should incorporate system prompts, or a hashed representation of them, into the identity key.
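Below is a minimal sketch of folding a hashed system prompt into the identity key. It uses SHA-256 via `MessageDigest` and Java 17's `HexFormat`. `SystemPromptKeys` and the `|sys=` suffix are hypothetical. The sketch also assumes the handler can obtain the system prompt text somehow, for example from the @SystemMessage annotation on the invoked method. How it does so is not shown here.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.HexFormat;

// Hypothetical helper: folds a hash of the system prompt into the cache identity
final class SystemPromptKeys {
    private SystemPromptKeys() {
    }

    static String sha256Hex(String text) {
        try {
            MessageDigest digest = MessageDigest.getInstance("SHA-256");
            byte[] hash = digest.digest(text.getBytes(StandardCharsets.UTF_8));
            return HexFormat.of().formatHex(hash); // 64 lowercase hex characters
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("SHA-256 is required on every Java platform", e);
        }
    }

    // Appends "|sys=<hash>" (or "|sys=none") so a changed system prompt yields a new key
    static String withSystemPrompt(String llmString, String systemPrompt) {
        String sys = (systemPrompt == null) ? "none" : sha256Hex(systemPrompt);
        return llmString + "|sys=" + sys;
    }
}
```

Hashing keeps the key short no matter how long the system prompt is. Editing the prompt in any way produces a new key, which silently bypasses the old entries instead of serving stale answers.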

Scalability.

The cache is tied to a single PostgreSQL instance. A future improvement could introduce multi-level caching: in-memory for ultra-fast lookups, Postgres for semantic queries, and a distributed cache like Redis for horizontal scale.

Invalidation and freshness.

TTL-based cleanup works, but cold starts and model changes still require manual cache warming or purge operations. Per-user caching avoids cross-contamination, but global prompts (“refund policy”) could benefit from a shared layer.
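Below is a sketch of TTL cleanup that reuses the `is_expired` function created in CacheSetup. It adds a Java mirror of the same rule, which is handy for in-memory tiers and tests. `TtlMaintenance` is a hypothetical name. One way to schedule `purgeExpired` is Quarkus's `@Scheduled` from the quarkus-scheduler extension, but the sketch does not wire that up.

```java
import java.sql.Connection;
import java.sql.SQLException;
import java.sql.Statement;
import java.time.Instant;

// Hypothetical maintenance helpers for the TTL column
final class TtlMaintenance {
    // Relies on the is_expired(created, ttl) function created in CacheSetup
    static final String PURGE_SQL =
            "DELETE FROM semantic_cache WHERE is_expired(created_at, ttl_seconds)";

    private TtlMaintenance() {
    }

    // Deletes expired rows and returns how many were removed
    static int purgeExpired(Connection conn) throws SQLException {
        try (Statement stmt = conn.createStatement()) {
            return stmt.executeUpdate(PURGE_SQL);
        }
    }

    // Java mirror of is_expired: a null TTL never expires
    static boolean isExpired(Instant createdAt, Integer ttlSeconds, Instant now) {
        return ttlSeconds != null && createdAt.plusSeconds(ttlSeconds).isBefore(now);
    }
}
```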

Security and robustness.

Adding validation for prompts and response sizes, plus sanitizing vector serialization inputs, keeps the cache safe and predictable.

In short: semantic caching works, but it has edges. Improving async behavior, input validation, error handling, and embedding reuse would make the system faster and safer. These refinements aren’t mandatory for learning, but they are useful exercises once you understand how the core mechanics work.

Variations and Extensions

A few interesting ideas to try next:

Multilevel Cache

Fast in-memory cache → semantic pgvector cache → LLM.
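Below is a minimal sketch of that chain. It places a bounded in-memory LRU map (a `LinkedHashMap` in access order) in front of a semantic tier, which in turn sits in front of the LLM. `TwoLevelCache` is hypothetical. The semantic tier and the LLM are modeled as plain functions, so any store, including the pgvector one, could be plugged in. Concurrent misses for the same prompt may each call the LLM. The sketch does not deduplicate them.

```java
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Optional;
import java.util.function.BiConsumer;
import java.util.function.Function;

// Hypothetical two-tier cache: bounded in-memory LRU -> semantic store -> LLM
final class TwoLevelCache {
    private final Map<String, String> memory;
    private final Function<String, Optional<String>> semanticLookup; // e.g. the pgvector store
    private final BiConsumer<String, String> semanticStore;
    private final Function<String, String> llm;

    TwoLevelCache(int maxInMemory,
                  Function<String, Optional<String>> semanticLookup,
                  BiConsumer<String, String> semanticStore,
                  Function<String, String> llm) {
        // accessOrder=true plus removeEldestEntry turns LinkedHashMap into an LRU cache
        this.memory = Collections.synchronizedMap(new LinkedHashMap<String, String>(16, 0.75f, true) {
            @Override
            protected boolean removeEldestEntry(Map.Entry<String, String> eldest) {
                return size() > maxInMemory;
            }
        });
        this.semanticLookup = semanticLookup;
        this.semanticStore = semanticStore;
        this.llm = llm;
    }

    String get(String prompt) {
        String hit = memory.get(prompt); // level 1: exact text, in-process
        if (hit != null) {
            return hit;
        }
        Optional<String> semantic = semanticLookup.apply(prompt); // level 2: meaning-based
        if (semantic.isPresent()) {
            memory.put(prompt, semantic.get());
            return semantic.get();
        }
        String answer = llm.apply(prompt); // level 3: the expensive call
        semanticStore.accept(prompt, answer);
        memory.put(prompt, answer);
        return answer;
    }
}
```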

Cache Warming

Pre-seed common prompts at startup or deployment.
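Warming can be as simple as pushing a list of known prompts through the cached service once, for example from a startup observer. In the article's setup, that could mean passing `cachedAgent::chat`. `CacheWarmer` is a hypothetical helper. A failing prompt is logged and skipped so that one bad request does not abort startup. Every warmed prompt that misses still costs one real LLM call.

```java
import java.util.List;
import java.util.function.Function;

// Hypothetical warm-up helper: sends known prompts through the cached service once
final class CacheWarmer {
    private CacheWarmer() {
    }

    // Returns how many prompts were warmed; failures are skipped, not fatal
    static int warm(List<String> prompts, Function<String, String> cachedCall) {
        int warmed = 0;
        for (String prompt : prompts) {
            try {
                cachedCall.apply(prompt);
                warmed++;
            } catch (RuntimeException e) {
                System.err.println("Warm-up failed for prompt: " + prompt + " (" + e.getMessage() + ")");
            }
        }
        return warmed;
    }
}
```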

Batch Semantic Retrieval

Search for top-k similar prompts, not just the closest.
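In SQL, top-k is mostly a matter of raising the `LIMIT` on an `ORDER BY embedding <=> ...` query. On the Java side, you may still want to keep only the k best candidates above the similarity threshold, for example after re-scoring rows. `TopK.select` below is a hypothetical generic helper. It maintains a min-heap of size k, so it runs in O(n log k).

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.PriorityQueue;
import java.util.function.ToDoubleFunction;

// Hypothetical top-k selection over scored candidates, with a minimum score
final class TopK {
    private TopK() {
    }

    static <T> List<T> select(List<T> candidates, ToDoubleFunction<T> score, int k, double threshold) {
        if (k <= 0) {
            return List.of();
        }
        // Min-heap on score: the weakest of the current top k sits at the head
        PriorityQueue<T> heap = new PriorityQueue<>(Comparator.comparingDouble(score));
        for (T candidate : candidates) {
            if (score.applyAsDouble(candidate) < threshold) {
                continue; // below the similarity threshold
            }
            heap.offer(candidate);
            if (heap.size() > k) {
                heap.poll(); // drop the lowest score
            }
        }
        List<T> result = new ArrayList<>(heap);
        result.sort(Comparator.comparingDouble(score).reversed()); // best match first
        return result;
    }
}
```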

Hybrid Per-User and Global Cache

Some prompts (like “What is your refund policy?”) are global.

Others are user-specific.
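A hybrid lookup mostly comes down to ordering: check the user's own scope first, then fall back to the shared one. The sketch below models the store as a function of (scope, prompt). `HybridScopeLookup` and the `user:` / `global` scope names are hypothetical. Deciding which answers are safe to write into the global scope remains an application decision.

```java
import java.util.Optional;
import java.util.function.BiFunction;

// Hypothetical lookup order for a hybrid cache: user scope first, then the shared scope
final class HybridScopeLookup {
    static final String GLOBAL_SCOPE = "global";

    private HybridScopeLookup() {
    }

    static Optional<String> lookup(String userId, String prompt,
                                   BiFunction<String, String, Optional<String>> store) {
        if (userId != null && !userId.isBlank()) {
            Optional<String> own = store.apply("user:" + userId, prompt);
            if (own.isPresent()) {
                return own; // user-specific answers win over shared ones
            }
        }
        return store.apply(GLOBAL_SCOPE, prompt);
    }
}
```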

Semantic caching turns your Quarkus + LangChain4j AI services into faster, smarter, more predictable systems. Happy caching :-)
