Zero Trust for Java Architects: How Quarkus Secures APIs, Kafka, and AI

A practical guide for enterprise Java teams to embed Zero Trust into REST, messaging, and LLM-powered services with Quarkus.

The days of relying on firewalls and VPNs as the single layer of defense are over. Applications no longer sit safely inside a corporate data center. Hybrid cloud, SaaS integrations, and edge deployments have erased the old perimeter. What remains is a simple but powerful principle: never trust by default, always verify.

This principle, known as Zero Trust, changes how Java architects must design systems. It means every API call, every service-to-service request, every event on a broker, and even every prompt to an AI model needs identity, policy enforcement, and observability. Zero Trust is not a new product to buy. It is a design discipline that touches all parts of your architecture.

Quarkus offers a foundation that allows architects to bring Zero Trust into reality. Its extensions for OpenID Connect, JWT, Vault, Kafka, and LangChain4j provide the building blocks to integrate identity, secrets, and audit into applications from the start.

Identity Everywhere

The first step of Zero Trust is recognizing that identity is the new perimeter. No request, not even one traveling inside your Kubernetes cluster, should be considered safe without authentication. In practical terms, this means every call to your Quarkus services must carry a token, and every service must validate it before processing.

Quarkus integrates with identity providers through the OIDC extension and SmallRye JWT. This allows you to design systems where no endpoint can be reached without verifiable credentials.

The architectural rule is simple: treat all traffic as untrusted until identity has been proven.
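To make the "untrusted until proven" rule concrete, here is a minimal, framework-free sketch of the deny-by-default checks a service applies to a token's claims. It assumes the signature has already been verified (in a Quarkus service, the OIDC or JWT extension would handle that). `TokenClaims` and `TokenGate` are hypothetical names used only for illustration.

```java
import java.time.Instant;
import java.util.Set;

/** Hypothetical claim set; assumed to come from a token whose signature was already verified. */
record TokenClaims(String issuer, Set<String> audiences, String subject, Instant expiresAt) {}

/** Illustrative gate: a request is rejected unless every check passes. */
final class TokenGate {
    private final String expectedIssuer;
    private final String expectedAudience;

    TokenGate(String expectedIssuer, String expectedAudience) {
        this.expectedIssuer = expectedIssuer;
        this.expectedAudience = expectedAudience;
    }

    boolean accepts(TokenClaims claims, Instant now) {
        if (claims == null) return false;                                   // no token, no access
        if (claims.subject() == null || claims.subject().isBlank()) return false;
        if (!expectedIssuer.equals(claims.issuer())) return false;          // wrong identity provider
        if (claims.audiences() == null
                || !claims.audiences().contains(expectedAudience)) return false; // token meant for another service
        return claims.expiresAt() != null && now.isBefore(claims.expiresAt());   // expired tokens are rejected
    }
}
```

The point of the sketch is the shape of the decision: there is no branch that grants access without a positive check.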

Least Privilege Access

Once identity is established, the question becomes: what should this caller be allowed to do? Zero Trust requires strict enforcement of least privilege. A user or service may have access to one operation but not another, and those permissions may change depending on context.

Quarkus makes this explicit through annotations like @RolesAllowed. For more dynamic scenarios, you can integrate with Keycloak authorization services or an external policy engine such as OPA. The important point is that access rules are not hidden in the network but expressed clearly in the code and policy definitions.

This approach reduces the blast radius of any compromise and ensures that developers see and test the security rules as part of their normal workflow.
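As a rough illustration of what a role check boils down to, the following plain-Java sketch models a deny-by-default policy. The role and operation names are invented for the example, and `OperationPolicy` is a hypothetical class, not a Quarkus API. In a real service the same rule would typically be expressed declaratively, for example with @RolesAllowed.

```java
import java.util.Map;
import java.util.Set;

/** Illustrative least-privilege policy: anything not explicitly granted is denied. */
final class OperationPolicy {
    // Hypothetical role-to-operation grants.
    private final Map<String, Set<String>> grants;

    OperationPolicy(Map<String, Set<String>> grants) {
        this.grants = Map.copyOf(grants);
    }

    /** Returns true only if at least one of the caller's roles grants the operation. */
    boolean isAllowed(Set<String> callerRoles, String operation) {
        for (String role : callerRoles) {
            if (grants.getOrDefault(role, Set.of()).contains(operation)) {
                return true;
            }
        }
        return false; // default deny
    }
}
```

Because the policy is ordinary code and data, it can be covered by unit tests like any other business rule.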

Secure Service Communication

Zero Trust also changes how internal communication is handled. The traditional view that “inside is safe” no longer applies. Service-to-service traffic must be encrypted and authenticated.

With Quarkus, you can use the OIDC client filter to acquire access tokens automatically for outgoing REST calls, or the OIDC token propagation filter to forward the caller's token. With propagation, when one service calls another, the identity of the original user is still known and can be verified downstream. Combined with TLS or mutual TLS, this ensures that compromised network access does not equate to compromised business logic.

The guiding principle is that every hop, even inside the cluster, must be treated like an edge.
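The sketch below shows the "every hop is an edge" idea with nothing but the JDK HTTP client types: an outgoing request is only built if the target uses TLS and a token is present. `OutboundCalls` is a hypothetical helper for illustration. In Quarkus, the filters mentioned above would attach the token for you.

```java
import java.net.URI;
import java.net.http.HttpRequest;

/** Illustrative helper: refuses to build an internal call that is unencrypted or anonymous. */
final class OutboundCalls {

    static HttpRequest authenticatedGet(URI target, String accessToken) {
        if (!"https".equalsIgnoreCase(target.getScheme())) {
            throw new IllegalArgumentException("refusing non-TLS target: " + target);
        }
        if (accessToken == null || accessToken.isBlank()) {
            throw new IllegalArgumentException("no access token available for outgoing call");
        }
        // The downstream service is expected to validate this bearer token itself.
        return HttpRequest.newBuilder(target)
                .header("Authorization", "Bearer " + accessToken)
                .GET()
                .build();
    }
}
```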

Secrets and Configuration

Another area where Zero Trust has direct implications is secret management. Storing passwords, API keys, or tokens in configuration files or container images violates the principle of “never trust.” Those secrets may leak, be copied, or linger after they should have been rotated.

Quarkus integrates directly with HashiCorp Vault and Kubernetes secrets. Instead of static credentials, your services retrieve what they need securely at runtime. This reduces exposure and aligns with compliance requirements that demand secret rotation and auditing.
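One way to picture "retrieve at runtime" is a small holder that reloads a secret once it is older than a maximum age, so a rotated value is picked up without a redeploy. This is a plain-Java sketch under stated assumptions: `RotatingSecret` is a hypothetical class, and the `loader` stands in for whatever call fetches the value from a secret store.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.function.Supplier;

/** Illustrative holder that re-fetches a secret after maxAge and never prints it. */
final class RotatingSecret {
    private final Supplier<String> loader;    // hypothetical: fetches the current value from a secret store
    private final Supplier<Instant> clock;    // injected so the behavior can be tested
    private final Duration maxAge;
    private String value;
    private Instant loadedAt;

    RotatingSecret(Supplier<String> loader, Duration maxAge, Supplier<Instant> clock) {
        this.loader = loader;
        this.maxAge = maxAge;
        this.clock = clock;
    }

    /** Returns the cached value, reloading it first if it has reached maxAge. */
    synchronized String current() {
        Instant now = clock.get();
        if (value == null || !now.isBefore(loadedAt.plus(maxAge))) {
            value = loader.get();
            loadedAt = now;
        }
        return value;
    }

    /** Keeps the secret out of logs and debugger output. */
    @Override
    public String toString() {
        return "RotatingSecret[***]";
    }
}
```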

Observability and Auditability

Zero Trust is not complete without visibility. If you cannot trace who accessed what, when, and why, you cannot verify that your controls are effective.

Quarkus supports structured logging and integrates with OpenTelemetry. This makes it possible to correlate a user action from the first request, through multiple microservices, to the final database call. Security events, such as denied access, can be logged in JSON format and aggregated into systems like ELK or Loki.

The rule is straightforward: if you cannot explain how a request moved through your system, you do not have Zero Trust.
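A security event that answers "who, what, when, and with which outcome" can be as simple as one JSON line. The sketch below is a hand-rolled, dependency-free illustration. The field names and the `AuditEvent` record are assumptions made for this example, and a real service would normally rely on its logging framework's JSON formatter and take the trace ID from the active OpenTelemetry context.

```java
import java.time.Instant;

/** Illustrative audit record rendered as a single JSON line. */
record AuditEvent(Instant at, String principal, String action, String resource,
                  String outcome, String traceId) {

    String toJson() {
        return "{" + String.join(",",
                field("at", at.toString()),
                field("principal", principal),
                field("action", action),
                field("resource", resource),
                field("outcome", outcome),
                field("traceId", traceId)) + "}";
    }

    private static String field(String name, String value) {
        return "\"" + name + "\":\"" + escape(value) + "\"";
    }

    /** Escapes quotes, backslashes, and control characters so user input cannot break the log line. */
    private static String escape(String s) {
        StringBuilder out = new StringBuilder();
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"' -> out.append("\\\"");
                case '\\' -> out.append("\\\\");
                case '\n' -> out.append("\\n");
                case '\r' -> out.append("\\r");
                case '\t' -> out.append("\\t");
                default -> {
                    if (c < 0x20) out.append(String.format("\\u%04x", (int) c));
                    else out.append(c);
                }
            }
        }
        return out.toString();
    }
}
```

Carrying the same trace ID in the audit line and in the distributed trace is what lets a denied request be followed across services.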

Zero Trust and Messaging

Event-driven systems introduce their own challenges. In many enterprises, Kafka or RabbitMQ form the backbone for communication between services. These brokers often carry sensitive events such as financial transactions or operational signals. In traditional setups, the broker is assumed to be “inside” and therefore trusted. In a Zero Trust world, that assumption is dangerous.

Producers and consumers must authenticate with verifiable identity. Kafka supports SASL mechanisms, including SASL/OAUTHBEARER for OIDC-issued tokens, as well as mutual TLS. Authorization must be fine-grained, ensuring that a service can only publish or consume from specific topics. All traffic should be encrypted in transit, and sensitive payloads may require field-level encryption in addition to TLS.

Quarkus integrates with Kafka via SmallRye Reactive Messaging. Architects can configure connectors to use token-based authentication, enforce naming conventions tied to business domains, and wrap consumption logic with structured logs.

The principle here mirrors the earlier sections: treat your message broker like a public API. Every publish and every consume action must be authenticated, authorized, and auditable.
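Fine-grained topic authorization combined with domain-based naming can be sketched as a prefix rule: a principal may read or write only topics under the prefixes it was granted. The code below is an illustration of that decision logic in plain Java. `TopicPolicy`, `TopicGrant`, and the service and topic names are hypothetical, and in production the authoritative enforcement would live in the broker's ACLs rather than in application code.

```java
import java.util.List;

enum TopicOperation { READ, WRITE }

/** Hypothetical grant: a principal may perform one operation on topics under a prefix. */
record TopicGrant(String principal, String topicPrefix, TopicOperation operation) {}

/** Illustrative deny-by-default policy for publish and consume decisions. */
final class TopicPolicy {
    private final List<TopicGrant> grants;

    TopicPolicy(List<TopicGrant> grants) {
        this.grants = List.copyOf(grants);
    }

    boolean permits(String principal, String topic, TopicOperation operation) {
        return grants.stream().anyMatch(g ->
                g.principal().equals(principal)
                        && g.operation() == operation
                        && topic.startsWith(g.topicPrefix()));
    }
}
```

Tying prefixes to business domains keeps the grant list short and makes an unexpected cross-domain publish easy to spot in review.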

Zero Trust and LLMs

Large Language Models (LLMs) are now entering enterprise systems, but they behave very differently from APIs or message queues. They are probabilistic, not deterministic, and they can leak sensitive data if not controlled. Under Zero Trust, architects must treat LLMs as untrusted components and design strong boundaries around them.

The first boundary is at the service edge. Every request to an AI capability inside your Quarkus application should be authenticated with OIDC and authorized against roles or attributes. This is where you decide which users are even allowed to call an AI service. The Quarkus Security guide provides the foundation for this gate.

The second boundary is data classification. Before passing any input to a model, data must be filtered and masked according to policy. LangChain4j provides guardrails that can enforce input restrictions and output sanitization directly in your application code.
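As a rough idea of what an input filter does before a prompt leaves your boundary, the sketch below masks e-mail addresses and card-like digit runs using plain regular expressions. It does not use the LangChain4j guardrail API. `PromptMasker` and both patterns are illustrative assumptions, and a real policy would be driven by your data-classification rules rather than two hard-coded patterns.

```java
import java.util.regex.Pattern;

/** Illustrative input filter: masks obvious sensitive values before text is sent to a model. */
final class PromptMasker {
    // Simplified patterns for demonstration only; they will miss many real-world formats.
    private static final Pattern EMAIL =
            Pattern.compile("[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\\.[A-Za-z]{2,}");
    // 13 to 16 digits, optionally separated by single spaces or hyphens.
    private static final Pattern CARD =
            Pattern.compile("\\b\\d(?:[ -]?\\d){12,15}\\b");

    static String mask(String input) {
        String masked = EMAIL.matcher(input).replaceAll("[EMAIL]");
        return CARD.matcher(masked).replaceAll("[CARD]");
    }
}
```

For example, `PromptMasker.mask("Refund 4111 1111 1111 1111 for bob@corp.example")` yields `Refund [CARD] for [EMAIL]`, so the model sees the request but not the identifying values.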

The third boundary is the call to the model provider itself. Most providers authenticate at the service level, not the user level.

OpenAI requires an API key configured in Quarkus properties.

Azure OpenAI supports Microsoft Entra ID for managed identity authentication.

Amazon Bedrock relies on AWS IAM roles.

Ollama, typically used locally, can be secured by placing it behind a proxy and injecting authorization headers via a ModelAuthProvider.

The key insight is that you do not forward end-user tokens to these providers. Instead, your service authenticates itself to the provider, and user-level policies are enforced inside your Quarkus boundary.

Finally, observability applies here as well. Prompts, retrieved documents in RAG pipelines, and model outputs should be logged and correlated with the initiating user. Quarkus supports this through its OpenTelemetry integration, making AI calls traceable like any other business transaction.

The guiding principle is clear: LLMs can be useful collaborators, but they cannot be trusted with unrestricted data or access. They must operate inside boundaries defined by authentication, authorization, guardrails, and audit.

Visualizing Zero Trust Across Services

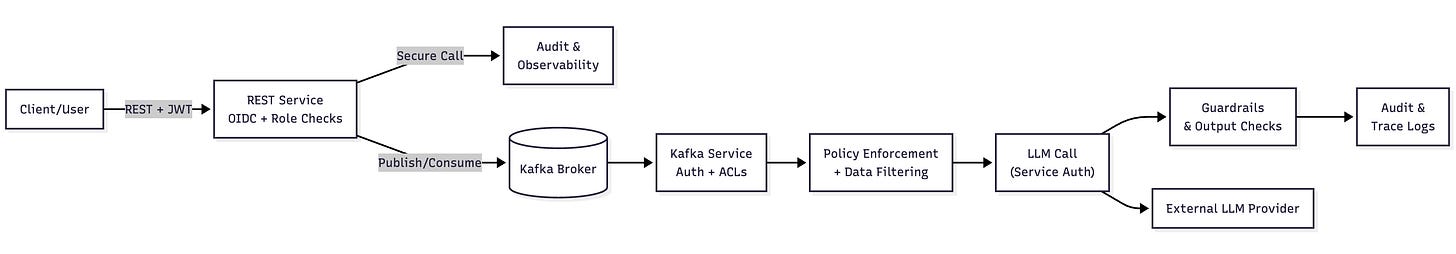

It helps to see how these principles come together in a structured application. Consider a BCE-style system with two Quarkus services: one exposing REST APIs, the other consuming and producing Kafka messages while also embedding an LLM component.

Each boundary introduces security checkpoints. The REST service validates tokens and enforces roles. The Kafka service authenticates producers and consumers with ACLs and TLS. Before calling the LLM, data is filtered, guardrails are applied, and the service authenticates itself to the provider. Every step is logged for observability.

Here’s a diagram that highlights the critical control points:

This horizontal flow makes it clear that Zero Trust is not about a single control at the perimeter but about layered enforcement at every boundary.

The Challenge at Scale

Describing Zero Trust in theory is easy. Implementing it at enterprise scale is hard. Architects must balance productivity with policy, centralization with local enforcement, and performance with security overhead.

Quarkus helps by offering a consistent programming model and integrations that make Zero Trust practical. Security is not bolted on later but included in the way developers build services, connect to brokers, and even experiment with AI.

Strategic Takeaways

Zero Trust is not a slogan. It is a discipline. For Java architects, the path forward is clear:

Treat identity as the new perimeter.

Enforce least privilege across APIs, services, and streams.

Secure secrets and ensure observability by design.

Extend the same principles to LLMs and AI pipelines.

With Quarkus, Zero Trust is not an aspiration. It becomes part of the fabric of your enterprise applications.