Your Out-of-Office Reply, Generated Locally with Java

Building a Quarkus API with LangChain4j and Ollama when email is the last thing you want to write

December 23 in Germany is the day when you promise yourself you will “just quickly” do two things, and then you spend the next six hours sprinting between school, supermarket, and last-minute gift logistics.

This tutorial is built for that day.

We’ll build a tiny Quarkus API that generates out-of-office messages in different tones, using LangChain4j with a local Ollama model: gpt-oss:20b.

No cloud calls. No keys. Just your laptop and a working endpoint.

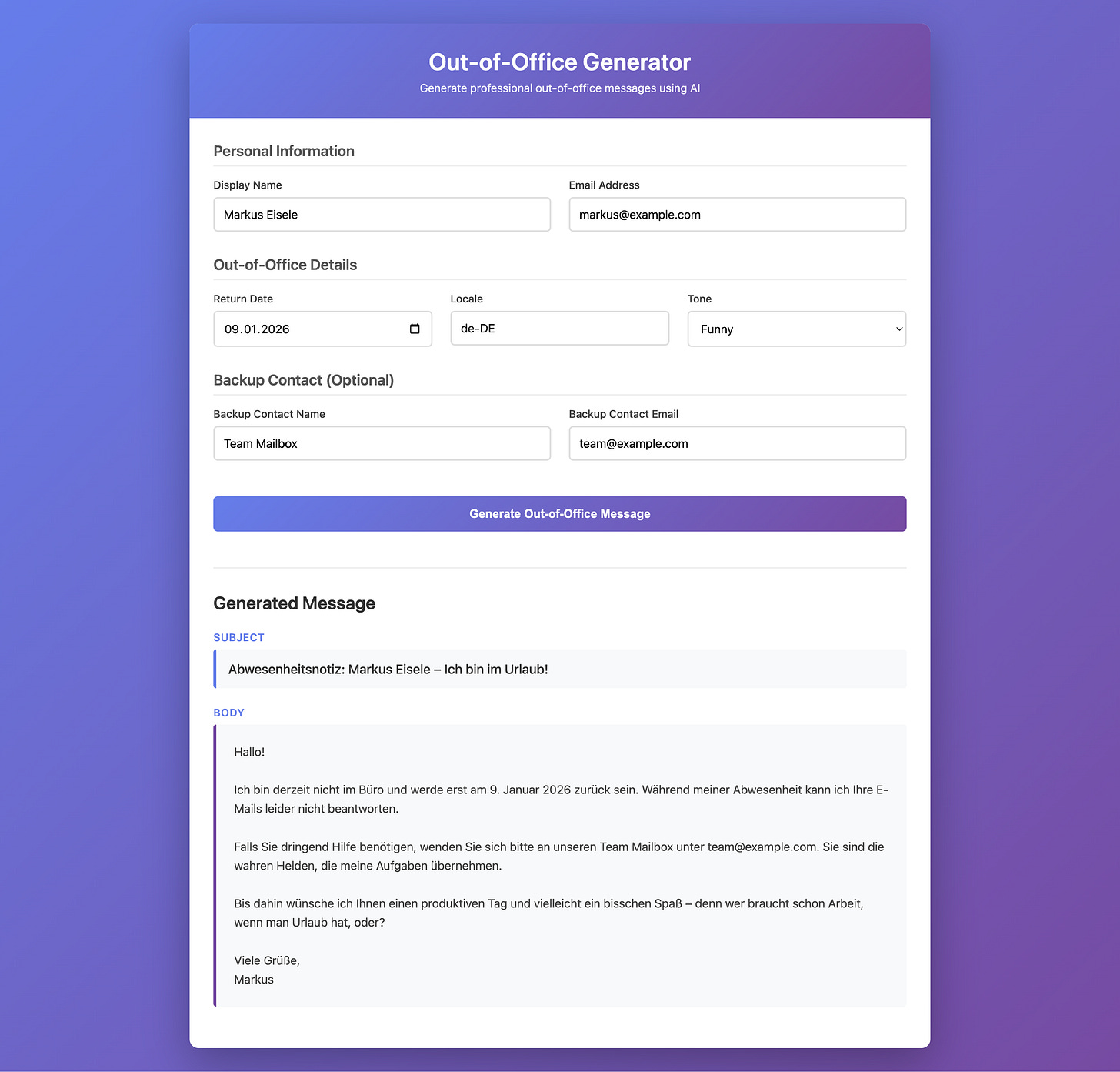

What we’ll build

A small service with:

POST /api/ooo to generate an OOO message as JSON

GET / for a minimal HTML form (Qute), so you can try it without curl

Safe-ish output handling: we ask the model for JSON and validate what we got

Prerequisites

Java 21

Quarkus CLI

Ollama installed and running

Enough RAM for gpt-oss:20b (it’s a big model)

Ollama model:

```shell
ollama pull gpt-oss:20b
```

Quarkus versions we’ll use:

Quarkus 3.30.3 (current as of Dec 10, 2025)

Quarkus LangChain4j Ollama extension 1.4.2
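Before creating the project, it can save some head-scratching to confirm that Ollama is up and the model is really pulled. The sketch below is not part of the app and rests on a few assumptions: Ollama listens on its default port 11434, its native GET /api/tags endpoint lists local models with a "name" field, and a crude regex match on that field is good enough for a smoke test. The class name OllamaCheck is hypothetical.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;
import java.util.regex.Pattern;

// Hypothetical smoke test: does the local Ollama instance list the model we want?
final class OllamaCheck {

    private OllamaCheck() {
    }

    // Whitespace-tolerant text match on "name": "<model>" in the /api/tags JSON.
    // Good enough for a smoke test; use a real JSON parser for anything serious.
    static boolean hasModel(String tagsJson, String model) {
        Pattern p = Pattern.compile("\"name\"\\s*:\\s*\"" + Pattern.quote(model) + "\"");
        return p.matcher(tagsJson).find();
    }

    public static void main(String[] args) throws InterruptedException {
        String model = args.length > 0 ? args[0] : "gpt-oss:20b";
        HttpClient client = HttpClient.newBuilder()
                .connectTimeout(Duration.ofSeconds(5))
                .build();
        // Ollama's native API lists locally pulled models at GET /api/tags
        HttpRequest request = HttpRequest.newBuilder(URI.create("http://localhost:11434/api/tags"))
                .GET()
                .build();
        try {
            HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
            if (response.statusCode() != 200) {
                System.out.println("Ollama answered with HTTP " + response.statusCode());
            } else if (hasModel(response.body(), model)) {
                System.out.println(model + " is available");
            } else {
                System.out.println(model + " not found; run: ollama pull " + model);
            }
        } catch (IOException e) {
            System.out.println("Could not reach Ollama on localhost:11434: " + e);
        }
    }
}
```

Run it with java OllamaCheck.java (single-file source launch) while Ollama is running; if it reports the model as missing, repeat the ollama pull step.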

Create the Quarkus project

```shell
quarkus create app com.example:ooo-generator \
  --java=21 \
  --no-code
cd ooo-generator
```

The quarkus create app syntax is documented in the Quarkus CLI guides. Learn more about the Quarkus CLI in my older post.

Now add extensions:

```shell
quarkus ext add \
  quarkus-rest-jackson \
  quarkus-qute \
  quarkus-rest-qute \
  io.quarkiverse.langchain4j:quarkus-langchain4j-ollama \
  quarkus-hibernate-validator
```

Configure Ollama and the model

Edit src/main/resources/application.properties:

```properties
# LangChain4j -> Ollama
quarkus.langchain4j.ollama.chat-model.model-id=gpt-oss:20b
quarkus.langchain4j.ollama.chat-model.temperature=0.2

# Local models can be slow on laptops
quarkus.langchain4j.timeout=90s
```

Important: this uses the native Ollama API (no /v1) at the default base URL, http://localhost:11434. Don’t accidentally point it at an OpenAI-compatible endpoint unless you mean to.
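To make “native Ollama API” concrete, here is a minimal sketch that talks to Ollama directly, outside Quarkus, using only java.net.http. It assumes the default base URL http://localhost:11434 and Ollama’s native POST /api/chat endpoint (an OpenAI-compatible client would use /v1/chat/completions instead). The class and method names are hypothetical, the JSON body is hand-built with only minimal escaping, and the 90-second request timeout simply mirrors the quarkus.langchain4j.timeout value above.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

// Hypothetical smoke test against Ollama's native chat API (not used by the Quarkus app).
final class OllamaNativeChat {

    static final String BASE_URL = "http://localhost:11434";

    private OllamaNativeChat() {
    }

    // Minimal JSON string escaping (quotes, backslashes, newlines, CR, tabs only).
    static String jsonString(String s) {
        StringBuilder sb = new StringBuilder("\"");
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"' -> sb.append("\\\"");
                case '\\' -> sb.append("\\\\");
                case '\n' -> sb.append("\\n");
                case '\r' -> sb.append("\\r");
                case '\t' -> sb.append("\\t");
                default -> sb.append(c);
            }
        }
        return sb.append('"').toString();
    }

    // Builds a non-streaming POST /api/chat request (native API, no /v1 prefix).
    static HttpRequest chatRequest(String model, String prompt) {
        String body = "{\"model\":" + jsonString(model)
                + ",\"messages\":[{\"role\":\"user\",\"content\":" + jsonString(prompt) + "}]"
                + ",\"stream\":false}";
        return HttpRequest.newBuilder(URI.create(BASE_URL + "/api/chat"))
                .timeout(Duration.ofSeconds(90)) // same budget as quarkus.langchain4j.timeout
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(body))
                .build();
    }

    public static void main(String[] args) throws Exception {
        HttpRequest request = chatRequest("gpt-oss:20b", "Reply with the single word: ready");
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        // The raw JSON response carries the model's answer in message.content
        System.out.println(response.statusCode() + " " + response.body());
    }
}
```

If this answers but the Quarkus app does not, the problem is more likely in the Quarkus configuration than in Ollama itself.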

Define the API contract

Create src/main/java/com/example/ooo/api/OooRequest.java:

```java
package com.example.ooo.api;

import java.time.LocalDate;

import jakarta.validation.constraints.Email;
import jakarta.validation.constraints.Future;
import jakarta.validation.constraints.NotBlank;
import jakarta.validation.constraints.NotNull;

public record OooRequest(
        @NotBlank String displayName,
        @Email @NotBlank String email,
        @NotNull @Future LocalDate returnDate,
        @NotBlank String locale,
        @NotNull Tone tone,
        String backupContactName,
        @Email String backupContactEmail) {

    public enum Tone {
        FORMAL,
        FRIENDLY,
        DRY,
        FUNNY
    }
}
```

Create src/main/java/com/example/ooo/api/OooResponse.java:

```java
package com.example.ooo.api;

public record OooResponse(
        String subject,
        String body
) {}
```

We’ll also add a small “raw” model output container:

Create src/main/java/com/example/ooo/llm/OooDraft.java:

```java
package com.example.ooo.llm;

public record OooDraft(
        String subject,
        String body
) {}
```

Create the LangChain4j AI service

We’ll ask the model for strict JSON. LangChain4j supports structured outputs via JSON schema in general, but providers differ and local models can still drift, so we’ll validate and parse defensively.
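Even with strict instructions, local models occasionally wrap the JSON in a markdown fence or add a friendly sentence before it. One cheap extra layer of defense, sketched here as an optional assumption rather than something the service below requires, is to cut the raw text down to its outermost braces before handing it to Jackson. The class JsonExtractor is hypothetical, and it is a best-effort filter, not a parser: it takes everything from the first { to the last }, so stray braces in surrounding prose would confuse it.

```java
import java.util.Optional;

// Hypothetical helper: trims model output down to the outermost JSON object.
final class JsonExtractor {

    private JsonExtractor() {
    }

    // Returns the substring from the first '{' to the last '}', or empty if there is none.
    // Copes with markdown fences and chatty preambles; it does not validate the JSON.
    static Optional<String> outermostObject(String raw) {
        if (raw == null) {
            return Optional.empty();
        }
        int start = raw.indexOf('{');
        int end = raw.lastIndexOf('}');
        if (start < 0 || end <= start) {
            return Optional.empty();
        }
        return Optional.of(raw.substring(start, end + 1));
    }

    public static void main(String[] args) {
        String fence = "`".repeat(3); // a markdown code fence, built indirectly
        String raw = "Sure! Here is your message:\n"
                + fence + "json\n"
                + "{\"subject\": \"Out of office\", \"body\": \"Back on Monday.\"}\n"
                + fence + "\n";
        // prints {"subject": "Out of office", "body": "Back on Monday."}
        System.out.println(outermostObject(raw).orElse("<no JSON found>"));
    }
}
```

In the service layer you could apply it right before objectMapper.readValue(...) and fall back to the untouched string when it returns empty, so parseOrThrow still reports the original output on failure.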

Create src/main/java/com/example/ooo/llm/OooWriter.java:

```java
package com.example.ooo.llm;

import dev.langchain4j.service.SystemMessage;
import dev.langchain4j.service.UserMessage;
import io.quarkiverse.langchain4j.RegisterAiService;

@RegisterAiService
public interface OooWriter {

    @SystemMessage("""
            You write out-of-office replies for enterprise email systems.

            Rules:
            - Output MUST be valid JSON.
            - Output MUST contain exactly two top-level string fields: "subject" and "body".
            - Do not include markdown.
            - Do not include explanations.
            - Keep it realistic for corporate environments.
            """)
    // Quarkus LangChain4j renders these templates with Qute: {displayName} etc. are
    // bound to the method parameters of the same name. Qute leaves a '{' followed by
    // whitespace as literal text, which is why the JSON example starts with "{ ".
    @UserMessage("""
            Create an out-of-office message.

            Input:
            - Name: {displayName}
            - Email: {email}
            - Return date (ISO): {returnDate}
            - Locale: {locale}
            - Tone: {tone}
            - Backup contact name: {backupContactName}
            - Backup contact email: {backupContactEmail}

            Output JSON format:
            { "subject": "...", "body": "..." }
            """)
    String draft(
            String displayName,
            String email,
            String returnDate,
            String locale,
            String tone,
            String backupContactName,
            String backupContactEmail);
}
```

Implement the service layer with validation and parsing

Create src/main/java/com/example/ooo/service/OooService.java:

```java
package com.example.ooo.service;

import java.time.format.DateTimeFormatter;
import java.util.Objects;

import com.example.ooo.api.OooRequest;
import com.example.ooo.api.OooResponse;
import com.example.ooo.llm.OooDraft;
import com.example.ooo.llm.OooWriter;
import com.fasterxml.jackson.core.JsonProcessingException;
import com.fasterxml.jackson.databind.ObjectMapper;

import jakarta.enterprise.context.ApplicationScoped;
import jakarta.inject.Inject;

@ApplicationScoped
public class OooService {

    private static final DateTimeFormatter ISO = DateTimeFormatter.ISO_LOCAL_DATE;

    @Inject
    OooWriter writer;

    @Inject
    ObjectMapper objectMapper;

    public OooResponse generate(OooRequest request) {
        // Blank optional fields are normalized to null before they reach the prompt
        String raw = writer.draft(
                request.displayName(),
                request.email(),
                request.returnDate().format(ISO),
                request.locale(),
                request.tone().name(),
                emptyToNull(request.backupContactName()),
                emptyToNull(request.backupContactEmail()));

        OooDraft draft = parseOrThrow(raw);
        String subject = sanitizeLine(draft.subject());
        String body = sanitizeBody(draft.body());
        return new OooResponse(subject, body);
    }

    // Parses the model output and fails fast if it isn't JSON or lacks a required field
    private OooDraft parseOrThrow(String raw) {
        try {
            OooDraft draft = objectMapper.readValue(raw, OooDraft.class);
            // readValue returns null for the literal JSON "null"
            if (draft == null || draft.subject() == null || draft.subject().isBlank()) {
                throw new IllegalArgumentException("Model output missing 'subject'");
            }
            if (draft.body() == null || draft.body().isBlank()) {
                throw new IllegalArgumentException("Model output missing 'body'");
            }
            return draft;
        } catch (JsonProcessingException e) {
            throw new IllegalArgumentException("Model output was not valid JSON: " + raw, e);
        }
    }

    // Subject: single line, at most 140 characters
    private static String sanitizeLine(String s) {
        String v = Objects.toString(s, "").trim();
        v = v.replace("\r", "").replace("\n", " ");
        return v.length() > 140 ? v.substring(0, 140).trim() : v;
    }

    // Body: line breaks kept (CR stripped), at most 2000 characters
    private static String sanitizeBody(String s) {
        String v = Objects.toString(s, "").trim();
        v = v.replace("\r", "");
        if (v.length() > 2000) {
            v = v.substring(0, 2000).trim();
        }
        return v;
    }

    private static String emptyToNull(String s) {
        if (s == null) {
            return null;
        }
        String t = s.trim();
        return t.isEmpty() ? null : t;
    }
}
```

This is intentionally boring code. On December 23, boring is good.
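One edge the boring code doesn’t cover: substring(0, 140) counts UTF-16 code units, so a FUNNY subject that ends in an emoji can be cut between the two halves of a surrogate pair, leaving a broken character. If that matters to you, a code-point-aware variant is small. This is a sketch, not a drop-in from any library: the class SafeTruncate and its method are hypothetical, and it still doesn’t respect multi-code-point grapheme clusters such as flag or family emoji.

```java
// Hypothetical replacement for the substring(0, max) calls in sanitizeLine/sanitizeBody.
final class SafeTruncate {

    private SafeTruncate() {
    }

    // Truncates to at most maxCodePoints Unicode code points, never splitting a surrogate pair.
    static String truncateCodePoints(String s, int maxCodePoints) {
        if (s.codePointCount(0, s.length()) <= maxCodePoints) {
            return s;
        }
        int end = s.offsetByCodePoints(0, maxCodePoints);
        return s.substring(0, end).trim();
    }

    public static void main(String[] args) {
        // "Out of office " is 14 chars; the Christmas tree emoji (U+1F384) adds a surrogate pair
        String subject = "Out of office \uD83C\uDF84";
        System.out.println(subject.substring(0, 15));        // ends in a lone high surrogate
        System.out.println(truncateCodePoints(subject, 15)); // keeps the whole emoji
    }
}
```

Swapping it in would mean calling truncateCodePoints(v, 140) and truncateCodePoints(v, 2000) where the service currently uses substring.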

Expose the REST endpoint

Create src/main/java/com/example/ooo/api/OooResource.java:

```java
package com.example.ooo.api;

import com.example.ooo.service.OooService;

import jakarta.inject.Inject;
import jakarta.validation.Valid;
import jakarta.ws.rs.Consumes;
import jakarta.ws.rs.POST;
import jakarta.ws.rs.Path;
import jakarta.ws.rs.Produces;
import jakarta.ws.rs.core.MediaType;

@Path("/api/ooo")
@Consumes(MediaType.APPLICATION_JSON)
@Produces(MediaType.APPLICATION_JSON)
public class OooResource {

    @Inject
    OooService service;

    // @Valid runs Bean Validation first; invalid requests get a 400 before the model is called
    @POST
    public OooResponse generate(@Valid OooRequest request) {
        return service.generate(request);
    }
}
```

Add a tiny Qute UI for quick testing

Add src/main/java/com/example/ooo/web/HomeResource.java:

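```java
package com.example.ooo.web;

import io.quarkus.qute.Template;
import io.quarkus.qute.TemplateInstance;
import jakarta.inject.Inject;
import jakarta.ws.rs.GET;
import jakarta.ws.rs.Path;
import jakarta.ws.rs.Produces;
import jakarta.ws.rs.core.MediaType;

@Path("/")
public class HomeResource {

    // Resolved by field name: src/main/resources/templates/home.html
    @Inject
    Template home;

    @GET
    @Produces(MediaType.TEXT_HTML)
    public TemplateInstance page() {
        return home.instance();
    }
}
```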

Add src/main/resources/templates/home.html:

```html
<!doctype html>
<html lang="en">
<head>
  <meta charset="utf-8" />
  <meta name="viewport" content="width=device-width,initial-scale=1" />
  <title>OOO Generator</title>
  <style>
    body {
      font-family: system-ui, sans-serif;
      margin: 2rem;
      max-width: 900px;
    }
    label {
      display: block;
      margin-top: 1rem;
    }
    input,
    select {
      width: 100%;
      padding: .6rem;
      font-size: 1rem;
    }
    button {
      margin-top: 1.2rem;
      padding: .8rem 1.2rem;
      font-size: 1rem;
      cursor: pointer;
    }
    pre {
      white-space: pre-wrap;
      padding: 1rem;
      border: 1px solid #ddd;
      border-radius: 8px;
    }
  </style>
</head>
<body>
  <h1>Out-of-Office Generator</h1>
  <p>
    This calls a local Ollama model via Quarkus LangChain4j.
    It generates JSON: subject + body.
  </p>

  <form id="f">
    <label>Display name <input name="displayName" value="Markus Eisele" required></label>
    <label>Email <input name="email" value="markus@example.com" required></label>
    <label>Return date <input name="returnDate" type="date" required></label>
    <label>Locale <input name="locale" value="de-DE" required></label>
    <label>Tone
      <select name="tone">
        <option>FORMAL</option>
        <option selected>FRIENDLY</option>
        <option>DRY</option>
        <option>FUNNY</option>
      </select>
    </label>
    <label>Backup contact name <input name="backupContactName" value="Team Mailbox"></label>
    <label>Backup contact email <input name="backupContactEmail" value="team@example.com"></label>
    <button type="submit">Generate</button>
  </form>

  <h2>Result</h2>
  <pre id="out">(nothing yet)</pre>

  <script>
    const form = document.getElementById("f");
    const out = document.getElementById("out");

    form.addEventListener("submit", async (e) => {
      e.preventDefault();
      out.textContent = "Calling the model...";
      const data = Object.fromEntries(new FormData(form).entries());
      const res = await fetch("/api/ooo", {
        method: "POST",
        headers: { "Content-Type": "application/json" },
        body: JSON.stringify(data)
      });
      const text = await res.text();
      // Show the HTTP status on errors (400 for validation, 500 if the model output can't be parsed)
      out.textContent = res.ok ? text : "HTTP " + res.status + ": " + text;
    });
  </script>
</body>
</html>
```

Run it

Start Ollama (if it’s not already running), then:

```shell
quarkus dev
```

Open: http://localhost:8080/

Or call the API directly:

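```shell
curl -s http://localhost:8080/api/ooo \
  -H 'content-type: application/json' \
  -d '{
    "displayName": "Markus Eisele",
    "email": "markus@example.com",
    "returnDate": "2025-12-29",
    "locale": "de-DE",
    "tone": "FRIENDLY",
    "backupContactName": "Team Mailbox",
    "backupContactEmail": "team@example.com"
  }' | jq
```

If that returnDate is already in the past when you try this, pick a later one: the @Future constraint on OooRequest rejects past dates with HTTP 400.

Expected shape: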

```json
{
  "subject": "Abwesenheitsnotiz: Markus Eisele",
  "body": "Hallo,\n\nvielen Dank für Ihre Nachricht. Ich bin derzeit nicht im Büro und werde am 29. Dezember 2025 zurück sein. In der Zwischenzeit steht Ihnen unser Team-Mailbox gerne zur Verfügung. Sie erreichen uns unter team@example.com.\n\nMit freundlichen Grüßen,\nMarkus Eisele"
}
```

Production notes

Keep these in mind if you turn this into something real:

Local models can be slow. Give them time. That’s why we set quarkus.langchain4j.timeout=90s.

Never assume the model will obey formatting rules. We parse JSON and fail fast if it doesn’t. If you need higher reliability, consider JSON schema structured outputs and add retry logic when parsing fails.

Don’t expose this endpoint publicly as-is. It’s still an LLM behind an HTTP API. Put auth in front of it, rate limit it, and log carefully.
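For the retry part of that advice, a bounded loop around the generate-and-parse step is often enough. This is a sketch under assumptions, not an API from Quarkus or LangChain4j: the Retry class is hypothetical, it retries only on IllegalArgumentException (what parseOrThrow throws for unusable model output), and it has no backoff, which is usually fine for a local model but not for a rate-limited remote one.

```java
import java.util.function.Supplier;

// Hypothetical helper: re-runs an LLM call when its output fails to parse.
final class Retry {

    private Retry() {
    }

    // Calls the supplier up to maxAttempts times; rethrows the last failure if all attempts fail.
    static <T> T onBadOutput(Supplier<T> call, int maxAttempts) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be >= 1");
        }
        IllegalArgumentException last = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return call.get();
            } catch (IllegalArgumentException e) {
                // Only parsing/validation failures are retried; other exceptions propagate.
                last = e;
            }
        }
        throw last;
    }

    public static void main(String[] args) {
        int[] calls = {0};
        String result = onBadOutput(() -> {
            calls[0]++;
            if (calls[0] < 3) {
                throw new IllegalArgumentException("Model output was not valid JSON");
            }
            return "{\"subject\":\"ok\",\"body\":\"ok\"}";
        }, 3);
        // prints {"subject":"ok","body":"ok"} after 3 calls
        System.out.println(result + " after " + calls[0] + " calls");
    }
}
```

In OooService you might wrap both writer.draft(...) and parseOrThrow(raw) in the supplier, so each attempt asks the model again instead of re-parsing the same bad output. Keep the attempt count low: every retry is another slow local generation.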

Summary

You now have a small Quarkus service that generates out-of-office replies using LangChain4j and a local Ollama model (gpt-oss:20b), plus a tiny Qute page for quick testing. It’s simple, fast to demo, and realistic enough to reuse later.

Now go fix the last-minute kid stuff, with one less email to write.